🔮 Exponential View #561: Token scaling; frontier revenues; Spotify engineers; century bonds, T-cells vs Alzheimer’s & glass replaces silicon++

Hi all,

Welcome to the Exponential View Sunday briefing. Today’s edition has one message: update your priors.

Let’s go!

Zooming out on intelligence

This week, I used 97 million AI tokens in a single day. Three years ago, I was using about 1,000 tokens a day, a novelty. At 10,000, AI became my tool. At 100,000, a collaborator. At 1 million, I was building workflows. At 10 million, processes. At nearly 100 million – something closer to a workforce. In this weekend’s essay, I map what happens at each order of magnitude – and why the next zero will force us to confront a harder question: what are we prepared to delegate?

Pricing the unprecedented

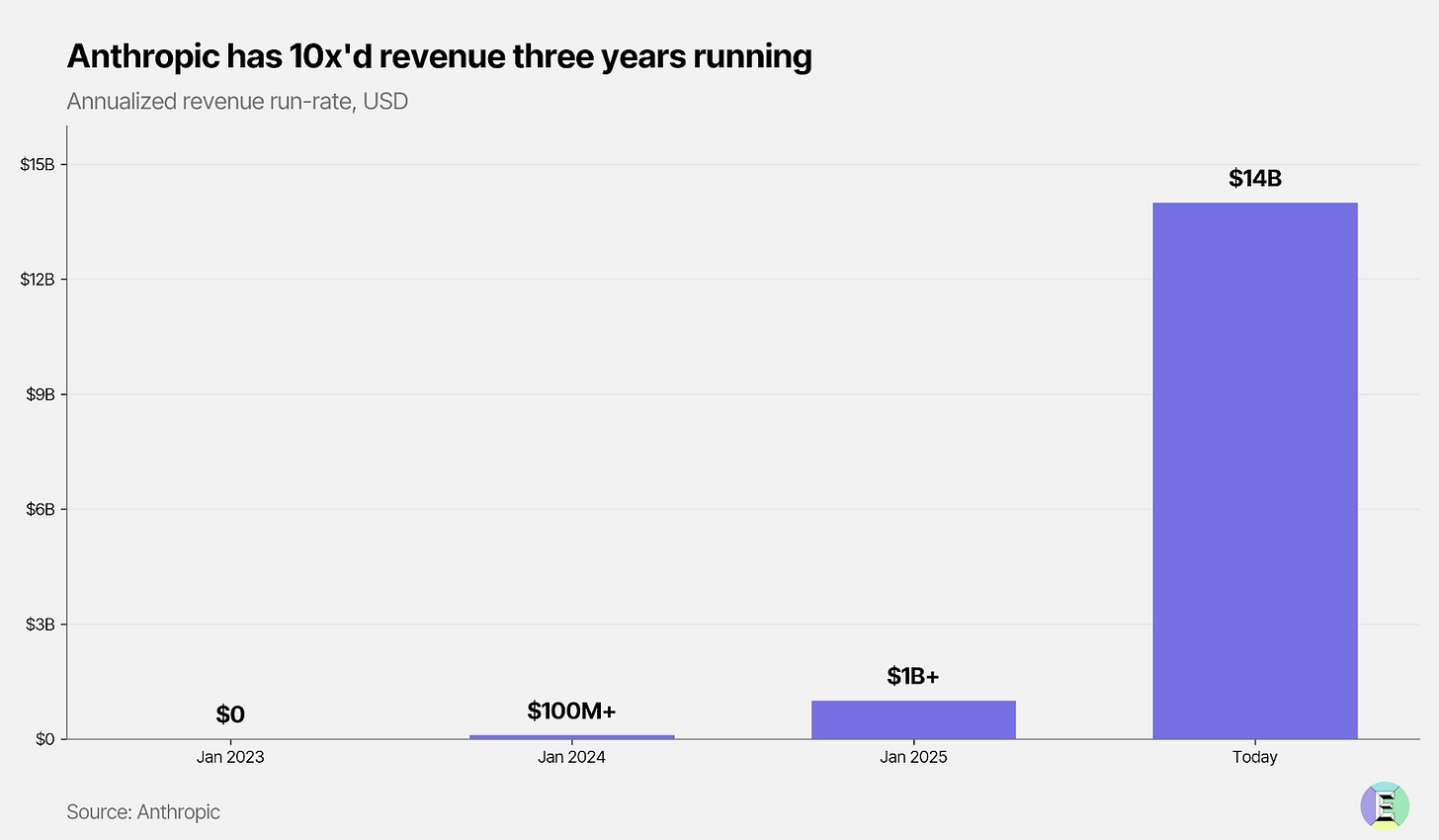

Anthropic’s latest numbers are astonishing. The firm's revenues grew roughly tenfold in each of the last three years, reaching a $14 billion annualised run rate – about 900% growth per year, sustained for three consecutive years. 900%.
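For readers who want to check the arithmetic, here is a minimal sketch of how "tenfold" maps to "900%" and what three such years compound to. It takes the rounded figures quoted above at face value; the implied starting run rate is a back-of-envelope derivation, not a reported number, and the function names are purely illustrative.

```python
def growth_pct_from_multiple(multiple: float) -> float:
    """Convert a growth multiple (e.g. 10x) into percentage growth (e.g. 900%)."""
    return (multiple - 1) * 100


def compounded_multiple(annual_multiple: float, years: int) -> float:
    """Total multiple after compounding the same annual multiple for several years."""
    return annual_multiple ** years


# Rounded figures from the text above; treat them as approximations.
annual_multiple = 10.0   # "roughly tenfold" each year
years = 3                # "three consecutive years"
run_rate_now = 14e9      # "$14 billion annualised run rate"

pct_growth = growth_pct_from_multiple(annual_multiple)
total_multiple = compounded_multiple(annual_multiple, years)

# Assumption: exactly 10x every year, which the text only claims "roughly".
implied_start = run_rate_now / total_multiple

print(f"Annual growth: {pct_growth:.0f}%")
print(f"Compounded over {years} years: {total_multiple:,.0f}x")
print(f"Implied starting run rate: ${implied_start / 1e6:,.0f} million")
```

On these rounded inputs, three tenfold years compound to a thousandfold increase, which would put the starting run rate in the low tens of millions of dollars.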

OpenAI grew 250% in 2025, still impressive. But it’s worth noting that it started 2025 with monthly revenues about six times higher than Anthropic’s, and ended the year only about fifty percent bigger. This is the impact of Anthropic’s focused enterprise strategy. Claude Code has become a $3 billion business on its own, and it doubled in January of this year alone. At Exponential View, Anthropic’s models account for the majority of our API usage. Gemini does some heavy lifting, followed by DeepSeek. Very little OpenAI.¹

And yet, we have a company which is growing at 250% while on a $20 billion revenue tear.

The obvious question is whether growth at anything like this pace can persist. Last year, OpenAI projected $145 billion in revenue by 2029. We looked at the numbers – mathematically possible, but not likely.
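To make "mathematically possible, but not likely" concrete, here is a rough sketch of the compound annual growth rate the projection would require. It assumes the roughly $20 billion figure mentioned above is the end-of-2025 baseline and that 2029 is four years of growth away; both are simplifying assumptions for illustration, not figures from OpenAI’s own model.

```python
def required_cagr(start: float, target: float, years: int) -> float:
    """Compound annual growth rate needed to go from start to target in `years` years."""
    return (target / start) ** (1 / years) - 1


# Assumption: ~$20B is treated as the end-of-2025 revenue baseline.
start_revenue = 20e9
# Projection quoted in the text: $145B in revenue by 2029.
target_revenue = 145e9
# Assumption: four years of growth between end-2025 and 2029.
years = 4

cagr = required_cagr(start_revenue, target_revenue, years)
overall_multiple = target_revenue / start_revenue

print(f"Overall multiple needed: {overall_multiple:.2f}x")
print(f"Required growth per year: {cagr:.0%}")
```

Under these assumptions the target needs a little over a sevenfold increase, or growth in the region of 64% a year for four years running. That is far below the 250% OpenAI posted in 2025, but it has to hold on a much larger base.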

The bear case for OpenAI is, thus, boringly easy.

But here is the counterargument. Models are models, and while some users (like me) swap quickly, others (especially enterprises) won’t – and OpenAI has brand awareness and an enterprise footprint that will pay dividends.

What about open-source? Consider MiniMax’s new M2.5 model, for instance, which claims frontier-level performance at $1/hour – won’t that win API business from OpenAI? Perhaps, but the market is expanding quickly. And closed-source models will retain some technical edge while also being wrapped in the service and support that open models lack. I reckon that for the most important, really high-value tasks, firms will pay a lot, and that is where closed, proprietary models will keep their edge.

OpenAI has obviously had a tumultuous six months. And Anthropic, which has performed so well on the back of consistent messaging, progressively better products and far less self-administered hype, has had a roaring 2025. My view: OpenAI stumbled rather publicly, but it’s too early to draw conclusions. And the same goes for Anthropic, despite its more consistent acceleration.

See also:

OpenAI launched GPT-5.3-Codex-Spark. 15x faster code generation and the first OpenAI model running on Cerebras chips, not Nvidia.

Amazon engineers are rejecting Amazon’s own coding tool, petitioning instead for Claude Code.

Anthropic pledges to cover electricity price increases caused by its data centers.

Scaling the employee

Spotify co-CEO Gustav Söderström disclosed on Wednesday’s earnings call that the company’s top developers have not written a line of code since December. They direct an internal AI system called Honk, built on Claude Code, which ships features while engineers review output from their phones.

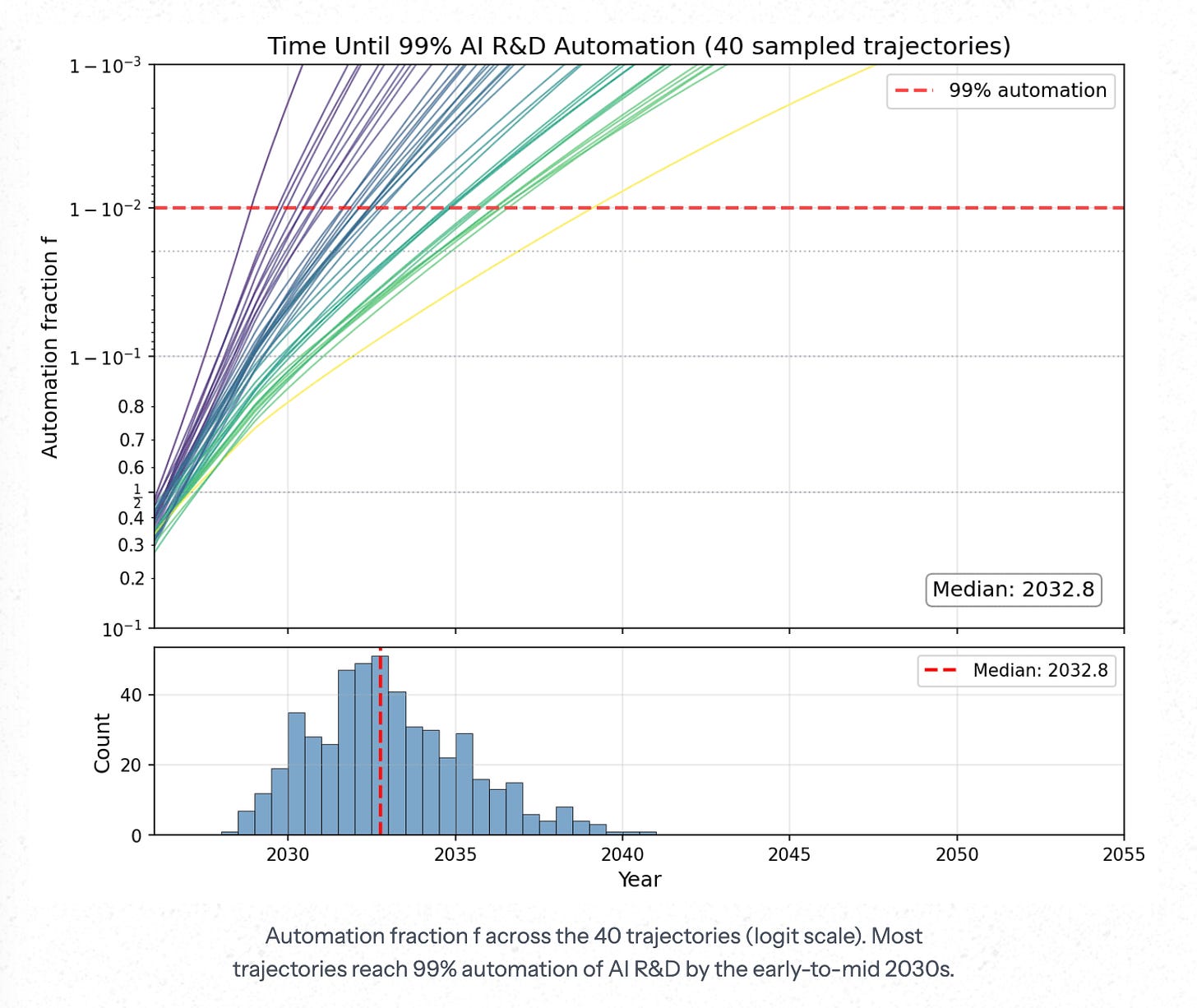

METR’s Thomas Kwa published a model this week projecting almost-full automation of AI R&D around 2032. The model’s central constraint is diminishing returns: each additional improvement in AI coding capability becomes progressively harder to achieve. The timeline to full automation depends on how steep those diminishing returns are; if they are strong, progress could stretch out by years.

These two facts are worth holding together. The Spotify update is about AI writing adequate code fast enough that the human role shifts from production to specification and judgement – a shift we identified and dissected on multiple occasions (see How soon will AI work a full day? or Judgement is the talent bottleneck or my conversation with Ben Zweig).

But this shift does not mean less work. Research from UC Berkeley tracked AI-augmented employees over eight months and found that productivity rose but so did voluntary workload. AI made it easier to start and complete tasks, so workers absorbed more of them. The tools expand the scope of work faster than they compress it. The Spotify engineer reviewing AI output from their phone on the morning commute is not relaxing.

What looks like higher productivity in the short run can mask silent workload creep and growing cognitive strain as employees juggle multiple AI-enabled workflows. Because the extra effort is voluntary and often framed as enjoyable experimentation, it is easy for leaders to overlook how much additional load workers are carrying.

And the friction that might naturally cap this cycle is disappearing. OpenClaw has unlocked software that modifies itself: it maintains a patchwork of markdown notes as pseudo-memory. When I tell it I dislike a formatting choice, it writes that preference down. Next session, it remembers. Like Guy Pearce in Memento, leaving notes for a future self that starts fresh each morning².