🔮 AI scaling constraints; importing sunshine; cognitive capital & AI; startup trap, geo engineering & Roblox ++ #488

Hi, it’s Azeem. Welcome to our Sunday newsletter where I help you ask the right questions about technology and the near future. For more, see Top posts | Book | Follow me on Notes

Ideas of the week

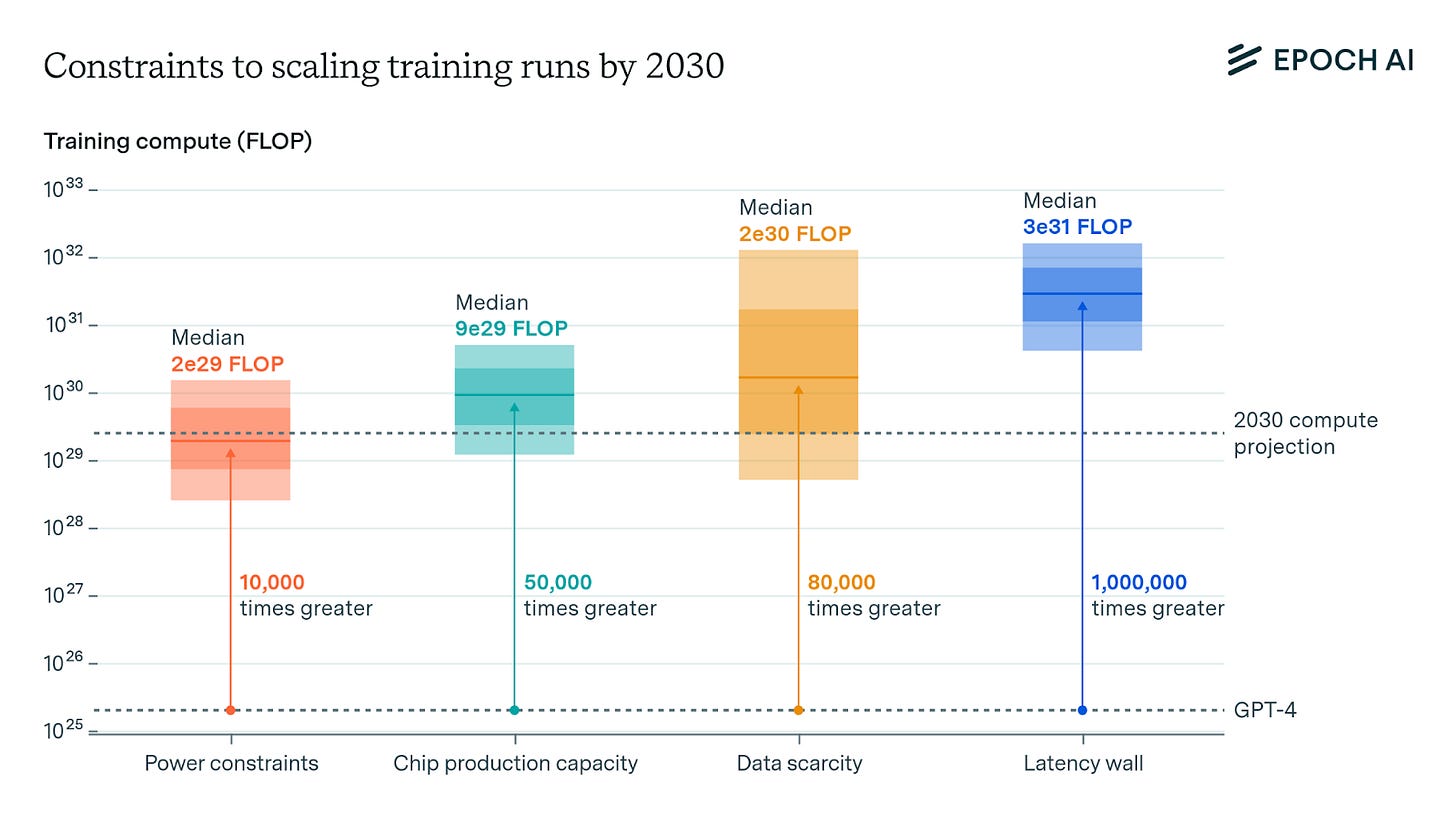

Asymptotic ascent? I recently argued that a colossal $100 billion AI model could materialise by 2027 and that scaling beyond this is less certain. Epoch AI’s research supports this trajectory, forecasting that AI training runs could reach 2e29 floating-point operations (FLOP) by 2030, a scale that would require hardware costing roughly $250 billion. For comparison, that’s over five times Microsoft’s current annual capex[1]. The study finds no insurmountable technical barriers, despite high uncertainty. Power availability (a US distributed network could likely accommodate 2GW to 45GW in 2030) and chip production pose challenges but aren’t showstoppers. Data scarcity and computational latency are less constraining, though estimates of data scarcity span four orders of magnitude. The real limit is economics. Will companies invest $250 billion for incremental large language model improvements? Our willingness to justify massive investments may be the ultimate constraint.

The most important technology. I expect GLP-1 agonists to have a more meaningful impact on Western economies and societies than AI, renewables and other exponential technologies in the next two years. I outline the case for GLP-1s and future scenarios here:

🔮 Today’s most important technology

GLP-1 agonists, led by brands like Ozempic and Mounjaro, have sparked a global phenomenon far beyond their original purpose. As prescriptions quadruple and waiting lists grow, these drugs are poised to reshape not just waistlines, but entire economies.

Can sunshine be imported? For energy-hungry Singapore, the answer may soon be yes.