🔮 Ten charts to understand the Exponential Age

This week marks the 500th edition of the Sunday newsletter. My aim all along has been to show that we live in extraordinary times.

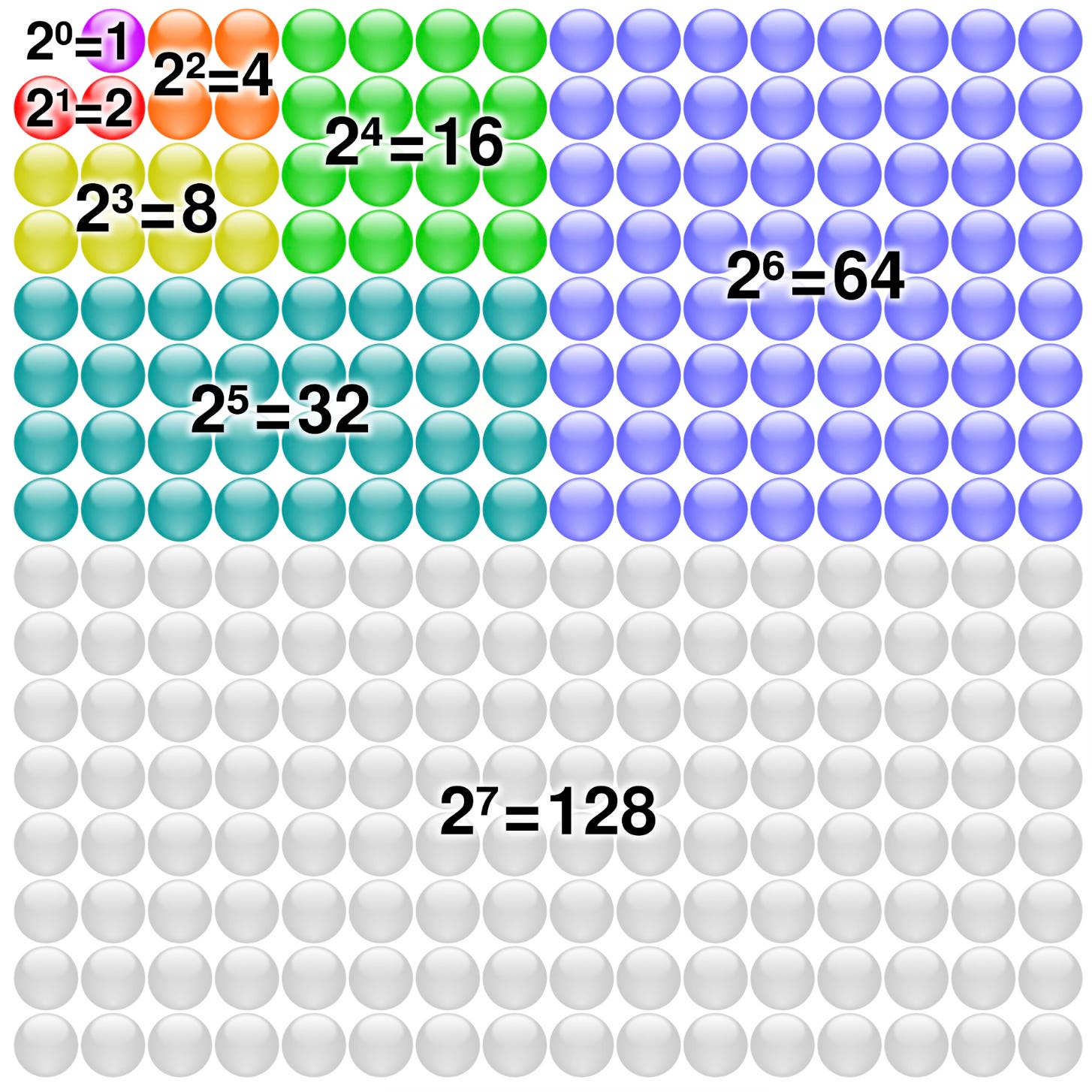

As I mentioned yesterday, I sent the first edition to 20 friends in 2015 and called it Square 33. The name was a nod to the chessboard-and-rice parable of exponential growth, in which the number of grains of rice doubles on each successive square. Across the first 32 squares, the numbers stay relatively modest and don’t feel world-changing. But crossing into the 33rd square launches us into numbers that seem incomprehensible.
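To make the doubling concrete, here is a minimal Python sketch. It assumes the common telling of the parable, with one grain on the first square and twice as many on each square after it. The function names are purely illustrative.

```python
# Illustrative sketch of the chessboard-and-rice arithmetic, assuming
# one grain on square 1 and a doubling on every square after that.

def grains_on_square(n: int) -> int:
    """Grains placed on square n (1-indexed): 2**(n - 1)."""
    return 2 ** (n - 1)

def grains_through_square(n: int) -> int:
    """Cumulative grains on squares 1..n, which sums to 2**n - 1."""
    return 2 ** n - 1

first_32 = grains_through_square(32)
square_33 = grains_on_square(33)
whole_board = grains_through_square(64)

print(f"Squares 1-32 combined: {first_32:,}")
print(f"Square 33 alone:       {square_33:,}")
print(f"All 64 squares:        {whole_board:,}")
```

Under these assumptions, square 33 alone holds 4,294,967,296 grains, one more than the first 32 squares put together, because the running total through square n is 2^n - 1. Every square from here on adds more than everything that came before it.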

We are in square 33.

In today’s retrospective edition, we’ll step back to explore the building blocks of how we understand the Exponential Age. Let’s go!