🔮 Exponential View #557: Starlink, Iran & abundance; AI’s exploration deficit; AGI vibes, aliens, Merge Labs & regulating companions++

Your Sunday briefing on AI and exponential technologies

“I love the sharpness of your insights and perspectives.” — Satya C., a paying member

Good morning from Davos. I got into Switzerland yesterday for a week of meetings and, if past years are any guide, a lot of conversations about AI. As in previous years, I’ll be checking in live most days from the Forum to share what I’m hearing behind the scenes.

And now, let’s get into today’s weekend briefing…

An antidote to forced scarcity

Iran’s regime tried to cement control with a near‑total internet shutdown. Even so, Starlink, smuggled in after sanctions eased in 2022, gave citizens a channel the state couldn’t fully police. The regime has tried to block Starlink by jamming GPS, but as always with anything on the internet, there are workarounds. Starlink created real capacity for coordination, even if a full‑scale revolution didn’t follow.

Something similar happened to energy in Pakistan. In 2024, solar panel imports hit 22 gigawatts, nearly half of the country’s total generation capacity of 46 GW in 2023 (see our analysis here). Consumers opted out of a grid charging 27 rupees per kWh; rooftop solar comes in at roughly one‑third of that.
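For readers who want to check the arithmetic behind those Pakistan figures, here is a minimal sketch. It uses only the numbers quoted above; the variable names are illustrative, and "roughly one-third" is treated as exactly one-third, which is an assumption rather than a reported price.

```python
# Figures quoted in the paragraph above.
solar_imports_gw = 22          # solar panel imports in 2024
grid_capacity_gw = 46          # total generation capacity in 2023
grid_tariff_pkr_per_kwh = 27   # grid price in rupees per kWh

# ASSUMPTION: "roughly one-third" is taken literally as 1/3.
rooftop_fraction_of_grid = 1 / 3

import_share = solar_imports_gw / grid_capacity_gw
rooftop_cost = grid_tariff_pkr_per_kwh * rooftop_fraction_of_grid
saving_per_kwh = grid_tariff_pkr_per_kwh - rooftop_cost

print(f"Imports as a share of capacity: {import_share:.0%}")
print(f"Implied rooftop cost: about {rooftop_cost:.0f} rupees/kWh")
print(f"Implied saving: about {saving_per_kwh:.0f} rupees/kWh")
```

On these inputs, imports come to about 48% of capacity, and the implied rooftop cost is about 9 rupees per kWh, a saving of about 18 rupees on every kWh not bought from the grid.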

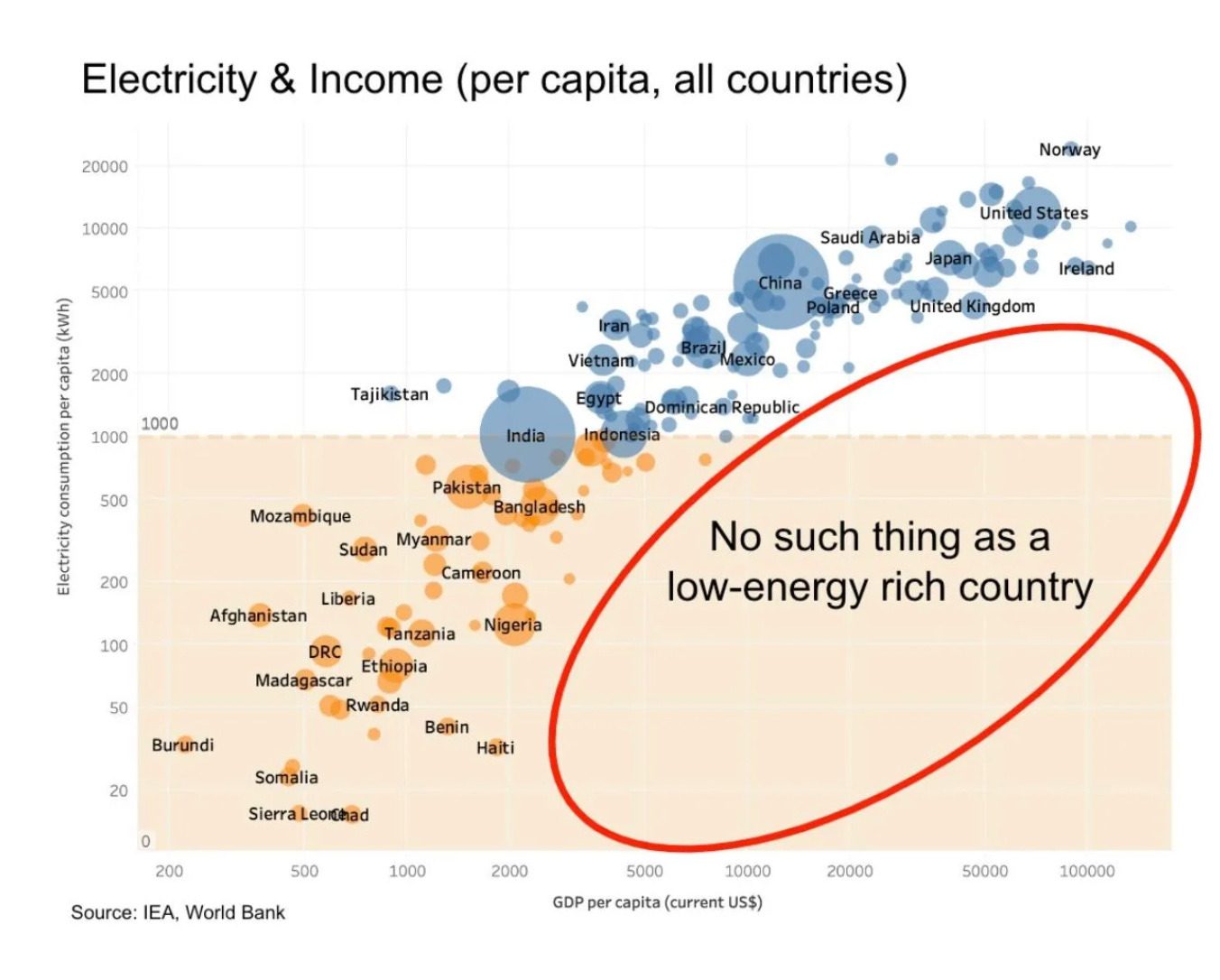

In both cases – authoritarian or merely dysfunctional – centralized infrastructure created demand for decentralized workarounds: solar panels in Lahore and Starlink dishes in Tehran. In corrupt or dysfunctional systems, power usually flows from dependency, not from legitimacy. Control the grid, control information, control money, and compliance follows. When hardware breaks that dependency, only legitimacy remains – and these states have little. Energy, intelligence and coordination underpin growth; two of the three are now slipping beyond centralized control.

Escaping the explore-exploit trap

Researchers who adopted AI, tracked across 41 million publications in a Nature study, published three times more papers and received 4.8 times more citations. The trade-off is a 4.6% contraction in topics studied and a 22% drop in researcher collaboration.

AI pushes work toward data‑rich problems, and some foundational questions, where data is sparse, go unexplored. We face an exploration deficit: AI does well at exploiting what we already know, but it is eroding the incentive to discover what we don’t. Four other autonomous research attempts show that models excel at literature synthesis but fail at proposing experiments that could falsify their own hypotheses or at identifying which variables to manipulate next.

My own experience hits a related wall. I’ve used AI tools extensively for research on my new book; they’re excellent at spotting cross‑field patterns. But safety theatre has hobbled them. When I test plausible tech scenarios with well‑understood trends, models smother answers in caveats for every stakeholder – permitting bodies, developing economies, future generations, and so on. The labs bake this in during post-training through reinforcement learning, optimizing for hedging and cover. That’s the opposite of exploration. So we have tools that synthesise brilliantly, if bureaucratically, but flinch from the leaps that research needs.

As in scientific labs, so in offices, with the possible decline in junior employment¹. Companies chase payroll savings, but are they also cutting off the pipeline to expertise? Anthropic’s latest data suggests that AI succeeds at about 66% of complex tasks; the remaining 34% falls into “O-ring” territory, where one weak link causes the entire system to fail.
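The “O-ring” intuition can be made concrete with a toy multiplicative model: if a job needs every step to succeed, the chance of overall success is the product of the per-step success rates. The sketch below is an illustration only. The function name, the ten-step chain and the reliabilities are hypothetical, they are not drawn from Anthropic’s data, and the steps are assumed to be independent.

```python
from math import prod

def chain_success(step_reliabilities):
    """Probability that every step succeeds, assuming independent steps."""
    return prod(step_reliabilities)

# HYPOTHETICAL per-step reliabilities, chosen only for illustration.
strong_chain = [0.99] * 10            # ten steps, all reliable
one_weak_link = [0.99] * 9 + [0.50]   # same chain with one weak step

print(f"All steps strong: {chain_success(strong_chain):.2f}")
print(f"One weak link:    {chain_success(one_weak_link):.2f}")
```

In this toy setup, a single weak step roughly halves the odds of the whole chain succeeding, which is why bottleneck tasks can matter far more than their share of the work suggests.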

Execution is very cheap right now, as I wrote last week. And the remaining bottleneck tasks will likely be strategic judgment, context integration and error correction. In other words, the more we automate routine work, the higher the premium on precise human expertise. Yet by eliminating the junior roles where that expertise is forged, corporations are dismantling the supply line for the very resource they need most.

I unpack this and other trends with Anthropic’s Peter McCrory (dropping next week on YouTube and podcast platforms).

Feel the AGI yet?

Sequoia Capital, the tony Silicon Valley investor, says that AGI is already here, defining it simply as “the ability to figure things out”. The near‑term future they describe is already showing up in my day‑to‑day (see my essay on The work after work):

“The AI applications of 2026 and 2027 will be doers. They will feel like colleagues. Usage will go from a few times a day to all-day, every day, with multiple instances running in parallel. Users won’t save a few hours here and there – they’ll go from working as an IC to managing a team of agents.”

By that standard, long-horizon agents such as Claude Code would already qualify. The models provide the brain, and the scaffolding – memory, tools and decision-making – lets them act. Together, they can figure out a great deal.

As long-time readers know, I think the term “AGI” is too blurry to be meaningful, let alone carry much practical weight. Unburdened by that term, Sequoia is pointing out something many of us feel. We can expand our cognitive capacity for mere dollars a day. It feels like a watershed.

This week, Cursor used more than 100 agents to build a browser with three million lines of code, parsing HTML, CSS and JavaScript in parallel. It did so for an estimated $10,000 in API calls². It will not replace Chrome, but it also does not require hundreds of millions of dollars.
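To put the Cursor figures in unit terms, here is a quick back-of-the-envelope sketch. It uses only the numbers quoted above. Treating “more than 100 agents” as exactly 100 is an assumption that gives an upper bound on spend per agent, and the variable names are illustrative.

```python
# Figures quoted in the paragraph above.
api_cost_usd = 10_000        # estimated API spend
lines_of_code = 3_000_000    # reported size of the browser codebase

# ASSUMPTION: "more than 100 agents" is treated as exactly 100,
# so the per-agent figure below is an upper bound.
agents = 100

cost_per_line = api_cost_usd / lines_of_code
cost_per_thousand_lines = cost_per_line * 1_000
max_cost_per_agent = api_cost_usd / agents

print(f"Cost per 1,000 lines: ${cost_per_thousand_lines:.2f}")
print(f"Cost per agent (upper bound): ${max_cost_per_agent:.0f}")
```

That works out to about $3.33 per thousand lines of code, and at most $100 per agent.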

For me, the bigger question for 2026 is whether this emerging capability will translate across domains.