🔮 Exponential View #546: Capex is driving the energy transition. AI progress needs ingenuity. Xi's scared of pessimism++

A weekly briefing on AI and exponential technologies

Good morning!

Grid-scale batteries like these are rewriting the logic of the energy system. In California, they already supply more than a quarter of peak summer demand and have cut gas generation by 37% since 2023. They may scale faster because of AI.

In today’s briefing:

How AI is becoming the accidental accelerator of the energy transition,

What replaces scale as the driving force of AI progress,

How China is tightening control over pessimism,

But first: AI boom, bust… or a third way?

Boom or bust. Is there a third way?

Last month, we laid out one of the most rigorous frameworks yet for assessing whether artificial intelligence is in a bubble. It struck a chord because it was measured and thoughtful. (And because it isn’t clickbait, please take a moment to share it.)

This week I was on Derek Thompson’s podcast to discuss the research and the five gauges we’re tracking to know what’s going on. In the course of the conversation, I offered a “third door”, a scenario in which the AI boom turns into a bust… and that’s not necessarily a bad thing:

In a funny way, we might be grateful for it. Of course, there will be stock market prices going down, but what would have happened is that there will be a lot of GPU infrastructure, computing infrastructure that organisations with less money could pick up at fire sale prices. And those assets will go to smaller players who might have newer approaches. They may prefer open-source, they may decide they don’t want to chase after the machine god. They may decide that pricing needs to be more sensible. We might even see faster innovation alongside democratisation.

When the dotcom bubble burst, it didn’t affect the real economy much. The US avoided a deep recession and the economy kept growing. The housing bust, by contrast, really hurt.

If an AI bust happened, it would feel more like the dotcom bust than the housing one. It could be even more benign, because right now alternative approaches to AI are likely being crowded out by the “supermajors.” A bust might widen the breadth of innovation and the nature of deployment in ways that could ultimately feel more beneficial than our current trajectory.

You can listen to our conversation here.

See also:

Meta and Blue Owl are striking what is likely the largest private-capital deal ever in tech: nearly $30 billion in a special purpose vehicle to build a hyperscale data center. Meta would retain just 20% ownership and offload the balance to Blue Owl. I explain what it might mean here.

Rethinking progress & AGI

For much of the 2010s, AI progress followed a rough rule: more compute means bigger models, which means better performance.

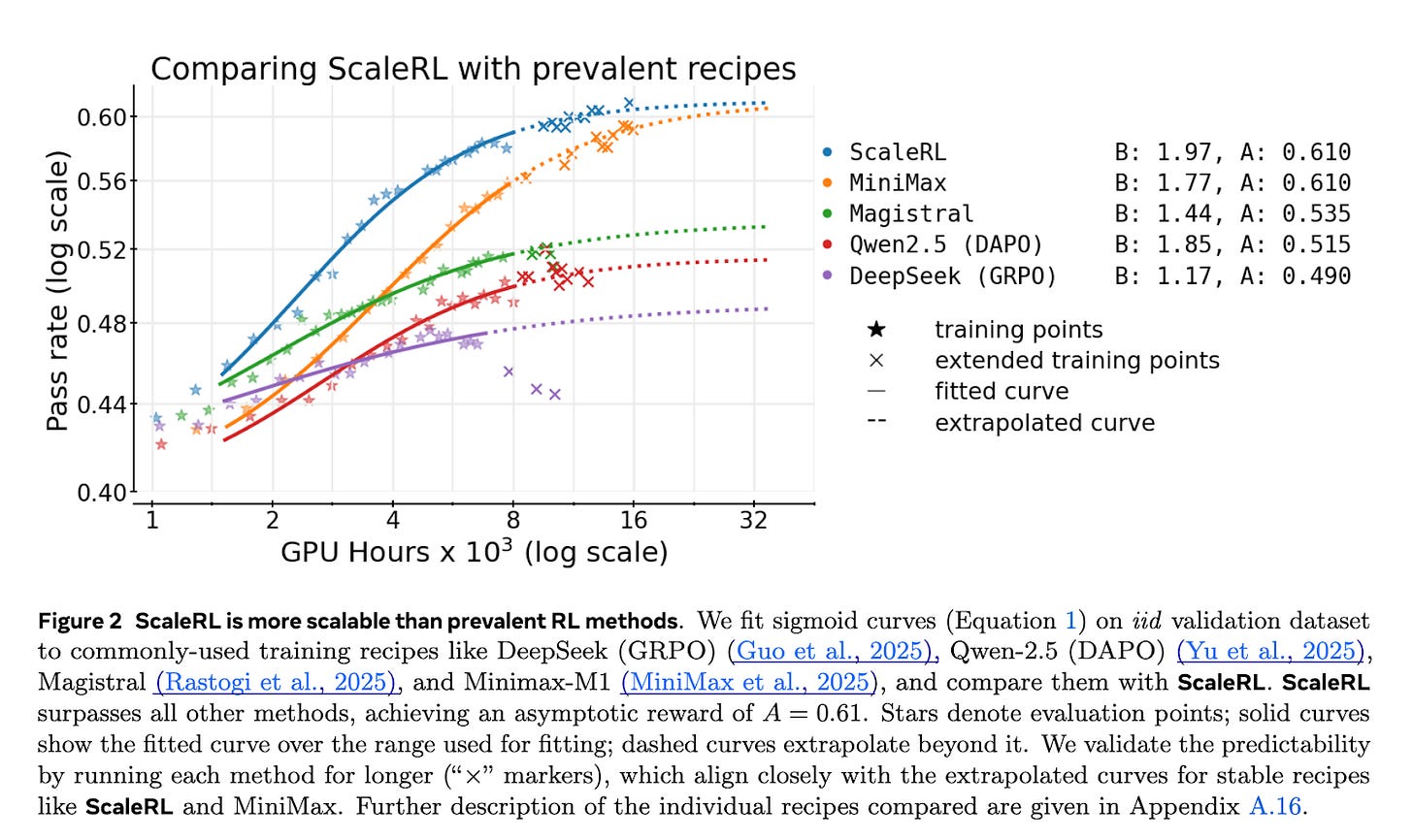

But by late 2024, the frontier labs found that this no longer held as cleanly. Models like GPT-4.5 were met with a lackluster reaction: the increased performance was there, but the model’s size made it more expensive and slower. Scaling, or pre-training scaling, to be precise, seemed to have hit a wall in practical terms. At the same time, another emerging domain, scaling reinforcement learning (RL), was delivering exceptional performance gains. RL here means prompting an LLM to answer, having it judge its own accuracy, and letting it learn from the result. This loop powered the performance leaps of OpenAI’s o1 model and DeepSeek R1. So we arrived at a new paradigm in AI progress, but one question remained: does scaling still apply?

In a new paper this week, researchers find that RL doesn’t follow an open-ended power law like pre-training. Instead, it traces a sigmoidal (S-shaped) curve.
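To make the difference between the two shapes concrete, here is a minimal sketch in Python. The functional forms and every constant in it (`a`, `b`, `ceiling`, `midpoint`, `steepness`) are illustrative assumptions chosen for readability, not the paper’s fitted equations or values.

```python
def power_law_loss(compute, a=10.0, b=0.1):
    """Illustrative power law: loss = a * compute^(-b).

    Each 10x increase in compute cuts loss by the same factor,
    no matter how much compute has already been spent.
    """
    return a * compute ** (-b)


def sigmoid_score(compute, ceiling=0.8, midpoint=1e3, steepness=1.0):
    """Illustrative S-curve: score rises with compute, then flattens.

    The score reaches half of `ceiling` at `midpoint` and approaches
    `ceiling` beyond it, so late compute buys very little.
    """
    return ceiling / (1.0 + (midpoint / compute) ** steepness)


# Compare the two curves across several orders of magnitude of compute.
for exponent in range(0, 7):
    compute = 10.0 ** exponent
    print(
        f"compute=1e{exponent}  "
        f"power-law loss={power_law_loss(compute):.3f}  "
        f"sigmoid score={sigmoid_score(compute):.3f}"
    )
```

In this toy setup the power law keeps paying out the same proportional improvement for every tenfold increase in compute, while the S-curve delivers most of its gain around the midpoint and then saturates near its ceiling. That saturation is why the choice of method, which sets how high the ceiling is and how quickly it is reached, matters more than the size of the budget.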

The main bottleneck in AI has moved from raw computational power to the “method”: how we train and adapt models. As a result, progress has become harder to predict from the calendar or the budget alone and more dependent on conceptual breakthroughs. Now is the time for ingenuity, for recalibration.

We’ve known for a while that certain domains remain stubbornly hard for AI. A group of (serious) AI researchers this week formalized this into a new definition of AGI: an AI that can match or exceed the cognitive versatility and proficiency of a well-educated adult.

I love the ambition. I love the practicality – it provides a diagnostic frame to help research roadmaps. And they resist the urge to turn AI into purely an economic endeavour. But there are two drawbacks to address.