🔮 The Sunday edition #513: GPT-4.5; transition or edition; physical limits of AI; 3D-printed fish, superbabies & data on the moon ++

An insider’s guide to AI and exponential technologies

Hi, it’s Azeem.

This week, GPT-4.5 arrives with an identity crisis – it shows an 18-point improvement on graduate-level benchmarks but at 20 times the price of GPT-4o. As models grow hungrier for resources, innovation takes unexpected forms. Lonestar is sending a tiny data center to the moon, using the lunar surface’s -173°C environment for free cooling. Meanwhile, Andrej Karpathy reframes our understanding of AI itself, equating LLMs to CPUs and tokens to bytes. Let’s dig in!

Lifespan technologies

Four years ago, Steve Hsu argued that machine learning, combined with rapidly declining genome sequencing costs, would revolutionise how we approach genetic modification. The genetic technologies we have today – which are improving all the time – could extend lifespan, strengthen immune systems and boost IQ by four to five points per generation through embryo selection and direct gene editing. Writing on LessWrong, Gene Smith, founder of a gene-editing startup, lays out the research case for large-scale, heritable editing of the human germline to improve health outcomes and lifespan. The post is worth reading in full; here’s an extract:

[T]he effect we can have on [common disease variants] with editing is incredible. Type 1 diabetes, inflammatory bowel disease, psoriasis, Alzheimer’s, Parkinson’s, and multiple sclerosis can all be virtually eliminated with less than a dozen changes to the genome.

[...]

But what if we could go after 5 diseases at once? Or ten? What if we stopped thinking about diseases as distinct categories and instead asked ourselves how to directly create a long, healthy life expectancy? In that case we could completely rethink how we analyze the genetics of health. We could directly measure life expectancy and edit variants that increase it the most.

Mainstream science remains hesitant to engage deeply with genetic modification for fear of theoretical ethical repercussions. But these developments will happen with or without the academic community, for better or for worse, so it’s important that we have more open-minded conversations about all of this. If we have the potential to improve future generations' wellbeing through genetic means, should we?

GPT-4.5’s identity crisis

Grok 3’s release last week showed that there’s still room for scaling AI models. GPT-4.5, released this week, supports this view, but there’s a catch. Many in the industry are underwhelmed despite significant gains – about an 18-percentage-point improvement over GPT-4o on graduate-level benchmarks.

These gains come with a staggering 20-fold price increase over GPT-4o. When calculating ROI, are these improvements worth such a steep premium?
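One way to frame the ROI question is cost per correct answer rather than price per token. The sketch below is purely illustrative. It keeps the 20x price ratio and the 18-point gain cited above. The 50% baseline accuracy and the unit price are hypothetical placeholders, not measured figures for either model. It also assumes you can retry a task until one attempt succeeds, with each attempt independent.

```python
# Illustrative only: BASE_PRICE and BASE_ACCURACY are hypothetical,
# not published figures for GPT-4o or GPT-4.5.

def cost_per_correct(price_per_task: float, accuracy: float) -> float:
    """Expected spend to get one correct answer, assuming independent retries
    until success (expected attempts = 1 / accuracy)."""
    if not 0 < accuracy <= 1:
        raise ValueError("accuracy must be in (0, 1]")
    return price_per_task / accuracy

BASE_PRICE = 1.0        # hypothetical cost per task for the cheaper model
BASE_ACCURACY = 0.50    # hypothetical baseline accuracy
PRICE_MULTIPLIER = 20   # price ratio cited in the text
ACCURACY_GAIN = 0.18    # 18-percentage-point gain cited in the text

old = cost_per_correct(BASE_PRICE, BASE_ACCURACY)
new = cost_per_correct(BASE_PRICE * PRICE_MULTIPLIER, BASE_ACCURACY + ACCURACY_GAIN)

print(f"cheaper model: {old:.2f} per correct answer")
print(f"pricier model: {new:.2f} per correct answer")
print(f"ratio: {new / old:.1f}x")
```

Under these made-up numbers, the pricier model costs about 14.7 times more per correct answer. The accuracy gain claws back only part of the 20x price gap. A real assessment would need measured accuracies and token counts for the specific workload.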

No one is quite sure what GPT-4.5 is best used for in practical applications. Meanwhile, Anthropic’s Claude 3.7 Sonnet, also released this week, is developing a clear identity. By targeting software engineering specifically, Sonnet outperformed competitors on SWE-bench by a decisive 20-percentage-point margin. Claude is becoming the go-to model for coding tasks.

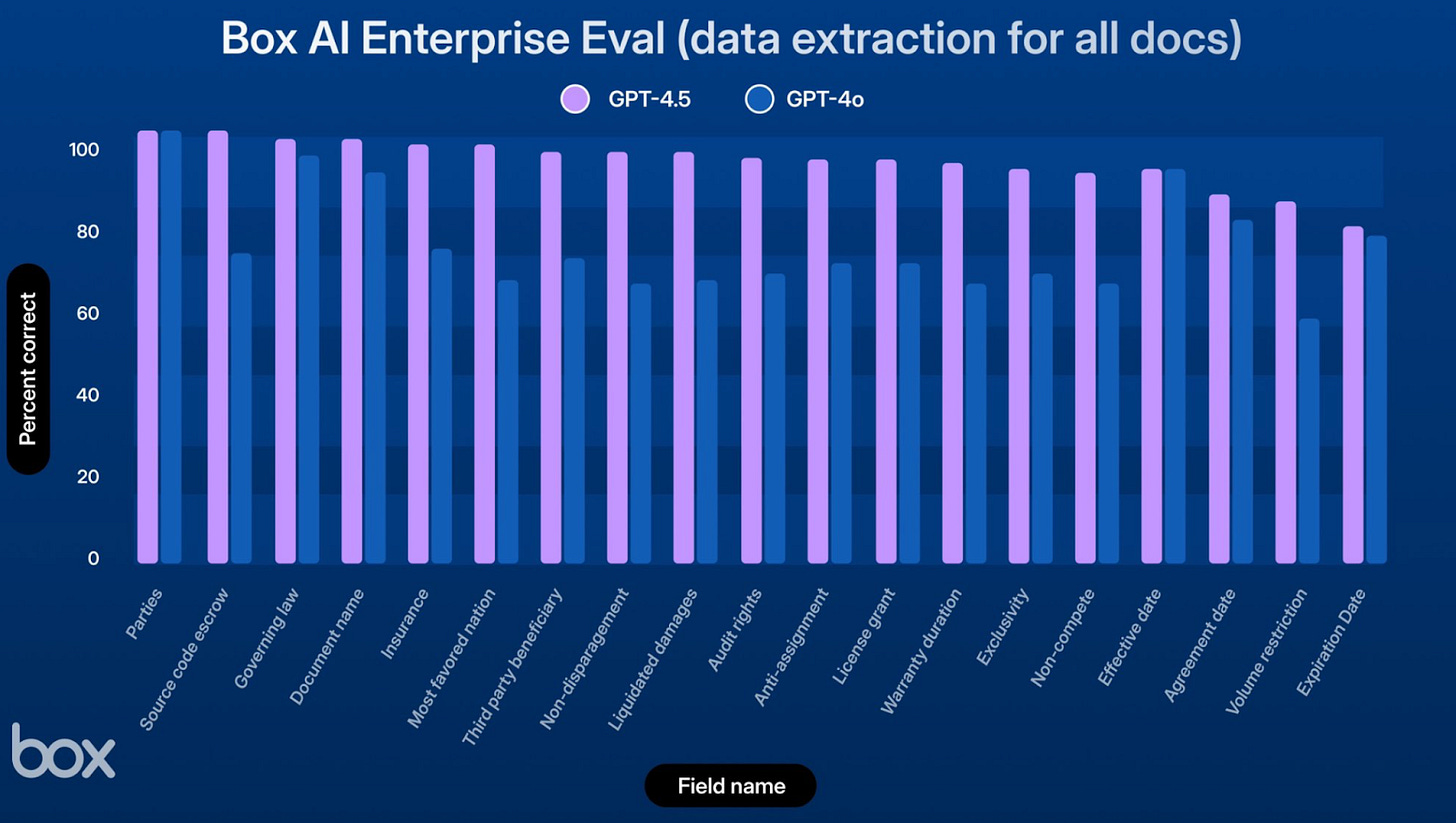

GPT-4.5 excels at something more intangible and subjective. It hallucinates less and has a better “intuition”. Andrej Karpathy highlights that it particularly shines in tasks that are difficult to measure on benchmarks, such as creativity, emotional intelligence, humor and deep world understanding. Examples of this translating into business value are still scarce, but I did come across Box CEO Aaron Levie noting a “19-point improvement” in accurately extracting data fields from enterprise content.

Are we entering a new phase where AI models operate on three different product trajectories? The slowest clock speed is the build-out of massive generalized large models (like GPT-4.5, GPT-5 or Claude 4). These may show up every two to three years. The second will be models based on “inference time compute”, the reasoners like o1 and R1. Their capabilities should improve as the underlying base models improve, and their release cadence can be faster. And finally, we’ll see a rich ecology of fine-tuned models for vertical applications which, within their niche, will be far ahead of the general-purpose state of the art.

See also:

Amazon announced that Claude will power its next-generation Alexa+ assistant – finally, the Alexa we have been waiting years for.

Anthropic also released Claude Code, a terminal-based coding agent that integrates directly into developer workflows.

Inception Labs launched the first commercial-grade diffusion LLM.

Solving the physical limits

Jensen Huang has suggested that reasoning models demand 100 times more compute than traditional ones, with future needs potentially millions of times higher.

We can always ask these models to think harder and longer about a problem, so such ratios aren’t entirely the stuff of dreams. Back in 2015, when I was talking to chip startups, they pointed me to the notion of million-fold increases in compute demand. They weren’t wrong.
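Why “thinking longer” multiplies compute comes down to simple arithmetic. A common rule of thumb puts a transformer’s forward pass at roughly 2 × N floating-point operations per generated token, where N is the parameter count. The sketch below applies that approximation. The 70-billion-parameter model, the token counts and the 16 parallel samples are hypothetical, chosen only to show how the multipliers compound. This is not how Jensen Huang arrived at his figure.

```python
# Rough inference-compute estimate using the common ~2 * N FLOPs-per-token
# approximation. It ignores attention costs that grow with context length,
# so it understates the cost of very long reasoning traces.

def inference_flops(params: float, tokens_generated: int, samples: int = 1) -> float:
    """Approximate forward-pass FLOPs to generate `tokens_generated` tokens,
    repeated for `samples` independent completions."""
    return 2.0 * params * tokens_generated * samples

PARAMS = 70e9  # hypothetical 70B-parameter model

direct_answer = inference_flops(PARAMS, 500)        # hypothetical short reply
reasoning_trace = inference_flops(PARAMS, 50_000)   # hypothetical long "thinking" trace
many_traces = inference_flops(PARAMS, 50_000, samples=16)  # hypothetical: 16 traces, e.g. for voting

print(f"direct answer:   {direct_answer:.2e} FLOPs")
print(f"reasoning trace: {reasoning_trace:.2e} FLOPs ({reasoning_trace / direct_answer:.0f}x)")
print(f"16 traces:       {many_traces:.2e} FLOPs ({many_traces / direct_answer:.0f}x)")
```

A hundredfold longer trace gives a hundredfold more compute. Sampling several traces multiplies that again, which is how ratios can climb toward the orders of magnitude Huang describes.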