🔮 Gemini; silver spooners; greedflation; anthrobots, raves & whales++ #452

An insider's guide to AI and other exponential technologies

Hi, it’s

here with our regular Sunday edition. In today’s edition:

EU AI Act: see my End Note

Gemini, the new SOTA model. Or is it? 🤨

How to go about cultivating social trust in AI? 🏛️

The NVIDIA advantage keeps on giving 🍿

And more…

But first, our latest posts:

If you’re not a subscriber, here’s what you missed recently:

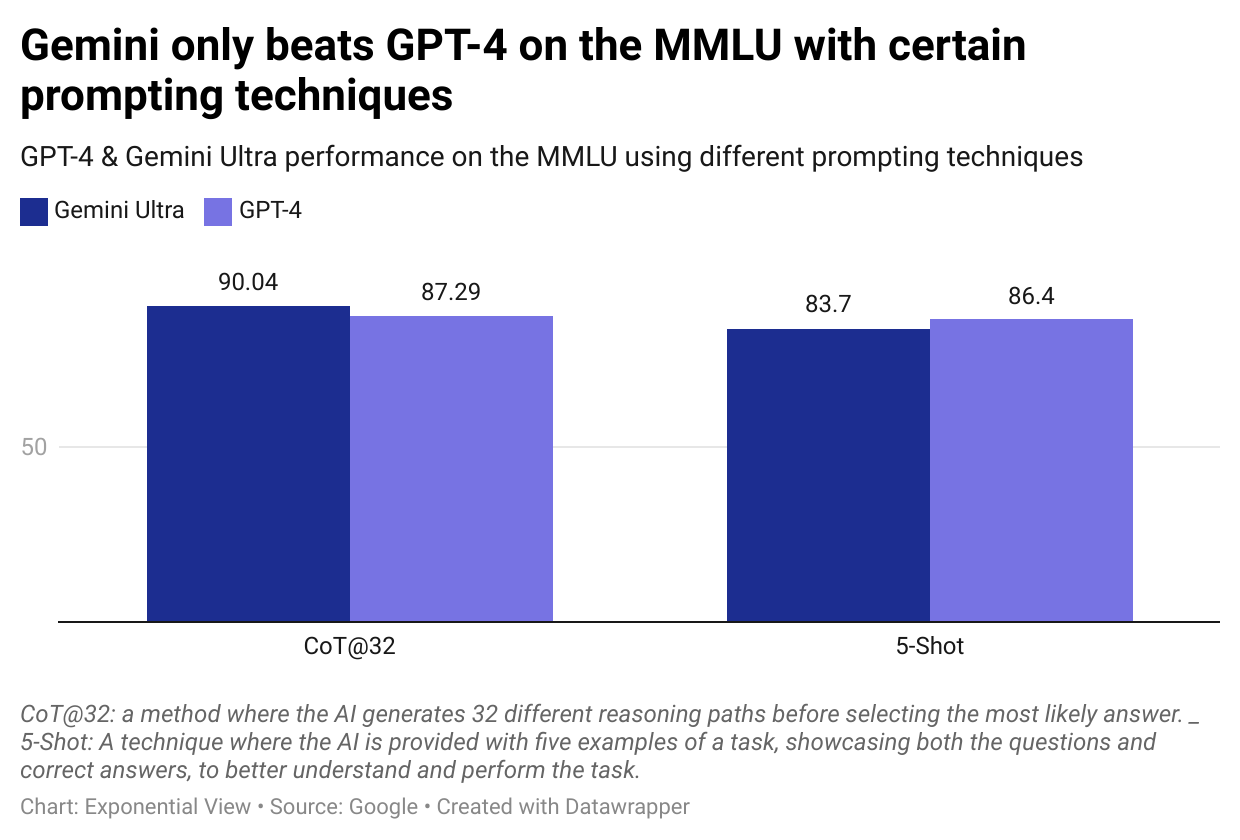

Sunday chart: Gemini, the new SOTA model. Or is it?

The release of ChatGPT fired a starting gun no one expected. As the NYT reports, when the Big Tech firms saw the reception ChatGPT received, they immediately pivoted to developing their own AI products, with minimal care for risks. Meta open-sourced Llama 2, Microsoft added GPT to its products and Google rush-released Bard. It seems like a blind sprint in a race no one fully understood.

This week, the next leg was revealed with the announcement of Google’s Gemini models. They are multimodal from the ground up, meaning they can reason and understand across modalities such as text, images, audio and video. For an impressive example, see how Gemini reads, understands and filters 200,000 scientific papers over a lunch break. The largest of the models, Gemini Ultra, looks to be the best model on the market, finally beating GPT-4. It achieved state-of-the-art (SOTA) results on 30 of the 32 most commonly used research benchmarks, and is the first model to outperform human experts on the well-known MMLU benchmark.

But this doesn’t tell the full story. If anything reveals how intense the AI race has become, it is the marketing tricks Google used to make its model look better than it is.

Firstly, the MMLU SOTA¹ score lacks nuance. Gemini Ultra beats GPT-4 using Chain-of-Thought@32 (CoT@32) prompting, a method in which the AI generates 32 different reasoning paths for a single question, considering various angles and possibilities, before choosing the most consistent or convincing answer. While this can lead to more nuanced and considered responses, it is more complex and less commonly used for quick, everyday queries, where users prioritise immediate and concise answers. With the 5-shot method, on the other hand, GPT-4 beats Gemini Ultra. This method presents the AI with five examples of a task, complete with questions and correct answers, to help it understand what is expected before posing a new, similar question. It is likely closer to how users naturally give context to guide the AI towards the kind of response they want.

This highlights some of the limitations of benchmarks, which we have previously covered in Chartpack: (Mis)measuring AI. Because LLMs have such wide-ranging use cases, benchmarks often represent their performance poorly, abstracting away from the actual qualia of using these models. We will have to wait for Gemini Ultra’s release to judge it properly.
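To make the difference between the two evaluation set-ups concrete, here is a minimal Python sketch. `sample_answer` and `toy_sampler` are hypothetical stand-ins for a model call, and the voting step is plain self-consistency majority voting; Google’s exact CoT@32 procedure may add extra logic, so treat this as an illustration of the idea rather than its method.

```python
from collections import Counter
from typing import Callable, List, Tuple

def build_few_shot_prompt(examples: List[Tuple[str, str]], question: str) -> str:
    """5-shot style: show worked question/answer pairs, then pose the new question."""
    blocks = [f"Question: {q}\nAnswer: {a}" for q, a in examples]
    blocks.append(f"Question: {question}\nAnswer:")
    return "\n\n".join(blocks)

def majority_vote(sample_answer: Callable[[str, int], str], question: str, k: int = 32) -> str:
    """CoT@k style: sample k reasoning paths and keep the most common final answer."""
    answers = [sample_answer(question, seed) for seed in range(k)]
    return Counter(answers).most_common(1)[0][0]

def toy_sampler(question: str, seed: int) -> str:
    # Hypothetical stand-in for a model: returns only the final answer of one sampled path,
    # and every fourth sampled path lands on a wrong answer.
    return "C" if seed % 4 == 0 else "B"

examples = [(f"Example {i}?", f"Answer {i}") for i in range(5)]
prompt = build_few_shot_prompt(examples, "Which option is correct?")
print(prompt.count("Question:"))  # 6: five worked examples plus the new question
print(majority_vote(toy_sampler, "Which option is correct?"))  # B
```

The cost difference is visible in the structure: the 5-shot prompt needs one model call per question, while CoT@32 needs 32 sampled calls, which is part of why it is rarely used for everyday queries.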

Their second marketing trick was the announcement video, a demo that made Gemini look like a miraculous real-time assistant. It turns out, however, that the model’s responses were sped up, the interaction did not happen in real time, and some complex prompting was done on the backend. I can’t help but think this is a sign that Google feels threatened and needs to project an appearance better than the reality.

This contrasts with the release of ChatGPT, which OpenAI billed as a “low-key research preview”. In fairness, the game has changed since then. One thing is certain: with competition hotting up, no tech firm can afford to sit still, whether or not we see models much better than Gemini Ultra or GPT-4 in the next few months.

See also my commentary from earlier this year:

💥 Google and the disruptive innovator

Google, the firm that has done more than any other in “organising the world’s information and making it universally available and accessible for all” might face the classic “innovator’s dilemma”.

Key reads

The new economic elite. This year, for the first time since UBS, a bank, started reporting on it nine years ago, new billionaires accumulated more wealth through inheritance than through entrepreneurship. UBS expects this to become a trend over the next 20 years, with 1,000 billionaires passing an estimated $5.2 trillion to their children. The report has the usual claptrap about continuing family legacies, but what it is really about is how elites continue to accumulate wealth.

We are in an economic period in which market power is concentrating in the hands of a few. Tech companies have already used network effects to extract rents (e.g. Apple’s cut of up to 30% on App Store sales), and they are looking to do the same in AI. Max von Thun, a director at the Open Markets Institute, an anti-monopoly think-tank, also warns about this. He argues that laws pay too much attention to how AI is misused and not enough to the market’s monopoly-like structure. We must proactively intervene to prevent this new monster from forming, and introduce reforms to dismantle the new plutocracy.

See also:

Market power & greedflation. Since 2019, during a period of high inflation, total profits across publicly listed companies in the UK, the US and Germany have increased by between 32% and 44%. In the UK, 90% of this profit increase was due to only 11% of publicly listed firms.

Can we trust AI? Bruce Schneier’s essay on trust examines the distinction between interpersonal trust (based on personal connections and moral judgments) and social trust (relying on system reliability and predictability) in relation to AI. He argues that as AI systems increasingly mimic human interactions, there’s a risk of misplacing interpersonal trust in these systems, which are controlled by organisations with specific agendas. The clear solution is to make sure no single organisation controls AI systems. The new open-source AI Alliance is a step in the right direction, helping cultivate social trust in AI.

See also:

For an excellent example of interpersonal trust, and how to lose it, check out former OpenAI board member Helen Toner’s interview with the WSJ, discussing the circumstances of Sam Altman’s firing.

Future gains? You can scale large language models in three ways: by adding more parameters, using more training data, or extending training time. One way to get more training data is to use synthetic data. For a breakdown of how this works, check out

post on the topic. Synthetic data enables the creation of diverse datasets essential for training AI models, particularly where real-world data is scarce or sensitive. (One impressive example I recently saw of synthetic data used successfully is Wayve, the autonomous driving startup.)

Increasing training time means increasing the amount of compute. This week, AMD released the MI300X, a competitor to NVIDIA’s H100. For a detailed breakdown of its specs, see

’s report. Given that no one can afford to sit still, one thing is certain: as Pierre Ferragu of New Street Research argues, “demand for compute is not abating anytime soon”.

See also:

Some advice from Ryan Roslansky, CEO of LinkedIn, on talent management in the age of AI.

Animate Anyone, an image-to-video synthesis method for character animation. AI has finally come to automate TikTok dancers.

Apple released an AI framework for building foundation models.

UAE’s G42, which recently partnered with OpenAI, plans to stop using Chinese hardware to ease US fears of tech leaks.
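Returning to the three scaling levers in Future gains above: parameters, training data and training time all feed into the compute bill. A common rule of thumb from the scaling-laws literature puts training compute at roughly 6 floating-point operations per parameter per training token, C ≈ 6·N·D. The sketch below applies it; the model size, token count, GPU count, per-GPU throughput and utilisation are all illustrative assumptions, not figures for any real model or chip.

```python
SECONDS_PER_DAY = 86_400

def training_flops(params: float, tokens: float) -> float:
    """Rule-of-thumb training compute: about 6 FLOPs per parameter per token."""
    return 6 * params * tokens

def training_days(flops: float, gpus: int, peak_flops_per_gpu: float, utilisation: float) -> float:
    """Wall-clock days on a cluster running at a given fraction of peak throughput."""
    sustained = gpus * peak_flops_per_gpu * utilisation  # FLOP/s actually achieved
    return flops / sustained / SECONDS_PER_DAY

# Hypothetical model: 70 billion parameters trained on 1.4 trillion tokens.
base = training_flops(70e9, 1.4e12)
print(f"{base:.2e} FLOPs")  # 5.88e+23 FLOPs

# Doubling the training data doubles the compute.
print(training_flops(70e9, 2.8e12) / base)  # 2.0

# Assumed cluster: 1,000 GPUs at 1e15 FLOP/s peak each, running at 40% utilisation.
print(f"{training_days(base, 1_000, 1e15, 0.4):.1f} days")  # 17.0 days
```

Under this approximation, compute grows linearly with both model size and training tokens, so pushing on either lever translates directly into more demand for accelerators.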

Market data

Meta and Microsoft are estimated to be the largest purchasers of NVIDIA’s H100 GPUs, buying 150k each, followed by Google, Amazon, Oracle and Tencent who purchased 50k each. For a primer on the NVIDIA advantage, see our Chartpack.

IBM launched a groundbreaking 1,121-qubit quantum chip, beating its own record of 433 qubits.

3% of the world’s cars are now electric. This is great! The EV fleet has grown fourfold since 2020, and it’s set to grow even faster.

A study showed people would pay $28 and $10, respectively, to have themselves and others quit TikTok and Instagram. But they feel stuck because their friends are still using these apps: they’d need to receive $59 and $47, respectively, to leave TikTok and Instagram if their friends kept using them. Maybe there’s something to Al Gore’s comments: “These algorithms are the digital equivalent of AR-15s, they ought to be banned.”

Generating a single image with AI can use as much energy as charging your phone.
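The EV figure in Market data implies steep compound growth. As a rough check, the sketch below converts “fourfold since 2020” into an annual rate; it assumes a three-year window (2020 to 2023), which is our assumption rather than a figure from the underlying data.

```python
def implied_annual_growth(multiple: float, years: float) -> float:
    """Compound annual growth rate implied by growing `multiple`-fold over `years` years."""
    return multiple ** (1 / years) - 1

# Assumed window: fourfold growth over three years (2020 to 2023).
rate = implied_annual_growth(4, 3)
print(f"{rate:.1%}")  # 58.7%
```

On that assumption, the fleet grew by almost 60% a year on average.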

Short morsels to appear smart at dinner parties

☄️ Japan inaugurated the world’s biggest experimental fusion reactor.

🏭 Are industrial processes the winning application for the metaverse?

🤖 Anthrobots: tiny human-cell robots that can mend neuronal damage.

🧬 All I want for Christmas is… A $1,000 DNA biomemory card.

🏺 The world depends on a programming language from 1959 that hardly anyone knows anymore. (AI can help.)

💥 The forgotten history of raves.

🎓 Pandemic-era grade inflation persists across Ivy League universities.

🐋 Whale linguistics: we have found the equivalent of human vowels.

End note

The EU reached agreement on its AI Act at the end of the week, its mandarins celebrating this landmark with what can only be described as glee. The act provides some protections for citizens, especially from facial recognition, but the real question is whether its approach to the kind of large AI models that have burst onto the scene is appropriate. In my view, the act’s carve-outs for open source are healthy. Concentration of power remains a substantial risk from AI, and open source is an antidote. Getting the deal done this week reduces the chance of Big Tech tilting it in its favour, as it did with GDPR. That said, I rather doubt Thierry Breton’s assertion that the Act will be a launchpad for EU startups.

We also can’t avoid the reality that the technology is moving very rapidly. It is becoming increasingly clear that GPT-3.5-capable models are getting smaller and smaller: think laptop size this year and phone size next. These are plenty good enough to deliver real applications (both good and bad ones). Techniques like 2-bit and 4-bit quantization, which shrink models and let them run on cheaper hardware, are proving effective. New architectures such as state-space models may perform better than transformers, which underpin today’s LLMs, especially on longer documents. French AI firm Mistral released a new open-source model that uses a Mixture of Experts architecture (reputedly the technique used in GPT-4), distributing it via BitTorrent. Anyone can access and modify this model with reasonable-ish hardware.
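To see why 2-bit and 4-bit quantization matter for running models on laptops and phones, here is a minimal sketch. It computes the memory needed just to hold the weights at different precisions, then applies simple symmetric absmax quantization to a handful of weights. The 7-billion-parameter model size and the sample weights are hypothetical, and the per-tensor scheme is a simplification: production methods typically use per-group scales and calibration data, so this is not how any particular library does it.

```python
from typing import List, Tuple

def weight_memory_gb(params: float, bits: int) -> float:
    """Gigabytes needed to store the weights alone (ignores activations and scale overhead)."""
    return params * bits / 8 / 1e9

def quantize_absmax(weights: List[float], bits: int = 4) -> Tuple[List[int], float]:
    """Symmetric absmax quantization to signed integers in [-qmax, qmax]."""
    qmax = 2 ** (bits - 1) - 1  # 7 for 4-bit, 1 for 2-bit
    largest = max(abs(w) for w in weights)
    if largest == 0:
        return [0] * len(weights), 1.0  # all-zero tensor: any scale works
    scale = largest / qmax
    return [round(w / scale) for w in weights], scale

def dequantize(q: List[int], scale: float) -> List[float]:
    """Map the stored integers back to approximate float weights."""
    return [v * scale for v in q]

# Hypothetical 7-billion-parameter model at different precisions.
for bits in (16, 4, 2):
    print(bits, "bits:", weight_memory_gb(7e9, bits), "GB")
# 16 bits: 14.0 GB / 4 bits: 3.5 GB / 2 bits: 1.75 GB

weights = [0.12, -0.5, 0.33, 0.07, -0.21]
q, scale = quantize_absmax(weights, bits=4)
print(q)  # [2, -7, 5, 1, -3]
restored = dequantize(q, scale)
# Each restored weight is within half a quantization step of the original.
print(max(abs(a - b) for a, b in zip(restored, weights)) <= scale / 2)  # True
```

Going from 16-bit to 4-bit cuts weight memory fourfold at the cost of a small, bounded rounding error per weight; at 2 bits each weight collapses to one of three values, so preserving accuracy becomes much harder.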

Finding the balance between innovation and regulation that supports social outcomes (which may include requiring more innovation) is going to be tough. Will implementation and enforcement allow for the continuous review and adaptation this environment calls for?

Happy to discuss in the comments below,

Azeem

What you’re up to — community updates

Marko Balabanovic is looking for a Head of Regulatory Affairs at Our Future of Health in London.

- writes about the potential impact of the influx of GenAI content on media and culture.

Luna Lacey, Chanuki Seresinhe and Robbie Stamp are co-hosting the next installment of the embodiment series focusing on what it means to be human in the dataspace on 10 January.

Gustav Stromfelt is hosting a series of meetups exploring the food system of the future.

- shares her notes of my fireside chat this week with Faruk Eczacıbaşı.

- writes about the ‘antidebate’ and the conversational nature of reality.

- ’s Democracy Next launched a new Tech-Enhanced Citizens’ Assemblies Pop-up Lab with the MIT Center for Constructive Communication

Share your updates with EV readers by telling us what you’re up to here.

Benchmarks

Bruce Schneier’s article “AI and Trust” is a must-read. It includes the observation that the EU made a critical mistake in trying to “regulate the AIs and not the humans behind them.” I gave a very similar message to a recent UK All Party Parliamentary Group for Cyber Security.

In the discussion about speed, safety, etc. this is probably the most useful sentence I've seen:

"We must proactively intervene to prevent this new monster from forming, and introduce reforms to dismantle the new plutocracy."