📈 Will AI spending come down to earth?

Preparing for take-off

Is it rational expectations or irrational exuberance? There is an AI boom — and as AI is famished for compute, chipmakers — the providers of that compute — are having a heyday.

Nvidia’s share price is up 200-fold in a decade. Sales in its latest quarter were up 265% year on year. Lisa Su, the boss of rival AMD, reckons the total addressable market for AI chips is $400bn. Even ARM, which has virtually no presence in the AI chip market, saw its share price double this year. This explosive growth inevitably draws comparisons to the dotcom bubble.

But let’s work our way back. What would need to be true for these valuations, and the market’s expectations of future cashflows, to be met? After all, at some point, reality bites.

Building the flight path

Nvidia is valued at roughly $2 trillion on $60bn of revenue and $35-ish billion of annual profits last year, although the last quarter showed 265% year-on-year revenue growth! That gives it a spicy price-to-earnings ratio of 66 or so.1 That is substantially above an already inflated 10-year average of 49. The S&P 500’s PE ratio has been below 20 for most of the past 100 years.
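The valuation arithmetic above can be sketched in a few lines. This is a rough illustration using only the rounded figures quoted in the text; the variable names are mine. The rounded numbers give a ratio somewhat below the quoted 66, which suggests the quoted ratio rests on a lower or differently measured profit figure (that reading is an assumption, not something the sources here confirm).

```python
# Rounded figures quoted in the text, in billions of US dollars.
market_cap_bn = 2_000.0      # "roughly $2 trillion"
annual_profit_bn = 35.0      # "$35-ish billion" (rounded in the text)
quoted_pe = 66.0             # the ratio quoted in the text
ten_year_avg_pe = 49.0       # Nvidia's 10-year average, per the text


def price_to_earnings(market_cap: float, earnings: float) -> float:
    """Price-to-earnings ratio: market value divided by annual profit."""
    return market_cap / earnings


# P/E implied by the rounded figures.
pe_from_round_numbers = price_to_earnings(market_cap_bn, annual_profit_bn)

# Annual profit that would be consistent with the quoted P/E.
implied_profit_bn = market_cap_bn / quoted_pe

# How far the quoted P/E sits above the 10-year average.
premium_over_average = quoted_pe / ten_year_avg_pe - 1

print(f"P/E from rounded figures: {pe_from_round_numbers:.0f}")
print(f"Profit implied by a P/E of {quoted_pe:.0f}: ${implied_profit_bn:.0f}bn")
print(f"Premium over 10-year average: {premium_over_average:.0%}")
```

Either way, the ratio sits well above both Nvidia’s own history and the S&P 500’s long-run level, which is the point the comparison is making.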

One way to understand this is to start from Lisa Su’s reckoning that the market for AI chips will be $400bn by 2027. Pierre Ferragu at New Street Research looked at this question (see here for my podcast with him). He estimates that in 2023 the AI chip market was $45bn, implying a roughly 70% annual growth rate to hit Su’s $400bn TAM.
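The implied growth rate is a compound annual growth rate (CAGR) calculation. A minimal sketch, assuming the $45bn figure is for full-year 2023 and the $400bn figure for full-year 2027, so four years of compounding; the function and variable names are illustrative.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate needed to go from start to end."""
    return (end / start) ** (1 / years) - 1


chip_market_2023_bn = 45.0    # Ferragu's estimate, per the text
chip_market_2027_bn = 400.0   # Su's TAM, per the text
years = 2027 - 2023           # assumption: four full years of compounding

growth = cagr(chip_market_2023_bn, chip_market_2027_bn, years)
print(f"Required annual growth: {growth:.0%}")

# Year-by-year path the market would have to follow at that rate.
for offset in range(years + 1):
    size_bn = chip_market_2023_bn * (1 + growth) ** offset
    print(f"{2023 + offset}: ${size_bn:,.0f}bn")
```

Under these assumptions the required rate comes out a little above 70% a year, sustained for four years running.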

Of course, AI chips are just the tip of the iceberg. An AI server has other gubbins, like memory, network cards, storage, power supplies, and the physical units that contain them. These servers need to be interconnected with each other using demanding high-bandwidth networking. And the whole lot needs to be wrapped up in a data centre with its power and cooling needs. That additional infrastructure adds another $400bn to the bill. So Su’s forecast implies $800bn in AI infrastructure in 2027.

Who will pay for it? How do we get to $800bn of additional revenues?2 Ferragu reckons it is possible, based on three potential line items:

An acceleration in online ad growth because of generative tools. Ferragu posits a potential increase of $500bn by 2027. I dug a bit deeper myself on this: imagine, for example, using generative AI to create more ad copy against which optimisations can be run to drive performance. Google already does something like this with its Performance Max product. Online advertising raked in $600bn in 2023 and was growing at roughly 11% per year. If generative AI lifted that growth rate by some 10 percentage points, it would add an incremental $400bn or so in revenues by 2027.

New medical treatments powered by generative AI. Generative AI promises to accelerate drug discovery, speeding up research and approval timelines, accounting for $100-200bn in revenues.

New AI agents. These are wide-ranging use cases from using co-pilots to code to having a ChatGPT premium subscription — together also accounting for $100-200bn in revenues.
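To sanity-check these three line items against the $800bn infrastructure figure, here is a rough sketch. It assumes the ad uplift applies in each of the four years from 2024 to 2027 on the 2023 base, and it treats the quoted ranges as simple low and high bounds; all names are illustrative.

```python
def project(base: float, rate: float, years: int) -> float:
    """Compound a starting value forward at a constant annual rate."""
    return base * (1 + rate) ** years


# Online advertising, figures from the text (billions of US dollars).
ad_revenue_2023_bn = 600.0
baseline_growth = 0.11        # "roughly 11% per year"
uplift = 0.10                 # extra 10 percentage points from generative AI
years = 2027 - 2023           # assumption: uplift applies in all four years

baseline_2027_bn = project(ad_revenue_2023_bn, baseline_growth, years)
boosted_2027_bn = project(ad_revenue_2023_bn, baseline_growth + uplift, years)
incremental_ads_bn = boosted_2027_bn - baseline_2027_bn
print(f"Incremental ad revenue in 2027: ${incremental_ads_bn:,.0f}bn")

# Ferragu's three line items as (low, high) bounds, per the text.
line_items_bn = {
    "online ads": (500.0, 500.0),
    "medical treatments": (100.0, 200.0),
    "AI agents": (100.0, 200.0),
}
low_total_bn = sum(low for low, _ in line_items_bn.values())
high_total_bn = sum(high for _, high in line_items_bn.values())

# $400bn of chips plus $400bn of surrounding infrastructure.
target_bn = 400.0 + 400.0
print(f"Line items: ${low_total_bn:,.0f}bn to ${high_total_bn:,.0f}bn "
      f"against a target of ${target_bn:,.0f}bn")
```

On these assumptions the ad uplift lands a little under $400bn, and the three line items together bracket the $800bn figure, so the case is possible but leaves little margin at the low end.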

The big question is how far AI agents can take us. In August 2023, OpenAI had between 1 and 2 million paid subscribers. Since then, its annualised revenue has doubled, suggesting it now has roughly 2 to 4 million subscribers. For comparison, the iPhone sold 11.63 million units in its second year. ChatGPT’s subscription numbers are impressive, but not overwhelmingly so. Unlike the iPhone, whose appeal was immediate and functionality obvious, ChatGPT’s usefulness, while essential to some (I include myself in this group), remains less clear to others.

And it is telling that I am not using it as a consumer; I’m using it for work. The growth likely won’t come from ChatGPT (whose growth looks to be stalling) and its competitors alone. It will come from enterprise applications, which will generate huge workloads if companies can successfully integrate them. Right now, you’ve got apps running for 100 employees in a company of 50,000. Once they reach all 50,000, costs are going to go up. Once we go from a handful of firms to thousands and then millions using applications built on generative AI, the market will have expanded still further.

Some 28% of workers currently use generative AI at work. If companies institutionalise generative AI, this figure could climb towards 100%. Many workers are using the technology in their workplaces without much guidance; formal training would likely increase usage, and as more advanced and user-friendly applications emerge, using them should become more effortless.

A turbulent ride?

Yet some headwinds could dampen this take-off.

First, inference costs are being driven down by third parties like Groq and Together.ai (as highlighted in EV#462). How much will we spend on AI chips if inference gets cheaper than expected?

Second, ASICs and custom chip development by hyperscalers could put a dent in Nvidia’s advantage. Most of the costs lie in the inference stage. As hyperscalers increasingly design their own specialised chips optimised for inference, they reduce their reliance on general-purpose AI hardware from external suppliers. This is what Google did with its TPUs.

Finally, there is always a risk that enterprise adoption ends up being slower than expected. Perhaps it is harder than we expect to deploy reliable applications across incumbents. It might be that, despite enterprise interest, those problems get solved by startups who then need to sell into enterprises.

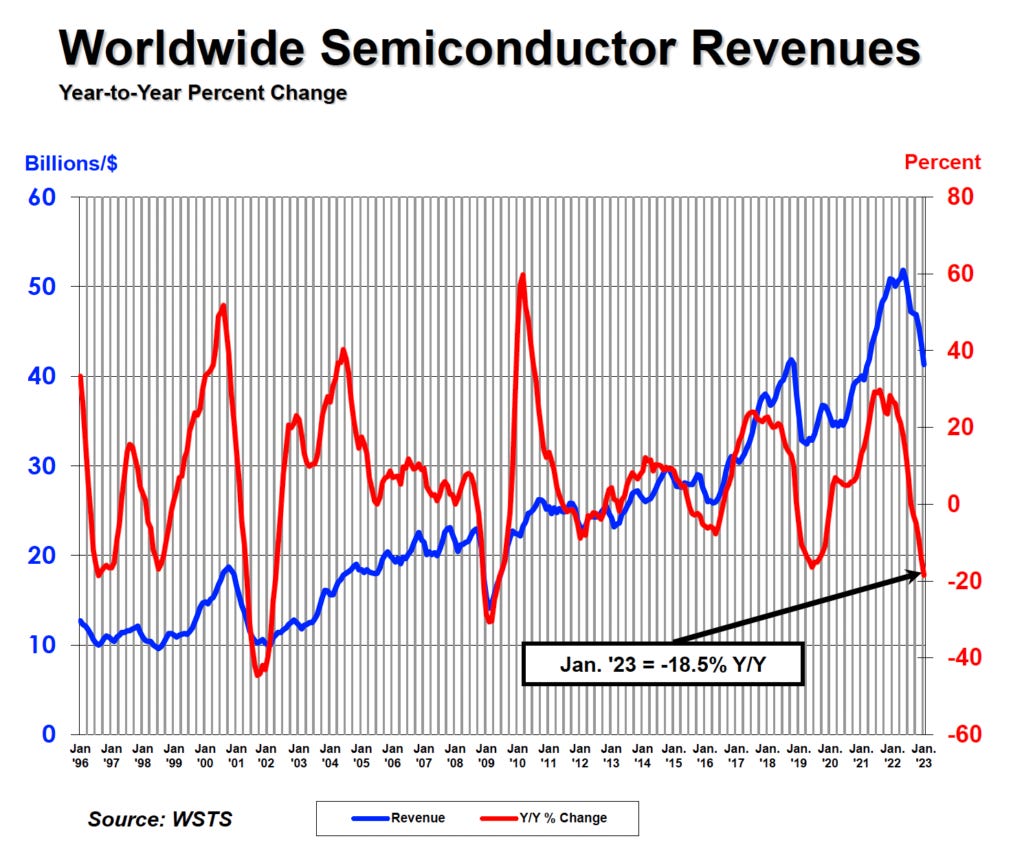

And this is all occurring in an incredibly cyclical industry. The complicated semiconductor supply chain results in long lead times, and magnificent as chip manufacturing is, chipmakers haven’t yet developed the ability to be Nostradamus.3 The result is an industry whose annual growth has swung from 50% to -10% in the space of a few years. This is the industry’s own clock cycle.

The seatbelt sign is only temporary

Fortunately, history provides us with some evidence of long-term tailwinds.