🔮 The cheat codes of technological progress

How a handful of laws help predict which technologies will dominate

Physics has laws. Gravity doesn’t care about your business model. But “technological laws” like Moore’s Law are really just observed patterns — and patterns can break.

That said, dismissing them entirely would be a mistake. These patterns have held up remarkably well across decades, and understanding them helps explain some of the most important economic shifts of our time. Why did solar power suddenly become cheap? Why can your phone do things that supercomputers couldn’t do 20 years ago? Why does everything seem to be getting faster, smaller and cheaper all at once?

The answer lies in these technological regularities — Moore’s Law, Wright’s Law, LLM scaling laws and more than a dozen others that most people have never heard of. They’re not iron-clad rules of the universe, but they’re not random either. They emerge from the fundamental economics of learning, scale and competition.

Today we have a slow, special weekend edition for you about what important technology “laws” actually tell us, why they work when they work and what happens when they don’t.

Whether you’re trying to understand the AI boom, the energy transition, or just why your laptop doesn’t suck as much as it used to, these patterns are your best guide to making sense of our accelerating world.

We prepared a scorecard to help you evaluate these technological laws based on their empirical basis, predictive accuracy, longevity, social and market influence, and theoretical robustness. This is only a snippet—for the full scorecard covering seventeen laws, read on!

The core of computing

Most readers know about Moore’s Law, named after Intel co-founder Gordon Moore, who was asked in 1965 to predict what would happen in silicon components over the next 10 years. He predicted that the number of components on a chip would double every year, and in 1975 he revised the pace to roughly every two years, a rate that went on to drive exponential leaps in computing power.

The end of Moore’s Law has been predicted many times. Yet each anticipated limit, whether from physics or economics, has sparked new waves of innovation such as GPUs, 3D chip stacking and quantum architectures. Moore’s Law was never a single forever-exponential; it is better viewed as a relay race of logistic technology waves. The baton is still being passed, just more slowly and with ever-higher stakes, so the graph still looks exponential even though the underlying sprint has become a succession of S-curves.
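The relay-race picture can be sketched in a few lines of Python. This is a toy model, not fitted to any real chip data: the number of waves, their spacing in years and the tenfold jump in each ceiling are all assumptions chosen for illustration.

```python
import math

def logistic(t: float, ceiling: float, midpoint: float, steepness: float = 1.0) -> float:
    """One technology wave: slow start, rapid growth, then saturation at `ceiling`."""
    return ceiling / (1.0 + math.exp(-steepness * (t - midpoint)))

def stacked_waves(t: float, n_waves: int = 6, spacing: float = 5.0, gain: float = 10.0) -> float:
    """Sum of successive waves (hypothetical parameters).

    Wave k peaks in growth `spacing` years after wave k-1 and
    saturates at a ceiling `gain` times higher.
    """
    return sum(logistic(t, gain ** k, spacing * k) for k in range(n_waves))

# Print the combined curve every five years.
for year in range(0, 41, 5):
    print(f"t={year:2d}  total={stacked_waves(year):12.1f}")
```

While new waves keep arriving, the total grows by roughly the same factor every five years, which is what an exponential looks like. Once the last wave saturates, the total flattens. In this toy model, the curve only stays exponential for as long as someone keeps picking up the baton.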

For decades, making computer chips smaller let engineers simply turn up the speed without using extra power – a trend called Dennard Scaling, which worked hand-in-hand with Moore’s Law. But physics pushed back. Smaller parts started to overheat and by the early 2000s manufacturers hit a ceiling: raising the clock speed any higher would melt the chip. The solution was to add more “brains” (cores) that share the work instead of asking one core to sprint faster and hotter. That shift to multi-core processors, explored in detail here, let performance keep climbing without turning your laptop into a space heater.

Still, innovations continued, reaffirming the adaptability of these foundational laws.

Collateral exponentials: storage, LEDs and solar

Raw computing muscle doesn’t matter if you can’t afford a place to keep all the data it creates. That’s where Kryder’s Law comes in: the cost of storing information has fallen so fast that we’ve gone from guarding a few megabytes on floppy disks to tossing terabytes into the cloud for pocket change.

Cheap, roomy drives unlocked everything from YouTube and Netflix to the photos filling your phone. But the parts we use today—magnetic platters and flash chips—are bumping up against hard physical limits. New ideas, like packing data into strands of DNA, could be a thousand times denser than the best solid-state drives, potentially restarting the price-drop roller-coaster.

If that sounds familiar, it should. Just as Moore’s Law kept shrinking transistors, storage has advanced in overlapping waves: fresh materials, clever techniques, better manufacturing. Up close each wave looks like an S-curve that flattens out, but zoom out and the combined effect is a long, steady surge that keeps powering the next frontier.

Similarly, Haitz’s Law has done for LEDs what Moore’s did for microchips.

Every few years, LEDs get brighter for the same amount of electricity while their price per lumen keeps falling. It’s the result of a thousand tweaks: better semiconductor materials, smarter chip designs, improved heat-sinking, and streamlined factory lines.

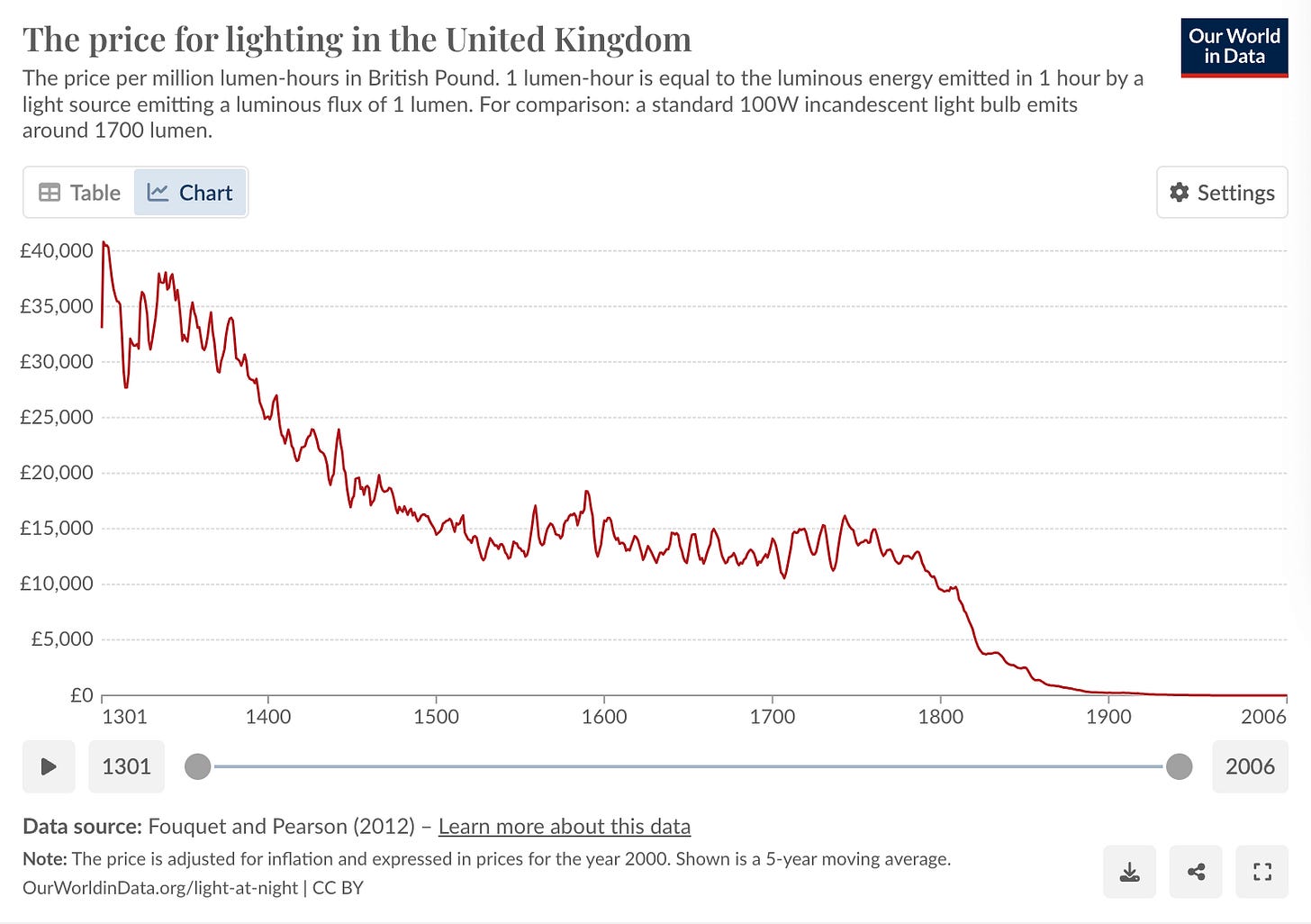

For those interested in a longer historical lens, the cost of lighting has plummeted by orders of magnitude since the 14th century, when tallow candles made with cow or sheep fat provided meager illumination at a high price.

Gas lamps in the 19th century and Edison’s incandescent bulbs in the 20th were both significant leaps, yet neither revolution can match the accelerating pace of modern LEDs.

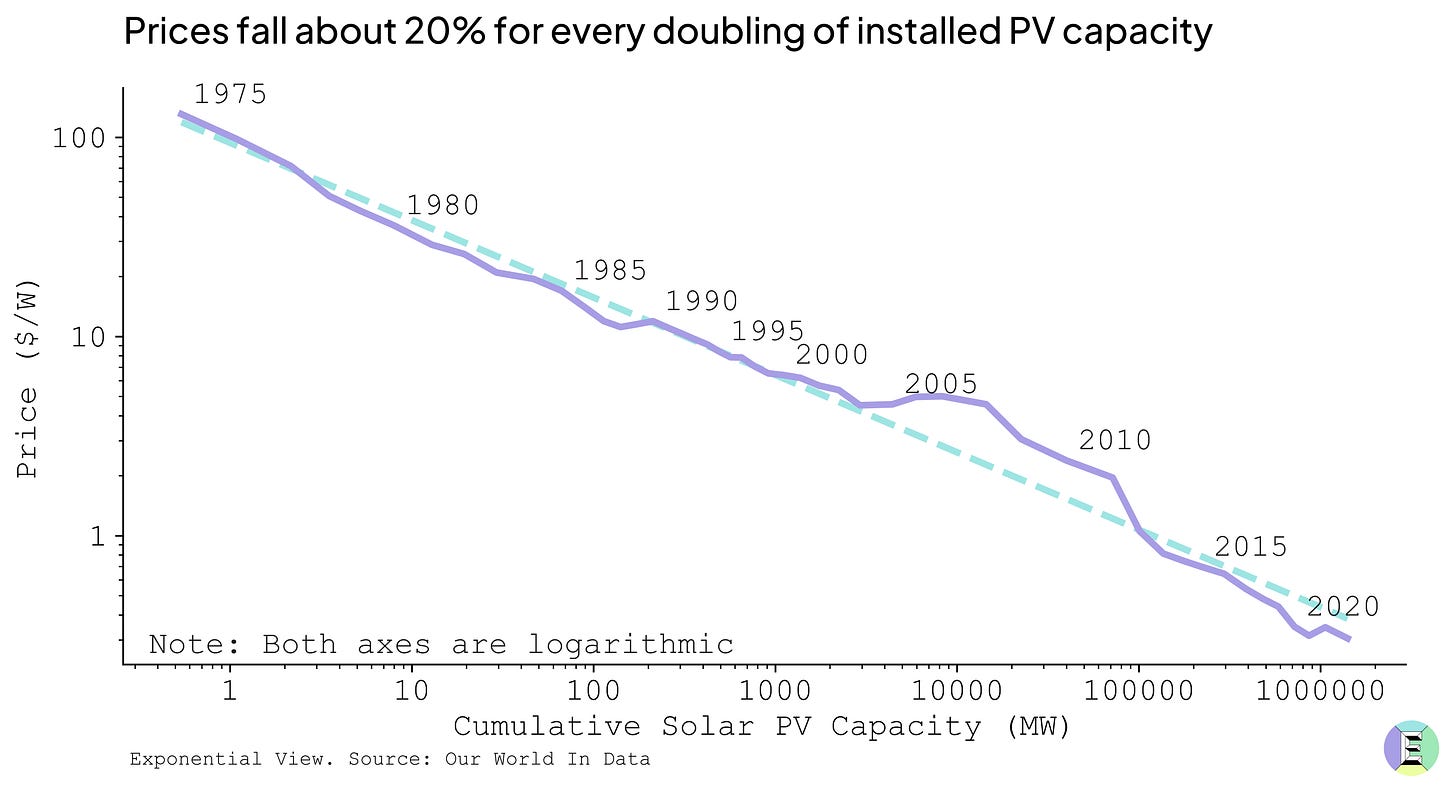

Swanson’s Law is the solar-power version of a volume discount: each time the world’s cumulative volume of shipped solar panels doubles, the price of a panel drops by roughly 20%. Over a few short decades those repeated cuts have worked like compound interest in reverse, turning solar from an expensive science-fair project into, in many places, the cheapest way on Earth to make electricity. It’s a textbook example of how steady, exponential cost declines can flip a market on its head.
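To see how the "compound interest in reverse" adds up, here is a minimal sketch of the learning-curve arithmetic. The 20% figure comes from the text; the starting price of 100 and the function names are placeholders, not real market data.

```python
import math

def price_after_doublings(initial_price: float, doublings: float,
                          learning_rate: float = 0.20) -> float:
    """Price after a number of doublings, with a fixed percentage drop per doubling."""
    return initial_price * (1.0 - learning_rate) ** doublings

def price_at_volume(initial_price: float, initial_volume: float,
                    volume: float, learning_rate: float = 0.20) -> float:
    """Same calculation, expressed in terms of cumulative volume."""
    doublings = math.log2(volume / initial_volume)
    return price_after_doublings(initial_price, doublings, learning_rate)

# Illustrative only: a price index of 100 and ten doublings (1,024x more volume).
print(round(price_at_volume(100.0, 1.0, 1024.0), 2))
```

Ten doublings at 20% each leave the price at about 11% of where it started. No single step looks dramatic, but the cumulative effect is a drop of nearly 90%.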

Connectivity is unstoppable

Connectivity has had a profound impact on all our lives and there’s a set of laws that can tell us what’s going on. Nielsen’s Law is the “speed limit” that keeps jumping. Top-tier internet connections get about 50% faster every year, a journey that has taken us from the screech of dial-up to whisper-quiet gigabit fiber and made streaming, cloud gaming and video calls feel effortless.
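A 50% annual gain compounds faster than it sounds. The sketch below works out the implied doubling time and the cumulative speed-up over a decade. The 1 Mbps starting point is an arbitrary baseline chosen for illustration, not a historical measurement.

```python
import math

def doubling_time_years(annual_growth: float) -> float:
    """Years needed to double at a constant annual growth rate (0.5 means 50%)."""
    return math.log(2) / math.log(1.0 + annual_growth)

def speed_after(years: float, start_mbps: float, annual_growth: float = 0.5) -> float:
    """Connection speed after compounding `annual_growth` for `years`."""
    return start_mbps * (1.0 + annual_growth) ** years

print(f"doubling time: {doubling_time_years(0.5):.2f} years")
print(f"after 10 years: {speed_after(10, 1.0):.1f}x the starting speed")
```

At 50% a year, speeds double roughly every 1.7 years and grow nearly 58-fold in a decade.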

Edholm’s Law says the walls between wired, wireless, and optical links keep crumbling. Today’s Wi-Fi 6 and Wi-Fi 7 routers pump out gigabit-class speeds that used to require an Ethernet cable, showing how quickly those once-separate lanes are merging.

Throw in Gilder’s Law¹ (which boldly claimed total bandwidth in telecom networks might triple yearly) and Butters’ Law (the cost of transmitting a bit over optical networks halves every nine months), and you get a picture of nearly unstoppable expansions in capacity and falling network costs.

Even the airwaves have their own rule: Cooper’s Law, courtesy of cell phone pioneer Martin Cooper, states that the number of simultaneous wireless conversations that can fit into the usable radio spectrum over a given area doubles about every 30 months.

In 1901, when Guglielmo Marconi first transmitted Morse code across the Atlantic, the technology was so primitive that his signal used a significant fraction of the world’s radio spectrum. Today, more than one trillion radio signals can be simultaneously sent without interfering with each other.

That’s why we can cram more and more people into the same frequencies without meltdown – assuming we keep improving our spectral efficiency with tech like MIMO, 5G or even future 6G. If Cooper’s Law continues at its current pace, by 2070, each person on Earth could theoretically use the entire radio spectrum without interfering with anyone else’s signals. Of course, practical deployments must still respect physical and regulatory limits, but the trend of maximizing capacity continues to hold.
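As a rough sanity check on the "one trillion" figure, the sketch below compounds a 30-month doubling from Marconi's 1901 transmission. It assumes the doubling rate held uniformly over the whole period, which is an idealization; the function name is just for illustration.

```python
def cooper_multiplier(start_year: int, end_year: int, doubling_months: float = 30.0) -> float:
    """Capacity multiplier if spectrum capacity doubles every `doubling_months`."""
    doublings = (end_year - start_year) * 12 / doubling_months
    return 2.0 ** doublings

# A century of 30-month doublings is 40 doublings.
print(f"{cooper_multiplier(1901, 2001):,.0f}")
```

Forty doublings give a multiplier of just over one trillion, which is the same order of magnitude as the claim above.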

This is great news for networks themselves. Metcalfe’s Law suggests that the value of a network is proportional to the square of the number of users. This was the core of social media growth: each new user can connect to every existing user, so the number of possible connections, and with it the value of the network, grows far faster than the user count itself.

Metcalfe’s Law has also been used to value bitcoin and enterprises. This is a reminder that many of these laws matter most when enough people adopt the technology. In other words, scaling is crucial to turn a neat idea into a major change.
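Metcalfe's Law is at heart a statement about pairwise connections, and a small sketch makes the growth concrete. The value per connection is a placeholder parameter; the law itself only claims proportionality, not a specific figure.

```python
def pairwise_connections(users: int) -> int:
    """Number of distinct user-to-user links in a network of `users` people."""
    return users * (users - 1) // 2

def metcalfe_value(users: int, value_per_connection: float = 1.0) -> float:
    """Network value under Metcalfe's Law, up to an assumed constant per link."""
    return value_per_connection * pairwise_connections(users)

for n in (1_000, 2_000, 4_000):
    print(f"{n:>5} users -> {pairwise_connections(n):>9,} possible connections")
```

Doubling the user base roughly quadruples the number of possible connections: 1,000 users give 499,500 links, while 2,000 users give 1,999,000.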