🤔 What bosses miss about AI

Imagination.

At his desk, Nicholas Carlini, a senior research scientist at DeepMind, is chatting with a language model. “Convert this program to Rust,” he types. Within seconds, the AI responds with a functional Rust program. Nicholas reviews the code, asks for some optimisations, and shortly he has a version of the program that “improves performance 10-100x”. While the AI can’t replace his expertise, it has changed what he gets to spend time doing (he has shared a list of 50 conversations with AI that improved his work).

Similarly, an EV member with several years of experience growing one of the world’s largest internet services wrote in our Slack community:

using claude 3.5 for a coding project for the first time

omg it’s so good

it has actual intelligence about the situation I’m using it for! and generates good code! and thinks deeply about context I may not have thought of!

And another member shared:

I used Claude 3.5 to build a working simulation of brownian motion in about an hour. I was mind blown. It was able to code seriously nuanced changes (weight/size/location of particles) and would have after that time been a genuinely useful sim for teaching physics.

It took around 8 iterations & ~ 60 mins

It did require my knowledge of what the sim should be doing in order to ‘bug fix’ it as it responded as if it had fixed things (eg. conservation of momentum) when it hadn’t

It took persuasion to get it to produce the code

Let me take you to another scenario. It’s a composite of things I’ve heard as I’ve talked to senior teams and things I’ve read. Picture a corporate meeting room where Sarah, a senior executive at a Fortune 500 company, is also talking about AI. But her conversation is different. “We’ve implemented AI in our customer service department,” she tells her board. “We’re projecting a 15% reduction in call centre costs next quarter.” The board members nod approvingly, but there’s a sense that they were expecting... more.

This contrast illustrates a crucial (read: costly) misunderstanding in how businesses are approaching AI.

The limbo

As I’ve spent time with boards and management teams this year, I’ve spoken to dozens of C-level bosses in the US, UK, and Europe across various sectors, from FMCG to pharma to finance. While there are some exceptions, what people are generally seeing are projects that are interesting and quite powerful, but not truly transformative.

When I speak with companies, I talk about the three levels of working with a new general-purpose technology, of which AI is likely one.

Level 1: Do what we do cheaper. This is where most companies start. They use AI to automate routine tasks – chatbots for customer service, AI-powered translation services, and automated data entry. It’s the low-hanging fruit of AI adoption, what VC investor Tom Tunguz calls “toil”.

Level 2: Do what we do, just do it better. As companies grow more comfortable with AI, they start to see opportunities for qualitative improvements. A major investment bank, for instance, recently used AI to automate much of its unit test coverage. This reduced costs and allowed for more comprehensive testing, improving overall software quality.

Level 3: Do entirely new things. This is where the true potential of AI begins to show and it’s where Nicholas is operating. He’s not just doing his job faster; he’s doing things that were previously impossible.

But here’s the rub: most businesses are stuck at Level 1 or Level 2. They’re using AI to shave costs or incrementally improve processes, missing the opportunity to strategically rethink what their business could look like and how they approach the rethinking itself!

Why?

There are hidden assumptions in the organisations and processes we already have. These assumptions are based on things like:

The collective intelligence of the organisation

The speed with which it can make decisions

The types of decisions that can be made at each level of the organisation

These assumptions have been baked into the processes developed over the years.

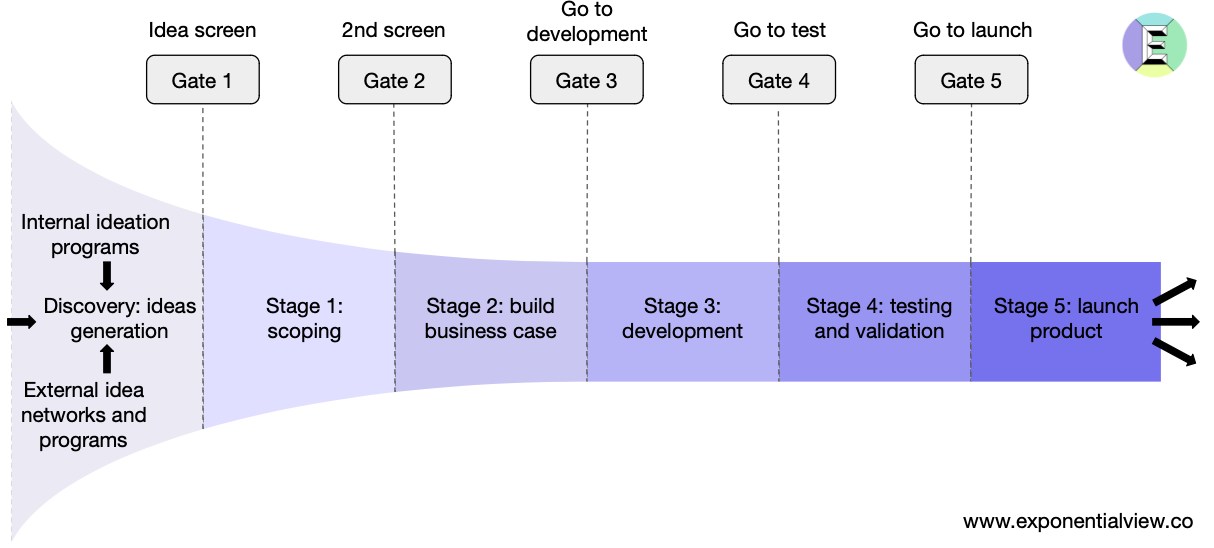

Let me give you a simple example. Imagine a new product development process, say for a consumer goods company. You generate ideas at the beginning of a funnel, then take these ideas through a series of stage-gates. They need to pass certain thresholds before proceeding to the next stage, where more resources are used.

Traditional product development processes are designed based on historical data about how many ideas typically enter the pipeline. If that rate is constant or varies by small amounts (20% or 50% a year), your processes hold. But the moment you 10x or 100x the front of that pipeline because of a new scientific tool like AlphaFold or a generative AI system, the rest of the process clogs up. Stage 1 to Stage 2 might be designed to review 100 items a quarter and pass 5% to Stage 2. But what if you have 100,000 ideas that arrive at Stage 1? Can you even evaluate all of them? Do the criteria used to pass items to Stage 2 even make sense now?
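To make the arithmetic concrete, here is a rough sketch in Python using only the illustrative numbers from the paragraph above (100 reviews a quarter, a 5% pass rate, 100,000 incoming ideas). The function names and the assumption that review capacity is fixed per quarter are hypothetical, chosen for illustration rather than taken from any real process.

```python
def stage_gate_load(ideas_in, review_capacity, pass_rate):
    """Return (reviewed, passed, backlog) for one quarter at a single gate.

    Assumes a fixed review capacity per quarter (an illustrative simplification).
    """
    reviewed = min(ideas_in, review_capacity)   # the gate can only review so many
    passed = round(reviewed * pass_rate)        # ideas promoted to the next stage
    backlog = ideas_in - reviewed               # ideas nobody has looked at yet
    return reviewed, passed, backlog


def quarters_to_clear(ideas_in, review_capacity):
    """Quarters needed to review one batch at fixed capacity (ceiling division)."""
    return -(-ideas_in // review_capacity)


# The process as designed: 100 ideas arrive, 100 are reviewed, 5 pass.
print(stage_gate_load(100, 100, 0.05))          # (100, 5, 0)

# The same gate with 100,000 ideas: still 5 pass, and 99,900 sit unreviewed.
print(stage_gate_load(100_000, 100, 0.05))      # (100, 5, 99900)

# At the designed pace, reviewing that one batch takes 1,000 quarters.
print(quarters_to_clear(100_000, 100))          # 1000

# Even if Stage 1 could review everything, the 5% rule would send
# 5,000 ideas to a Stage 2 that was sized to receive about 5.
print(stage_gate_load(100_000, 100_000, 0.05))  # (100000, 5000, 0)
```

Either way the bottleneck just moves: keep the capacity and the backlog explodes, or scale the capacity and the next stage is flooded. That is why the pass criteria themselves, not only the throughput, come into question.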

Whether it is a product development process or something else, you need to rethink what you are doing and why you are doing it. That takes time, but crucially, it takes imagination.