😵‍💫 The AI acceleration delirium: models, mergers, and mayhem

Welcome to artificial general confusion

What a week. The feeling isn’t just acceleration anymore; it’s delirium. You blink, and another model ships; another rumour flies. The pace has outrun all reasonable expectation. Welcome to artificial general confusion.

This week felt like a microcosm of the entire AI race. OpenAI dropped o3 and o4-mini, their latest reasoning models. o3 is genuinely impressive: it can call on an array of tools, from Python to web search and image analysis, to do your bidding.

o3 has shown its mettle in my early tests. A particularly tricky one is my flight challenge: making a transatlantic booking under specific constraints (like my preference for particular planes). o3 did really well, beating every other model, but, for now, I’m still better. Another test was a complex, multi-factor, real-world strategic problem. o3 worked its way through it like a master strategist, pulling out the key issues and addressing each with just enough detail.

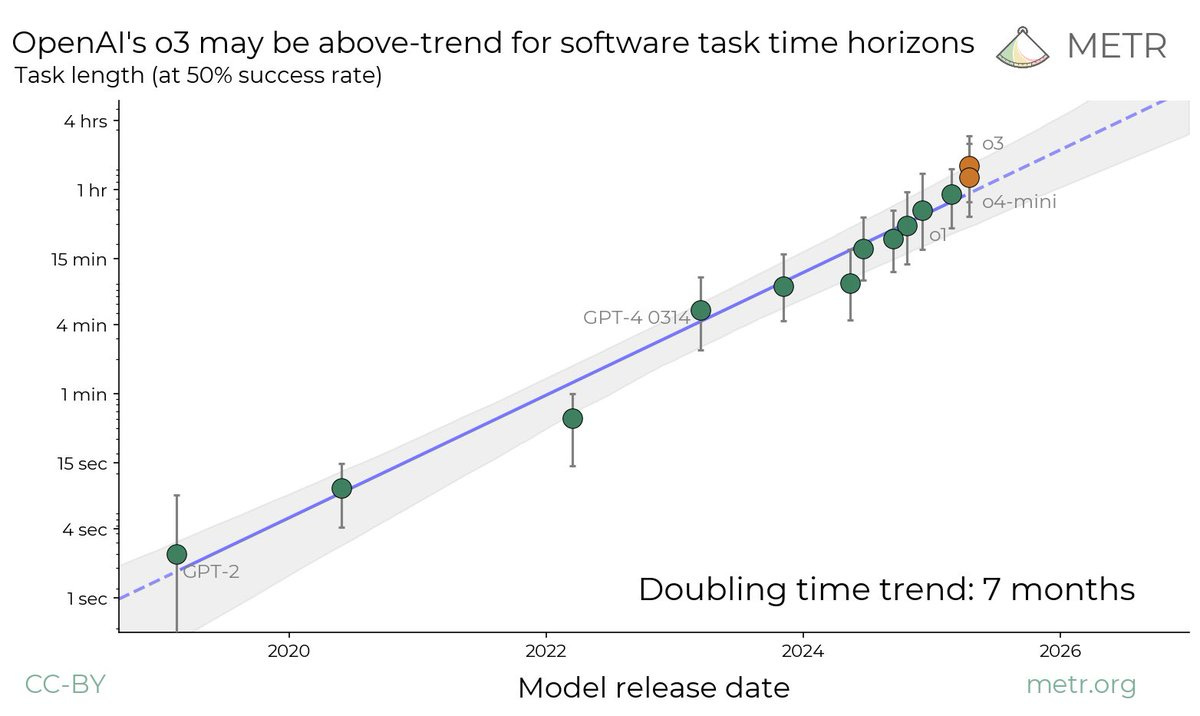

One of the more formal measures I’m tracking closely is METR’s time horizon, which estimates how long a task (measured by how long it takes a skilled human) an AI system can complete with a given reliability. Mastering long tasks is a key unlock for significant productivity enhancements.

On this, o3 does not disappoint.

Benchmarks offer a glimpse, but we should be wary of simplistic readings. The messier reality of real-world use still shows a palpable rate of improvement.

Predictably, there was a stampede online, with people breathlessly declaring this “artificial general intelligence,” as if we’d finally tripped over some obvious finish line. It’s a line that, frankly, remains stubbornly undefined.

Within a day, Google fired back with Gemini 2.5 Flash, the faster, more efficient sibling of the 2.5 Pro model that’s become my go-to. On at least one benchmark, Google seems to be carving out a fascinating and potentially dominant space right now. Their price-performance frontier is, no doubt, helped by their hardware expertise.

Anthropic’s releases this week went largely unnoticed. Claude can now search your email, Google Drive, and calendar, though it is ponderously slow, and I use other AI tools for my email.

Then came rumours that OpenAI is considering buying Windsurf, one of those increasingly indispensable code completion tools that software engineers use to magnify their output. The purchase price? A cool $3 billion. What’s more, there are rumours that Sam Altman might turn ChatGPT’s billion-user base into a social network full of yeets.

How do we make sense of this four-ring circus of releases and rumours?

First, the pace is unsustainable for traditional product cycles. Products are being released faster than they can be properly described, product-managed, or even benchmarked in a way that’s useful to anyone outside the lab. The capabilities, while impressive, feel like jagged edges pushed out into the market. As Rohit Krishnan says: