Grading my 2024 predictions

🔥 or 🧊?

Hi, it’s Azeem.

A year ago, I offered a horizon scan for 2024 (parts 1, 2 and 3). At the time, we were grappling with the pace of AI adoption, surging renewables, and growing friction over strategic technologies. I also saw hints that advanced drugs, robotics and open-source tools would shape new opportunities.

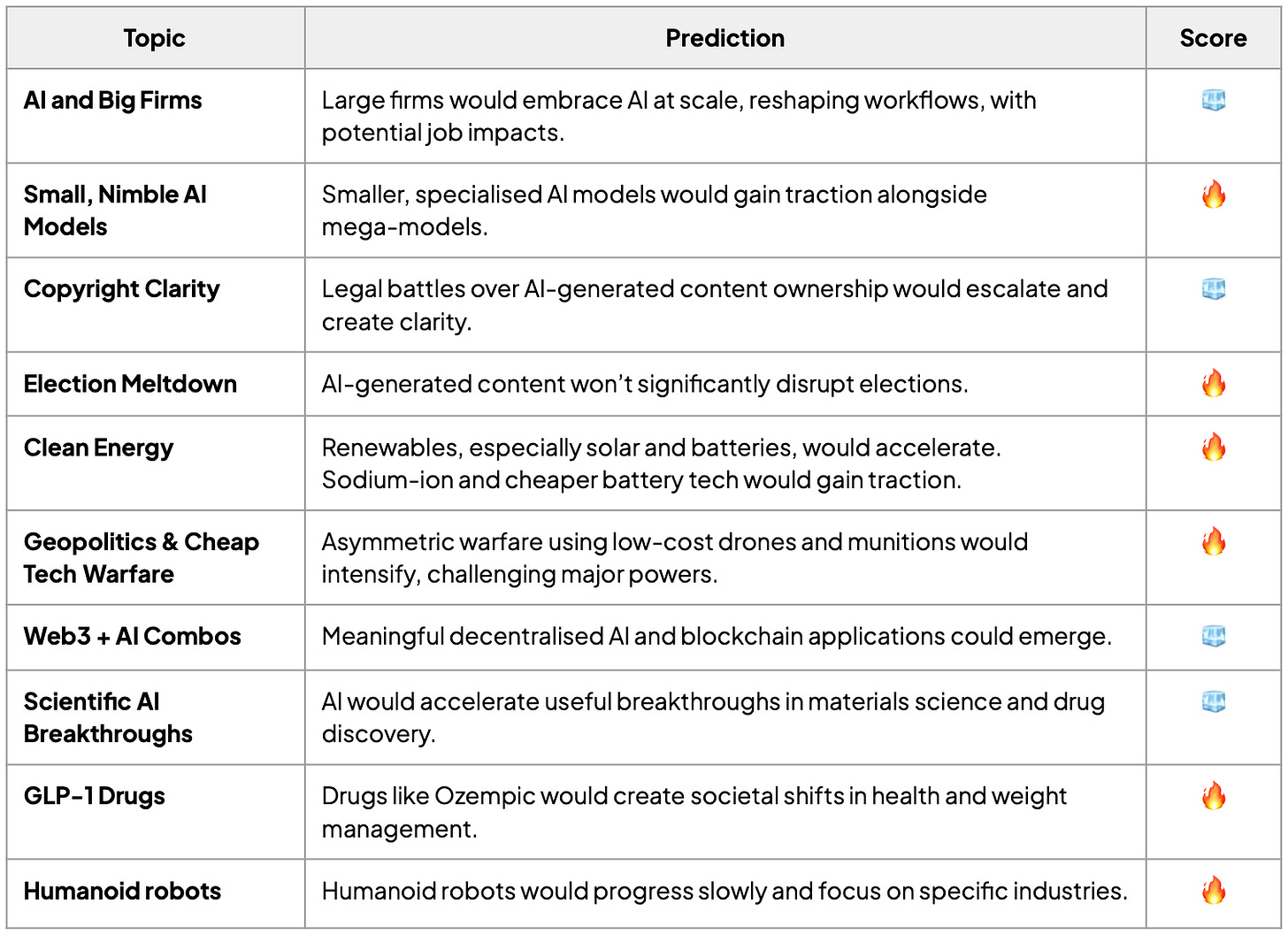

As I do each year, I have reviewed this outlook, and the results are encouraging. Many of the key trends I highlighted held true, and some moved faster or further than I expected. A few unfolded more gradually or differently than anticipated, but the overall trajectory was accurate. The outlook wasn't strictly a list of predictions, but I'll grade it as one: 🧊 for areas that evolved in unexpected ways and 🔥 for spot-on hits.

Here’s my scorecard: