🔮Existential risks’ existential problem

Does p(doom) come from anywhere other than thin air?

Will AI kill us all through malice, incompetence, or accident? The question has split both expert and interested communities, and the debate often appears childish and, sometimes, as Gary Marcus points out, “ad hominem”.

I want to share how I think about it, although the headline of this note somewhat gives away my conclusion.

I invite you to ask questions, share your opinions, and challenge me (and each other) respectfully in the comments.

OK, let’s get into it.

Do we face an existential risk as AI develops from today’s impressive but wobbly systems into ever more capable ones? In its simplest form, the case rests on two assertions, both of which have some logic to them:

Assertion 1. It is possible for there to be intelligent agents that are more capable than humans.

Assertion 2. If such agents are more capable than humans, then they—individually or collectively—could pose a threat to us.

In the interests of brevity, I will loosely define intelligence as the ability of an agent to evaluate its environment, make predictions about what it needs to do to operate in that environment, and take actions based on those predictions. The agent should also be able to learn from its actions and change its future behaviour.

That intelligence may manifest itself differently, perhaps because of other sensory inputs (echolocation, for example). Or, perhaps, because of entirely different cognitive architectures: consider an octopus’s decentralised intelligence.

The game of intelligence lies somewhere at the intersection of making appropriate sense of the environment an agent is in and predicting the actions it needs to take (either changing its own inner states or affecting the outside world) so that it can keep making useful predictions in future.

I’ll roughly use William MacAskill’s definition of existential risk: risks that threaten the destruction of humanity’s long-term potential. This could mean the extinction of humanity as a species and the end of the lives of all humans. But it could also mean scenarios where 10 or 100 of us survive on an island in the Pacific, or in a zoo for the pleasure of an AI (or an alien species). In those scenarios, humanity’s long-term potential would be curtailed.

Assertion No. 1: The possibility of smarter-than-human agents

Let’s tackle the first assertion: that there can be intelligent agents more capable than humans.

This assertion is hard to refute without falling into one of two extreme positions.

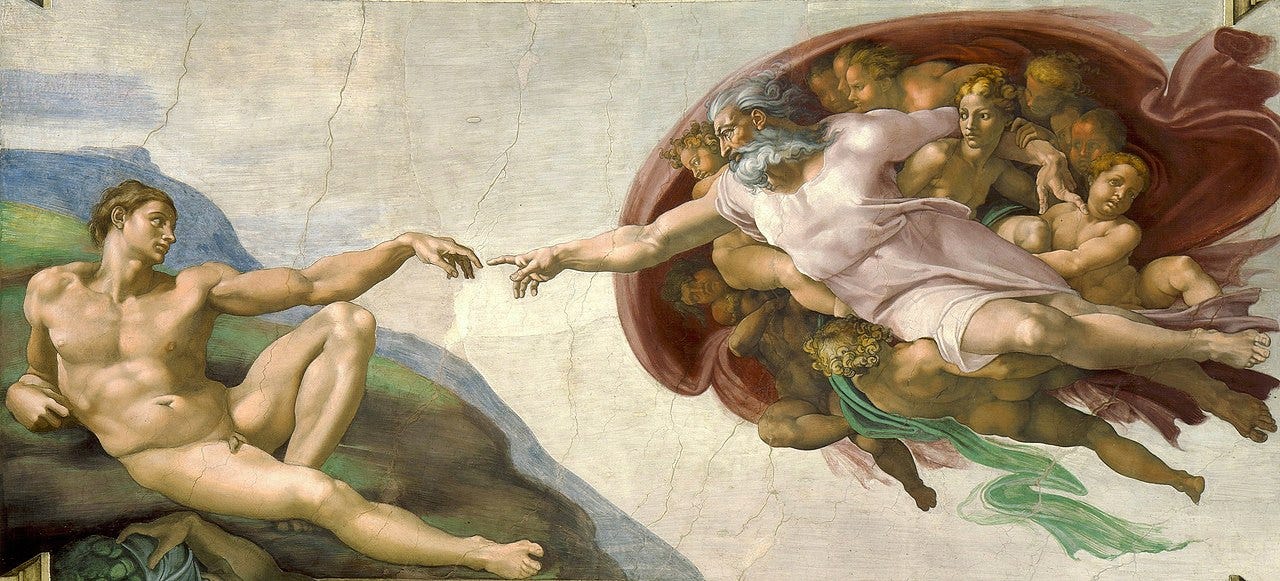

The first is the homo-centric fallacy that we, Homo sapiens, are the end state of evolution. The idea is that after aeons of evolution, with generally more intelligent creatures emerging (us vs primates vs early mammals vs dinosaurs vs trilobites), we’ve reached peak intelligence: it’s us peering over the walls back into the Garden of Eden. Speciation ended with us. And nowhere in the Universe is there anything that comes close to our level of intelligence. It’s a deeply hubristic stance, and I struggle with it.

The second is a reliance on a dualist interpretation of the world: the belief that there is some inaccessible ‘spirit’ or ‘soulfulness’ that is either disconnected from our physical substrates or can only arise in our particular physical substrate, the specific biochemistry of our brains. This appeal to an élan vital, deific intervention or a spirit world seems to demand ever more extreme positions as mainstream science progresses.1

This claim — that there could be agents more intelligent than us — doesn’t say anything about the time frame by which such agents will emerge or, if they exist outside of Earth, the time frame by which they contact us.

If such agents are more capable than humans, then they could pose a threat to us.

Assertion No. 2: These agents may pose a threat to us

The second claim, that agents more capable than humans could threaten us, also appears uncontroversial. Many less capable agents already threaten humans: mosquitoes, the SARS-CoV-2 virus, rabid dogs, and crocodiles. And history shows that more technologically advanced societies, with the advantages of cultural evolution, knowledge accumulation, and better nutrition, have from time to time suppressed less technologically advanced ones.

We’ve also seen, in many cases, more advanced or capable agents (for instance parents, teachers, paediatricians, and older siblings) protect, nurture and care for less capable ones. And history offers many cases of more advanced groups that did not seek to suppress or eliminate less technologically advanced societies.

So the “could” in “could pose a threat” is rather important. Certainty that highly capable agents “won’t pose a threat” could be seen as hopelessly optimistic. It relies on a kind of deterministic progressivism: the belief that greater intelligence brings, on average, greater moral and ethical awareness, and so an extreme intelligence would necessarily have an extreme ethical understanding.

The opposite certainty, that highly intelligent agents would be part Hernán Cortés, part Terminator, part rapacious capitalist, has its own problems. Again, the problem is the certainty of the assumption.2

In truth, we cannot be certain in either direction.

My current belief is that it is possible for there to be agents more intelligent than present-day humans, and that we can’t guarantee they will always act in our best interests.3 But the question is: how does that lead to an existential risk?