🔮 Exponential View #551: New frontiers for R&D; American open-source; Gemini 3 & Google's pull; breaking the thermal wall, Waymo expands, teens on marriage++

An insider's view on what matters in AI and exponential technology

Free for everyone:

Open models, closed minds: Open systems now match closed performance at a fraction of the cost. Why enterprises still pay up, and what OLMo 3 changes.

Watch: Exponential View on the split reality of AI adoption.

⚡️ For paying members:

Gemini 3: Azeem’s take on what Google’s latest model means for the industry.

Post‑human discovery: How autonomous research agents can cut through barriers that have held back disruptive breakthroughs.

AI Boom/Bubble Watch: This week’s key dislocations and movers.

Elsewhere: new chip cooling technology, biological resilience in space, AI and water use, the return of the Triple Revolution++

Plus: Access our paying members’ Slack (annual plans).

Open models, closed minds

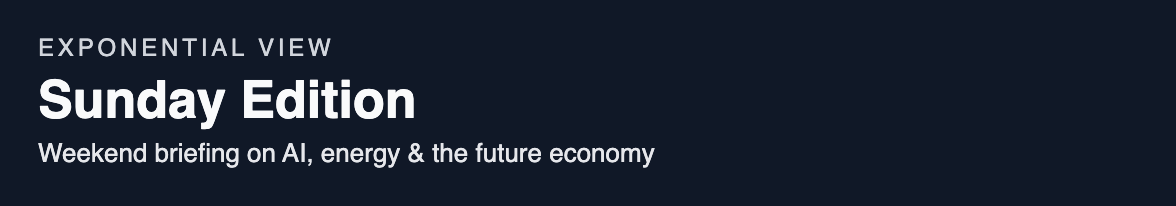

Despite open models now achieving performance parity with closed models at ~6x lower cost, closed models still command 80% of the market. Enterprises are overpaying by billions for the perceived safety and ease of closed ecosystems. If this friction were removed, the market shift to open models would unlock an estimated $24.8 billion in additional consumer savings in 2025 alone.

Enterprises pay a premium to closed providers for “batteries-included” reliability. The alternative, the “open” ecosystem, remains fragmented and legally opaque. Leading challengers like Meta’s Llama or Alibaba’s Qwen often market themselves as “open”, but many releases are open-weight under restrictive licences or only partially open-source. This creates a compliance risk, as companies cannot fully audit these models for copyright violations or bias. For regulated industries, such as banking or healthcare, an unauditable model in sensitive applications is a non-starter.

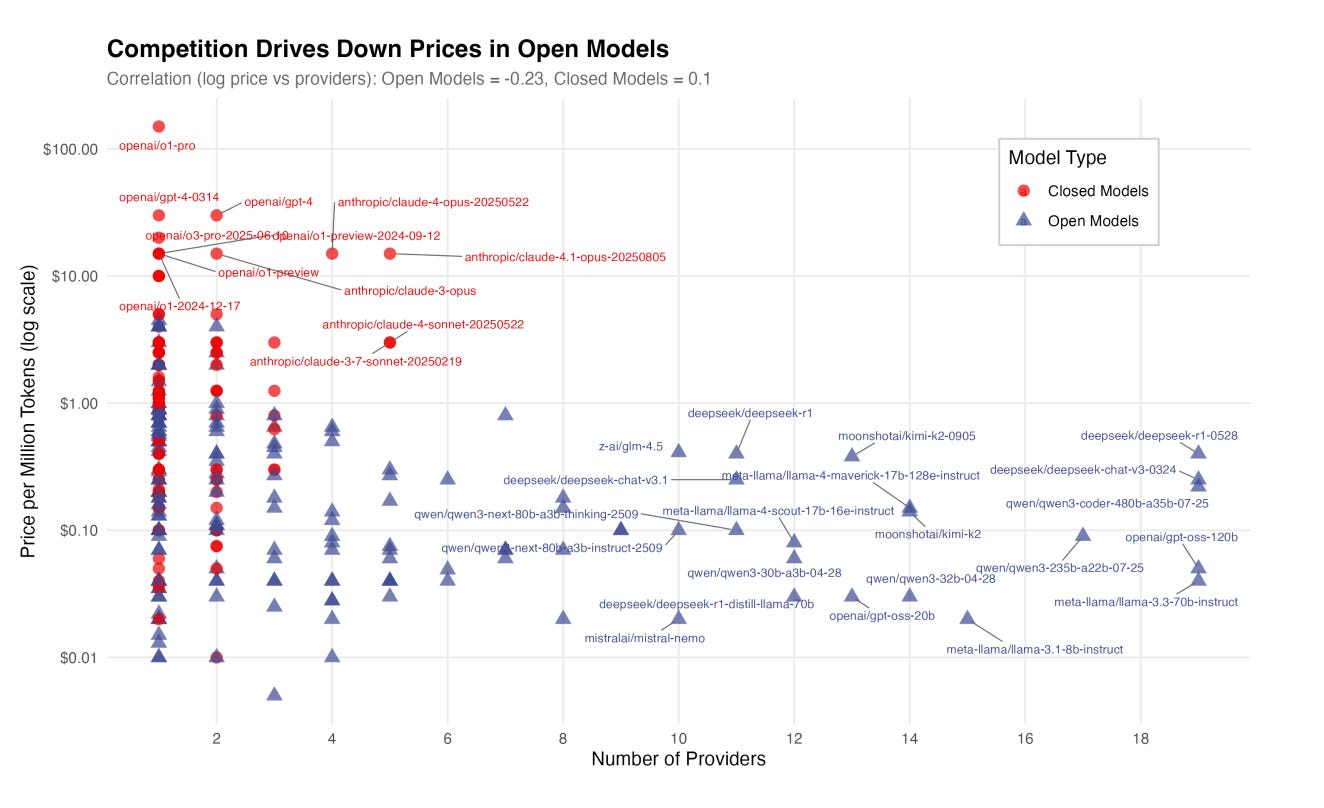

The Allen Institute for AI released OLMo 3 this week, a frontier-scale US model that approaches the performance of leading systems like Llama 3.1 while remaining fully auditable. By open‑sourcing the pipeline, OLMo 3 lowers the barrier for Western firms and researchers to build their own systems and reduces their dependence on closed or foreign models.

Post-human science

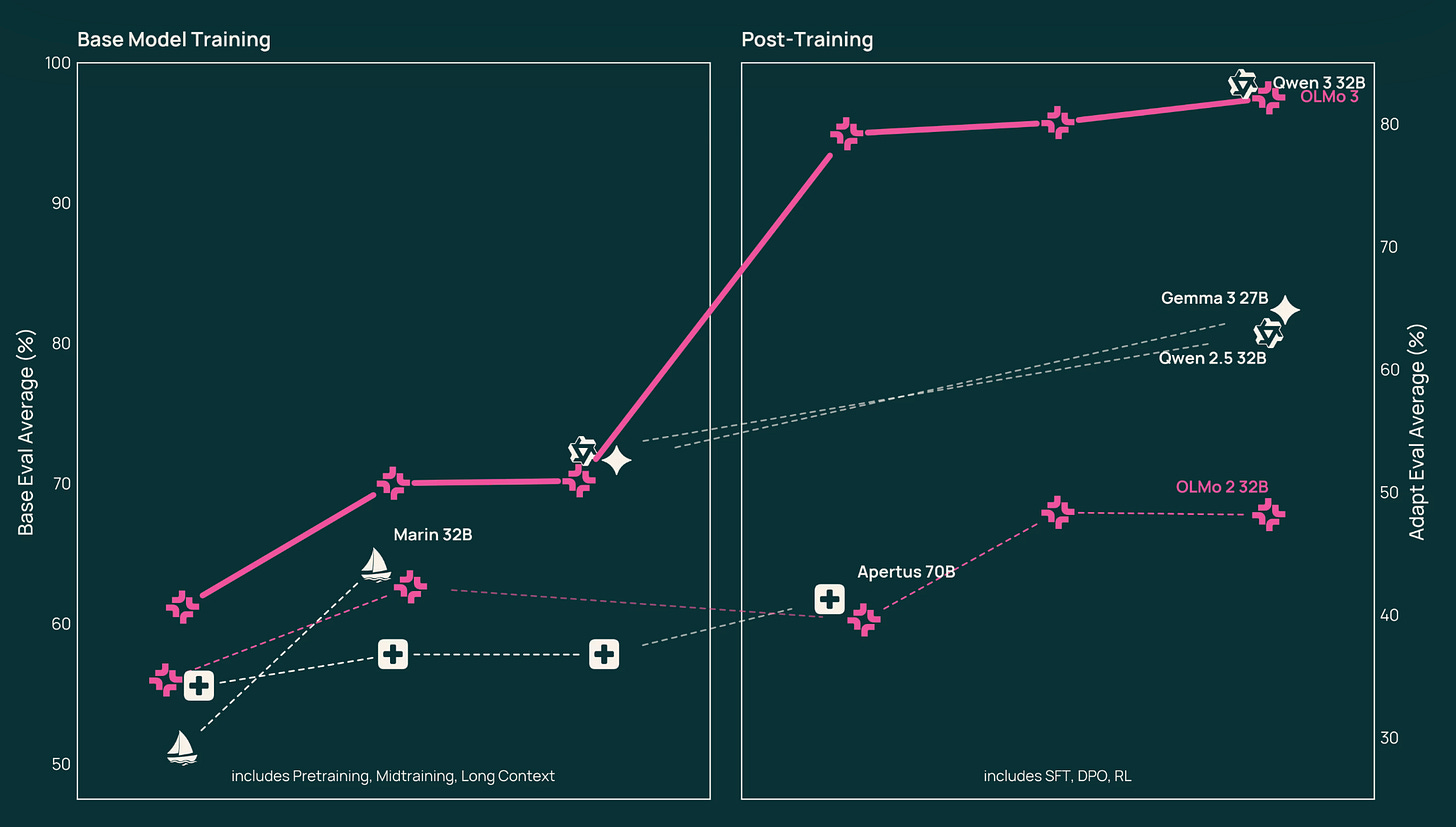

We are moving beyond AI as a productivity tool to a fundamental transformation in the architecture of discovery. This shift, marked by the arrival of autonomous agents like Locus, Kosmos, and AlphaResearch, could dismantle the sociological constraints of human science and completely change what we choose to explore.

Intology’s Locus runs a 64-hour continuous inference loop, effectively “thinking” for nearly three days straight without losing the thread, and outperforms humans on AI R&D task benchmarks. In a single 12-hour run of agent compute, Kosmos can traverse a search space that would take a human PhD candidate six months.

The primary constraint on progress is sociological, not biological. The incentive architecture of modern science has stifled it. A landmark 2023 analysis in Nature of 45 million papers and 3.9 million patents found a marked decline in disruptive breakthroughs across all fields over the past six decades.