🔮 The Sunday edition #514: 20¢ models v $20k AI agents; Manus; million-chip clusters, the €800bn defense pivot & trillion-dollar stimulus ++

Hi, it’s Azeem.

This week, OpenAI stakes its future on premium AI agents priced at $2,000 to $20,000 a month. But can Chinese competitors deliver comparable capabilities at a fraction of the cost? The hardware race intensifies as three tech giants each plan to build million-chip AI clusters by 2027. Meanwhile, Europe finally awakens from its defense slumber. Let’s dig in!

P.S. I am speaking at SXSW next week on AI and energy. We are also hosting a casual Exponential View meet-up.

The economics of AI agency

OpenAI plans to charge between $2,000 and $20,000 monthly for AI agents capable of high-value tasks, such as advanced coding or PhD-level research. OpenAI’s reasoning is that these agents function as digital employees with direct economic returns – not merely as tools.

This is Level 3 of OpenAI’s five-level Stages of Artificial Intelligence, where agents autonomously handle complex tasks. Products such as Operator and Deep research have already previewed this approach.

But even if OpenAI’s top-tier agents initially outperform competitors, that advantage may not last, particularly given China’s aggressive investment in AI as a strategic priority. Chinese companies have already shown they can train competitive AI models at a fraction of the cost incurred by Western companies, and that trend is likely to extend to premium-priced AI agents. DeepSeek-R1 proved that Chinese firms can match or approach Western capabilities with fewer parameters and less compute. Just this week, Alibaba released QwQ-32B, an even smaller model that delivers performance comparable to DeepSeek-R1 at a dramatically lower price.

The stakes for OpenAI are high. To fund frontier development sustainably, it must find reliable ways to stop competitors from undercutting its pricing. Once a new AI capability is proven possible, efficient open-source alternatives inevitably emerge, putting constant pressure on the pioneers and making lasting competitive advantages hard to hold. So, how can companies like OpenAI build a durable moat? Three possible avenues:

Continuously scaling up to larger, more powerful models to stay ahead – similar to how premium smartphones maintain a temporary edge over budget alternatives.

Establishing “walled gardens”, so the most powerful AI capabilities remain exclusive to their own ecosystems. OpenAI currently does this with its agent products, which can’t be accessed via its API.

Developing specialized AI solutions that are custom-trained for specific high-value business tasks (such as drafting legal documents or analyzing medical data). General-purpose competitors would find these hard to replicate.

See also:

Do OpenAI’s agents already have a Chinese rival? I have been playing around with Manus, an agent system that can undertake complex tasks. I’ve given it some work, and it is jaw-dropping. If Deep research saves you the first two days of data gathering, Manus saves you the next two days of analysis and reporting. This video is a fair reflection of what it can do.

AI agents consume hundreds, if not thousands, of times more tokens than chatbots. We’re only just beginning to see the scale of AI demand. Broadcom’s CEO announced that the company’s three largest customers (likely Alphabet, Meta and ByteDance) each plan to build 1-million-chip AI clusters by 2027. This hardware expansion could trigger hundreds of billions of dollars in capital spending across the tech giants. As I highlighted last week, Nvidia’s Jensen Huang believes reasoning models require 100 times more compute than traditional ones, with future needs potentially millions of times higher. The broader market clearly agrees, and for good reason.

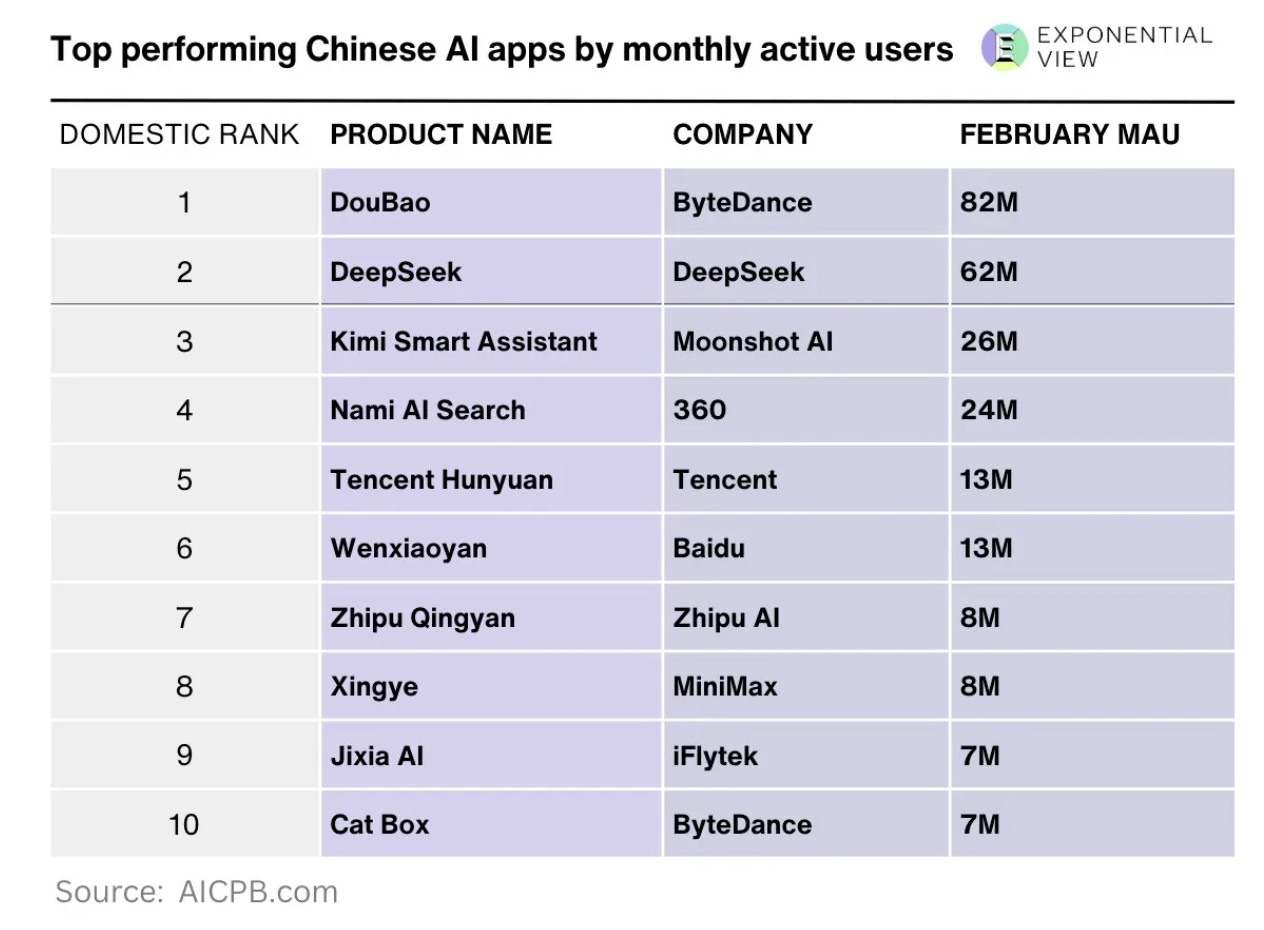

How big is China’s AI market?

Tokens are the building blocks of AI communication. As such, we can use them as proxies to understand the scale of the generative AI economy and its growth. They also help us to estimate the infrastructure necessary to sustain this growth, particularly in terms of computing resources such as GPUs and other specialized chips. I’ve looked at the data coming out of China, including the DeepSeek numbers we referenced in the Monday email, to estimate how big China’s market may be and which companies have the lead. You can read my full analysis here.
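The step from token volume to infrastructure is, at its simplest, a piece of arithmetic: daily tokens divided by what one GPU can serve in a day. The snippet below is a minimal back-of-envelope sketch of that idea, not the method used in the full analysis. The function name `gpus_needed` and all of its inputs (daily token volume, per-GPU throughput, utilization) are hypothetical placeholders, not figures from China, DeepSeek or any vendor.

```python
import math

SECONDS_PER_DAY = 86_400


def gpus_needed(daily_tokens: float, tokens_per_sec_per_gpu: float, utilization: float) -> int:
    """Hypothetical back-of-envelope GPU count for serving a daily token volume.

    All inputs are illustrative assumptions, not measured figures:
    - daily_tokens: total tokens generated per day
    - tokens_per_sec_per_gpu: sustained inference throughput of one GPU
    - utilization: average fraction of the day each GPU is busy, in (0, 1]
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    # Tokens one GPU can actually serve in a day at the assumed utilization.
    tokens_per_gpu_per_day = tokens_per_sec_per_gpu * SECONDS_PER_DAY * utilization
    # Round up, since capacity comes in whole GPUs.
    return math.ceil(daily_tokens / tokens_per_gpu_per_day)


if __name__ == "__main__":
    # Made-up inputs: 864 billion tokens a day at 1,000 tokens/s per GPU.
    print(gpus_needed(864e9, 1_000, 1.0))  # 10000
    print(gpus_needed(864e9, 1_000, 0.5))  # 20000
    # Same throughput, but 100 times the token volume.
    print(gpus_needed(864e9 * 100, 1_000, 1.0))  # 1000000
```

Because the result scales linearly with token volume, a 100-fold rise in tokens (in line with the 100x compute figure Jensen Huang cites for reasoning models) means roughly 100 times as many GPUs unless per-chip throughput improves to match.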

Jordan Schneider from ChinaTalk argues that the shift to reasoning models, which do their thinking during the inference phase, favors the GPUs and other infrastructure available in China: “U.S. export controls aim to restrict China’s ability to train frontier AI models but overlook the growing importance of inference and China’s capacity to scale it.”

AI generating value

We’ve seen new evidence this week.

Bridgewater Associates launched a $2 billion fund last year that uses machine learning and AI to make decisions. The fund’s performance is comparable to that of the firm’s human-led strategies, and it has developed, in CEO Nir Bar Dea’s words, a “unique alpha that is uncorrelated to what our humans do”. There are no details on returns yet, but we’ll keep an eye on it.