🤔 Why o3 missed what readers caught instantly

The jagged frontier of AI reveals itself when frontier models fail at basic fact-checking.

In August 1997, Microsoft Word urged a friend to replace the phrase “we will not issue a credit note” with the polar opposite – an auto‑confabulation that could have unleashed a costly promise.

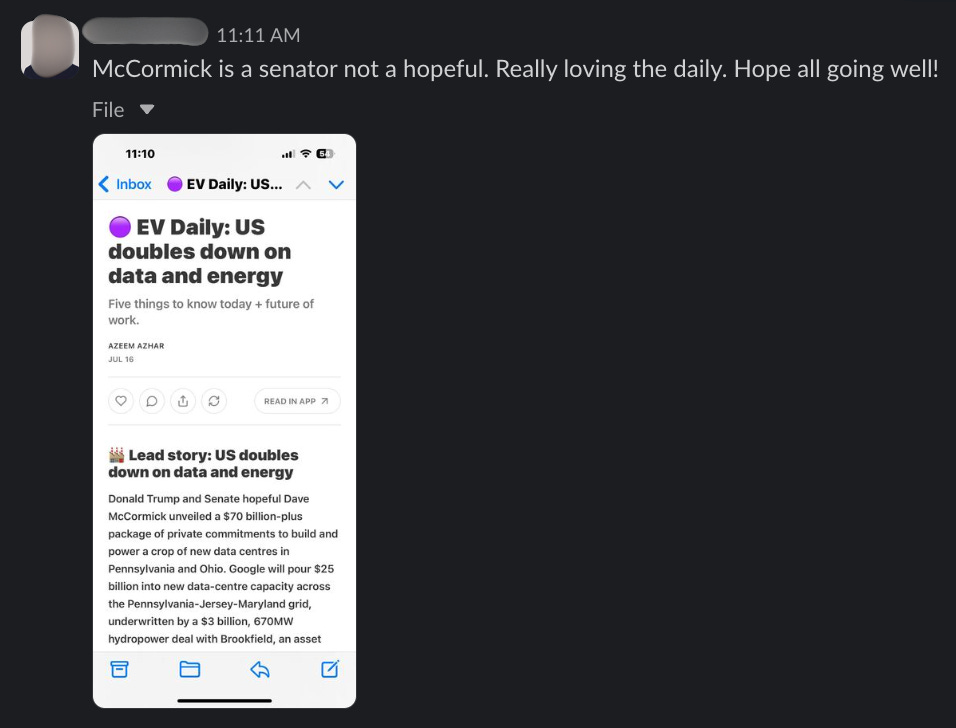

Fast‑forward to 17 July 2025: our AI-powered fact‑checker read a sentence claiming Senator Dave McCormick was a mere “hopeful candidate.” It labelled that fact as correct. Two eras, two smarter‑than‑us machines, one constant flaw: when software speaks with misplaced certainty, humans nod. Let’s unpack why.

We use an LLM-powered fact-checker to screen each edition. This fact-checker uses o3 (which has access to web search) to decompose the draft into discrete claims and check them against external sources. This system runs alongside human checkers.

For this item, the mistake that McCormick was a Senate hopeful rather than a sitting senator slipped through.

And to be fair, the sentence didn’t seem outrageous at a glance. The central point was about the scale of US investment in clean energy – hundreds of billions in potential funding. The institutional detail – Senator or Senate candidate – seemed secondary. But that’s exactly the problem. The model, and to some extent our human reviewers, prioritized the big thematic facts and let the specifics slide.

The final catch didn’t come from an LLM. It came from eagle-eyed readers who had the context and were quick to spot the mistake.

Once we realised what had happened, we tried to diagnose the problem. We ran the section through a number of different LLMs (including o3, o3 Pro, Perplexity and Grok). None of them spotted the problem.

We refined the prompts based on feedback from the models, but the problem persisted, even when we explicitly instructed the model to verify people’s roles. Here was iteration three:

The LLM has noticed the discrepancy between our original text and its own discovery. The process continued until we found a cumbersome prompt that identified the mistake.

Weirdly, when Marija Gavrilov ran the text and our most basic prompt through the Dia Browser, which draws from a range of different LLMs, it found the problem immediately.

In other words, the most basic tools outperformed the most advanced ones. This is a textbook illustration of AI’s jagged frontier, which describes how AI excels at some cognitive tasks while failing unexpectedly at others, with no smooth boundary between the two.

This was an instructive failure. Here’s what it taught us.

1. Our hybrid human-AI workflow needs a rethink

Our current editorial process uses LLMs as a first line of review, before human editors step in. The assumption is that the models will catch the obvious mistakes and that our team will catch the subtle ones.

But this case reveals a deeper flaw: the models didn’t catch what should have been obvious because the main point of the story was America’s new industrial policy. A good human subeditor would have caught (or at least checked) the claims about McCormick, who isn’t as well known as Trump. That’s the trust trap. Silence masquerades as certainty. When an LLM returns no objections, our cognitive guard drops; we mistake the absence of alarms for evidence rather than ignorance. How do you avoid it?

Obviously, our human processes need a review, and they will become more onerous. Equally, our automated fact-checking may need to become a multi-step or parallel pipeline, with different systems evaluating different classes of claims. I already do this earlier in my research process: I tend to use a couple of different LLMs to start framing an issue, and I use their points of concordance and disagreement as jumping-off points for further research.
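
To make the idea of a parallel pipeline concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not a description of the fact-checker we actually run: the names `Claim`, `review` and `review_draft`, the claim classes "role" and "figure", and both stub checkers are hypothetical stand-ins for real model calls. The one design choice it tries to show is that silence is never treated as confirmation: a claim goes to a human unless every checker assigned to it explicitly reports support.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Claim:
    text: str
    kind: str  # hypothetical claim classes, e.g. "role" or "figure"


# A checker returns one of: "supported", "contradicted", "unverified".
Checker = Callable[[Claim], str]


def review(claim: Claim, checkers: Dict[str, Checker]) -> dict:
    """Collect one verdict per checker and decide whether a human must look."""
    verdicts = {name: check(claim) for name, check in checkers.items()}
    # Only unanimous, explicit support clears a claim. No checkers, an
    # "unverified" verdict, or any disagreement all escalate to a human.
    all_supported = bool(verdicts) and all(
        v == "supported" for v in verdicts.values()
    )
    return {
        "claim": claim.text,
        "verdicts": verdicts,
        "needs_human": not all_supported,
    }


def review_draft(
    claims: List[Claim], routes: Dict[str, Dict[str, Checker]]
) -> List[dict]:
    """Send each claim to the checkers registered for its class."""
    return [review(c, routes.get(c.kind, {})) for c in claims]


# Stub checkers (assumptions): real ones would call different models or tools.
def always_supported(claim: Claim) -> str:
    return "supported"


def role_lookup(claim: Claim) -> str:
    # Stand-in for a checker that verifies a person's current office.
    return "contradicted" if "hopeful candidate" in claim.text else "supported"


claims = [
    Claim("McCormick is a hopeful candidate for the Senate", "role"),
    Claim("Hundreds of billions in potential clean-energy funding", "figure"),
]
routes = {
    "role": {"model_a": always_supported, "model_b": role_lookup},
    "figure": {"model_a": always_supported},
}

results = review_draft(claims, routes)
for r in results:
    print(r["needs_human"], r["verdicts"], r["claim"])
```

In this toy run the two checkers disagree on the role claim, so it is flagged for a human, while the funding claim passes. A claim whose class has no registered checker is also flagged, because an empty set of verdicts is treated as ignorance rather than evidence.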