🎯 Magnitudes of intelligence

Each order of magnitude in AI usage reveals a fundamentally different phenomenon

It starts with a couple having a summer picnic. A man lies down to doze as his partner reads in the sun. Then the camera pulls back at a precisely timed pace, widening the view by a power of ten every ten seconds. Charles and Ray Eames’ 1977 film, Powers of Ten, takes in the park, the city, the continent, the solar system and more.

Append a zero, and you change what kind of thing you are looking at. Scale does that. It isn’t merely more in number; it’s different in essence. When you add another zero, you need to think differently about the thing you once thought you understood.

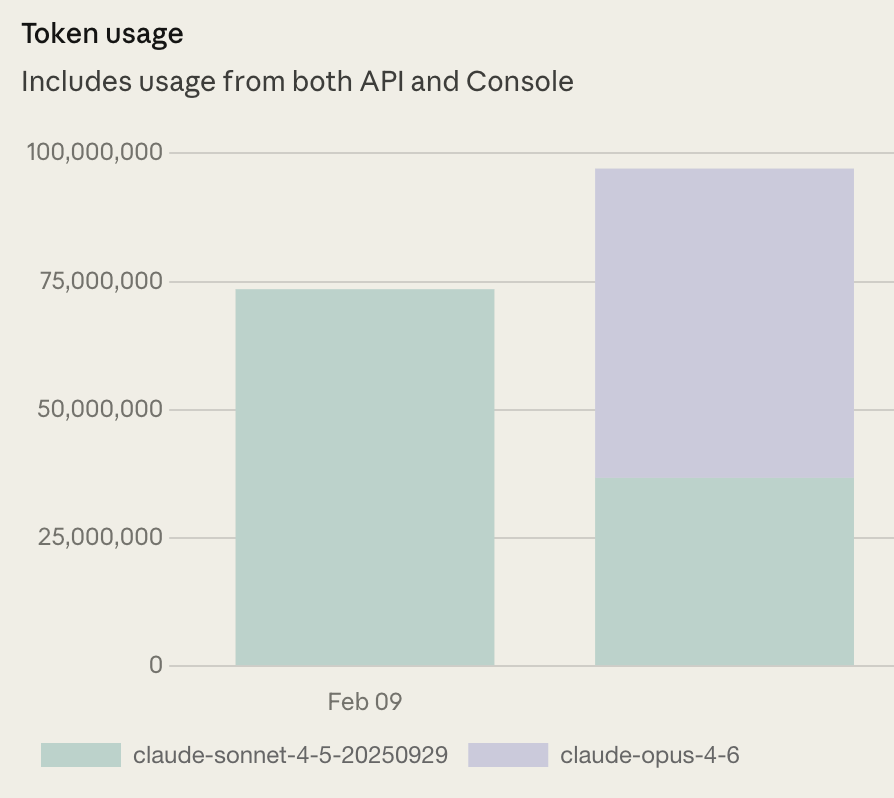

On 10 February, I consumed 97,045,322 tokens using Anthropic’s Claude API – on my own. A token is the atomic unit of today’s AI; it’s about three-quarters of a word or a tiny bit of a picture, a measure of a chunk of machine-made intelligence. Ninety-seven million is a lot of bits of words.

Most of us don’t think about token usage. We use our chatbots or coding tools, paying a monthly fee and occasionally hitting a usage cap. If you are using an app your firm built, you’ll likely never think about your tokens.

But, with tokens, as with most things, add another zero, and what you’re observing, or doing… becomes a different thing altogether. And at 97 million, it is a very different thing.

The token climb

When ChatGPT launched in November 2022, I used perhaps a thousand tokens a day. A question here, a prompt there. ChatGPT was a novelty, a gifted undergraduate who could write passable prose but couldn’t remember what you said five minutes ago. A thousand tokens is roughly 750 words. A short email’s worth of conversation with a machine. In the Eameses’ film, this was the picnic blanket: bounded, human-scale.

Add a zero.

By early 2023, GPT-4 arrived, and usage climbed to 10,000 tokens per day. This wasn’t just more of the same. GPT-4 could hold a thought. It could reason about a paragraph rather than just riffing on a sentence. I began trusting it with more real work because it consistently delivered good results. This was when I used ChatGPT to design a board game. We had zoomed out to the park.

Add another zero.

A hundred thousand tokens a day. This was the habit phase, late 2023 into 2024. The model became a daily collaborator: research summaries, first drafts, summarising long emails, checking contracts. On 6 June 2024, I submitted 23 queries to different chatbots, totalling 83,302 words – slightly more than 100,000 tokens. I stopped thinking in individual interactions and started thinking in sessions. The relationship left a trail. The city was now visible. My hunch is that many people are at this level, that of the city.
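For anyone who wants to check the arithmetic, here is a minimal sketch. It assumes the rule of thumb from earlier, that a token is about three-quarters of a word, applies uniformly. Real tokenisers vary by model and language, so treat the results as estimates. The function names are hypothetical, chosen only for illustration.

```python
# Assumption: the essay's rule of thumb of ~0.75 words per token.
# Actual ratios depend on the tokeniser, the model and the language.
WORDS_PER_TOKEN = 0.75


def words_to_tokens(words: int) -> int:
    """Estimate the token count for a given number of words."""
    return round(words / WORDS_PER_TOKEN)


def tokens_to_words(tokens: int) -> int:
    """Estimate the word count for a given number of tokens."""
    return round(tokens * WORDS_PER_TOKEN)


def order_of_magnitude(tokens: int) -> int:
    """Return the power of ten of a positive integer (1,000 -> 3)."""
    if tokens <= 0:
        raise ValueError("tokens must be a positive integer")
    # Counting digits avoids floating-point edge cases with log10.
    return len(str(tokens)) - 1


if __name__ == "__main__":
    print(tokens_to_words(1_000))          # 750
    print(words_to_tokens(83_302))         # 111069
    print(order_of_magnitude(97_045_322))  # 7
```

On these assumptions, the 83,302 words of 6 June 2024 come to roughly 111,000 tokens, the fifth power of ten, while the 97 million tokens of 10 February sit at the seventh.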

Add another zero.

A million tokens a day. This is where workflows enter. By the end of 2024, I began assigning repetitive, tightly scoped tasks to AI systems. They chuntered away like Stakhanovite worker bees. A script picked up my expenses from email and put them in a spreadsheet; another summarised YouTube videos or long articles, pushing them across for analysis. Granola was sitting in on most of my meetings, taking notes with increasing meticulousness. AI systems were starting to sit between me and things I needed to get done. It became second nature to fire up multiple Perplexity queries while scanning meeting notes to prepare for a call. I was slowly building the plumbing for an AI city that consumed 1 million tokens per day.

The Eameses would recognise this moment: the thing you’re looking at is no longer the same kind of thing. We had left the atmosphere.

Add another zero.

Ten million tokens a day. This was late 2025. Between Replit and Claude Code, I was writing software that actually helped me in my day-to-day. Every feature I built passed through a coding model, tokens in, tokens out. Much of this software orchestrates LLM pipelines, which, in turn, consume more tokens.

EV Prism, our reasoning and analysis backplane, talks to our research library, running automated queries nightly and chomping through a couple of million tokens a day. EV Clade, a multi-agent deliberation system, uses 150,000 to 200,000 tokens per engagement to produce exceptional critical analyses. The screenshot below shows EV Clade, which can interact with EV Prism during research activities.