🔮 Sunday edition #523: AI in 2030; the future of education; generative biology; AI moats++

An insider’s guide to AI and exponential technologies

Hi, it’s Azeem. OpenAI’s $3 billion acquisition of Windsurf isn’t about code assistants – it’s about controlling the critical feedback cycle where humans evaluate AI outputs. Meanwhile, the search paradigm is crumbling as Google searches in Safari declined for the first time in 22 years, with users flocking to conversational AI tools. The message is clear: in 2025, owning the feedback loop confers strategic advantage. Let’s dive in!

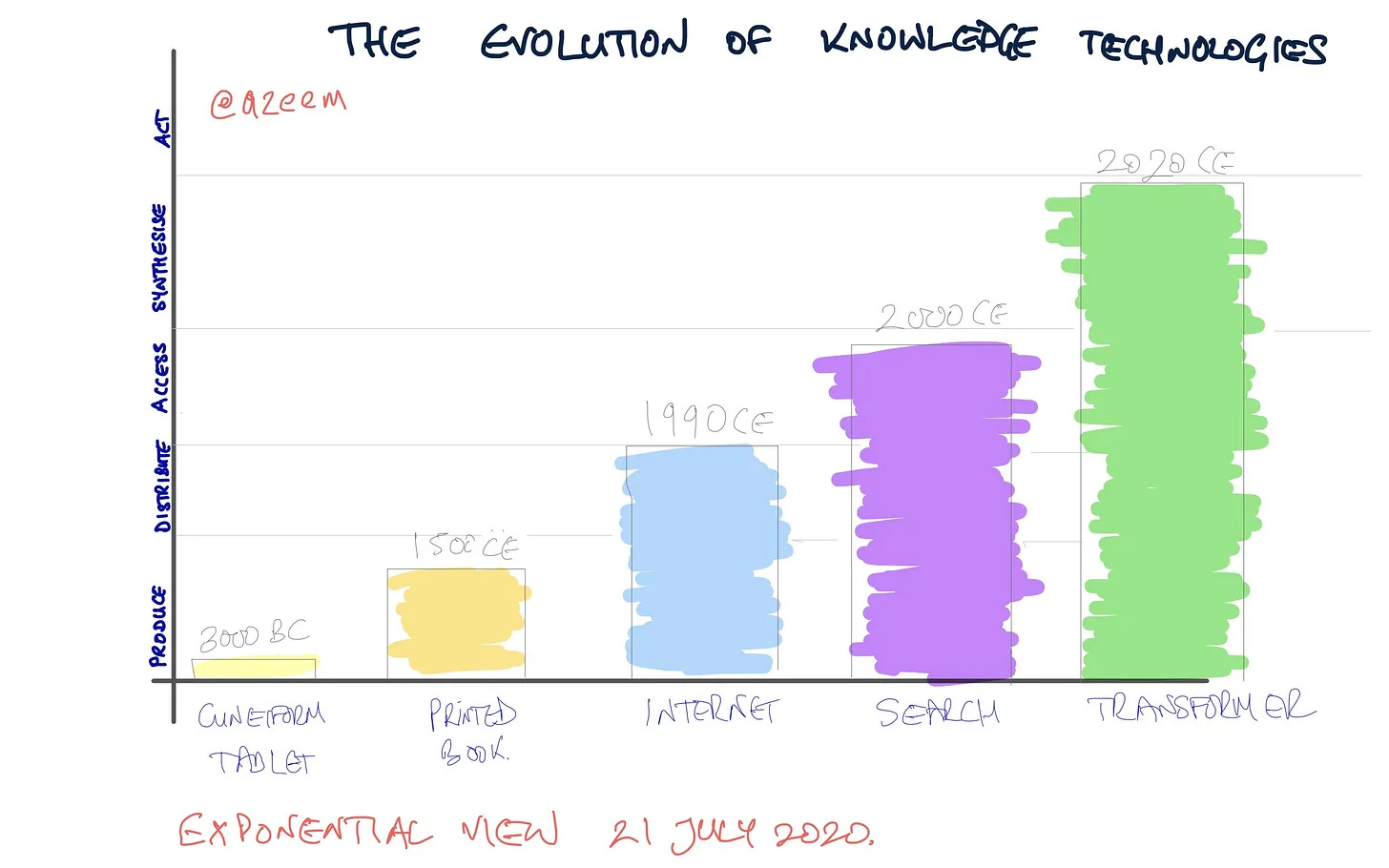

Today’s edition is brought to you by our sponsor, ElevenLabs, the complete AI audio developer platform. Build low-latency AI voice agents that sound human and can handle unpredictable conversations in the noisiest environments. Get started free.

AI in 2030

What does a world with AI look like in 2030? What does your daily life look like in 2030? In this week’s member commentary, I explore what a near-term future of improving AI may mean for our day-to-day lives.

By 2030, artificial intelligence has moved far beyond the prompt-and-response dance of its adolescence. It’s now the era of agentic AI: autonomous tool-using systems that understand, and even anticipate, you.

These AIs don’t just respond to prompts (although they do that too); they work in the background, acting on your behalf and pursuing your goals with independence and competence.

Cheat code education

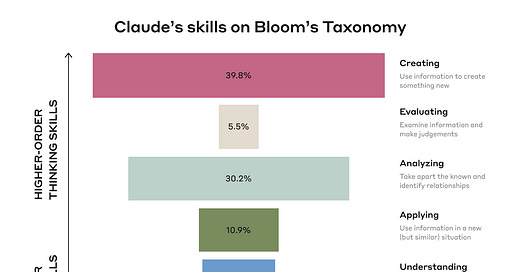

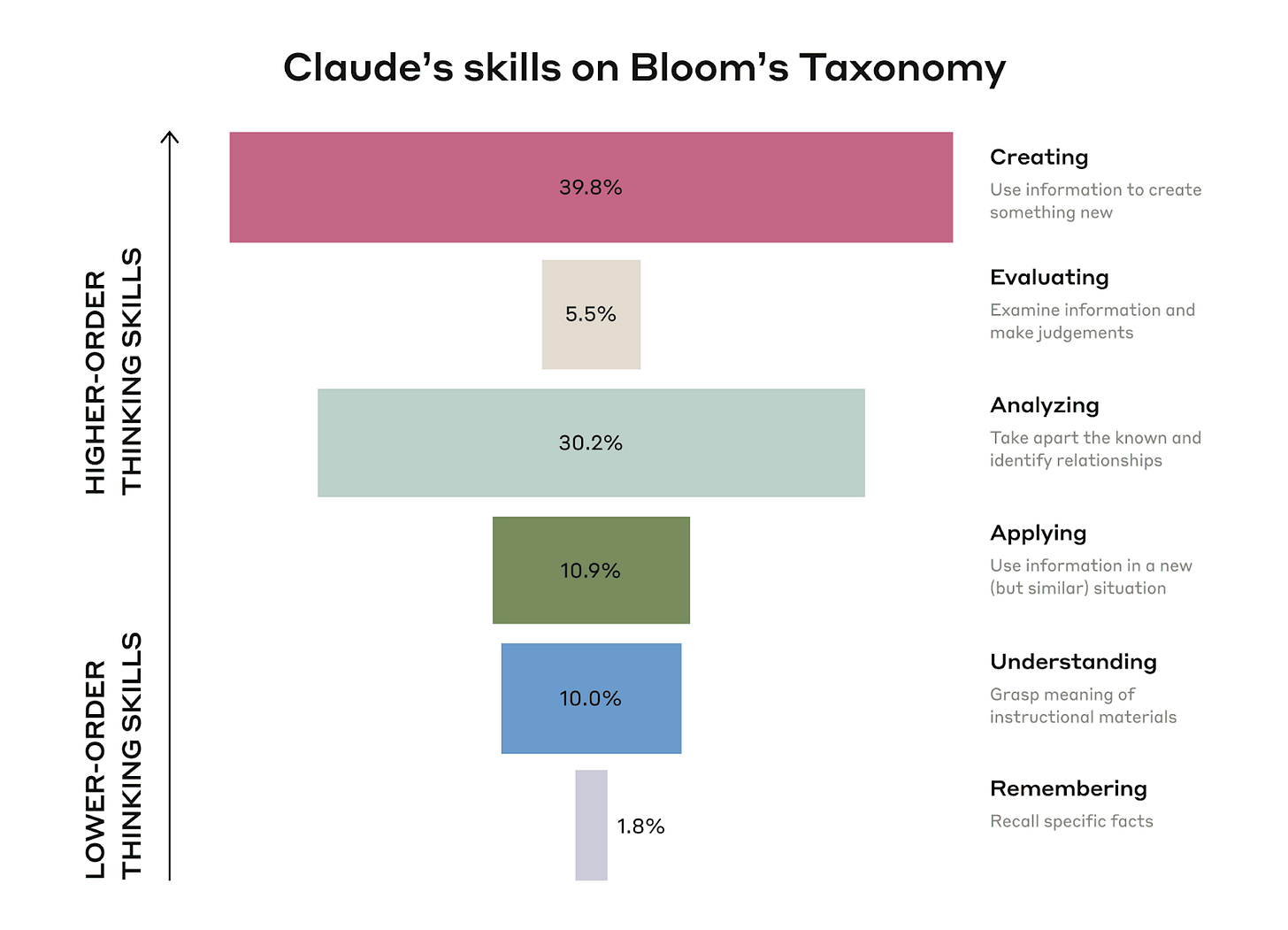

Last week, we argued that judgement is the key bottleneck for talent moving upward in the age of AI. This week, we turn to a common belief: that LLM-assisted “cheating” is an existential threat to schooling. A more honest framing is that students using LLMs to “cheat” are holding up a mirror to an assessment regime that was already cracked. It is a deadline screaming at our outdated ways of learning. Anthropic’s read of two million Claude chats shows students lean on AI to create (39.8%) and analyze (30.2%), while almost half the time they simply ask for the answer.

Far from worsening students’ skills, ChatGPT can improve them: a new paper in Nature confirms “the positive impacts of ChatGPT on learning performance, learning perception, and higher-order thinking.” The effects measured in this meta-analysis are surprisingly positive. The researchers conclude that when used appropriately, within well-scaffolded, problem-focused courses, chatbots can boost learning outcomes.

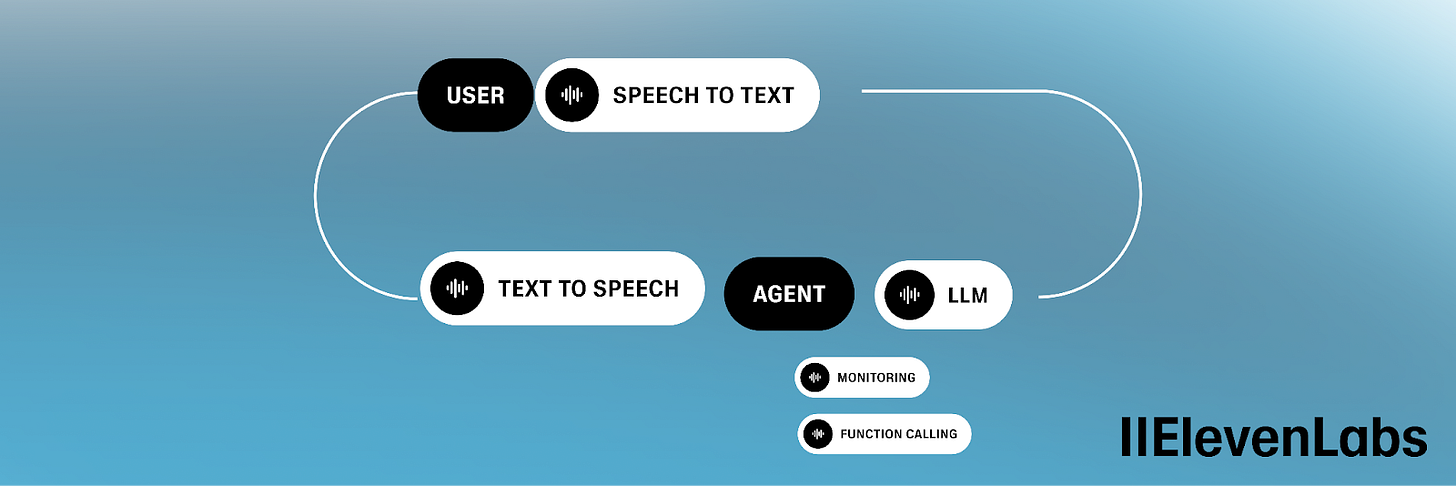

Feedback is the moat

Startups win not by having a moat, but by continuously moating – compounding advantages in a loop faster than anyone else. That’s why OpenAI just paid $3 billion for Windsurf: not to sell more coding assistants, but to control the loop where developers give real feedback on AI-generated code. Windsurf routes user prompts through rival models as well as GPT-4o, then attaches satisfaction scores and follow-up actions. Whoever controls that loop gets the metadata moat that matters – how humans actually debug, refactor and accept suggestions. It’s a reminder of the virtuous cycle Jensen Huang calls “Moore’s law squared”: better models beget better tools, which create better data, which train better models. In that flywheel, inference revenue is secondary. What counts is compounding advantage – and buying a product that sits at the moment of user delight (or frustration) is the cheapest way to pour accelerant on the loop.

Meta is building a moat of a different kind, around your emotional state. On Instagram, Facebook and WhatsApp, LLM-powered bots now offer romantic role-play. It’s sold as mental health support, but the business model is intention harvesting. In this feedback loop, the product is your confession.

And then there’s Google, on the defensive. Google searches in Safari fell for the first time in 22 years. Alphabet lost $250 billion in market cap. Users are switching to AI-native tools like ChatGPT and Perplexity. I called this in 2020: transformers mark the shift from cuneiform → printing press → web → search → post-search. In a world where knowledge starts with a conversation, who wants to spelunk through SEO rubble? I’d rather ask o3 and get a synthesized answer.

The antitrust trials might decide whether Google stays whole. But the user migration has already decided something more important: Google no longer owns the feedback loop. The default box in the browser bar has lost its monopoly on curiosity, and now it has to earn every single query.

See also:

Big congrats to EV member Fidji Simo on joining OpenAI as its new CEO of Applications!

Anthropic hires a top Biden official to lead its new ‘AI for social good team’.

Mistral’s new Medium 3 model rivals leading ‘non-reasoning’ LLMs in intelligence while offering up to an 80% price reduction compared to its predecessor.

President Trump scraps the Biden-era AI diffusion rule, the tiered export curb that was set to bite on May 15.

Elsewhere

A leap in generative biology: scientists showcase the first successful use of generative AI to design synthetic DNA enhancers – molecular “switches” that can precisely control gene expression in specific healthy mammalian cells.

Google’s AI “co-scientist” sifted through existing medicines and pinpointed epigenetic drugs – most notably the cancer drug Vorinostat – that can halt scar-forming signals and even help liver cells regrow. This offers a fresh path to treat liver fibrosis.

Amazon reveals its first robot with a sense of touch.

China, Japan, South Korea and the member countries of the Association of Southeast Asian Nations have come together around a shared strategy to reduce reliance on the US and deepen regional financial and trade ties.

NVIDIA is working on a modified version of the H20 chip for the Chinese market.

EVs now make up 97% of new car sales in Norway. These cars are the new energy system, as I discussed with Octopus Energy CEO Greg Jackson.

By tracking mobile signals and other data, The Economist reveals an unprecedented, sustained build-up inside Putin’s secret arms empire, suggesting Russia can maintain high production levels for a long time.

Microplastics can cross the blood-brain barrier in animals and affect behavior and cognition.

Alchemy! CERN scientists turn lead into gold for a fraction of a second.

Learning physically rewires the brain and changes how different regions communicate.

Thanks for reading!

Today’s edition is sponsored by ElevenLabs.

ElevenLabs is powering human-like voice agents for customer support, scheduling, education and gaming. With server- and client-side tools, knowledge bases, dynamic agent instantiation and overrides, plus built-in monitoring, it’s the fastest way to launch conversational experiences. Get started free.