🔮 Sunday edition #541: AI adoption myths. Carbon storage ceilings. Microsoft’s hedge. Nepal, microdrama, 50s ftw++

Hi all,

Welcome to our Sunday edition, where we explore the latest developments, ideas, and questions shaping the exponential economy.

Enjoy the weekend reading!

Azeem

It’s the end of AI as we know it, and I feel fine

AI business adoption is down. Or is it?

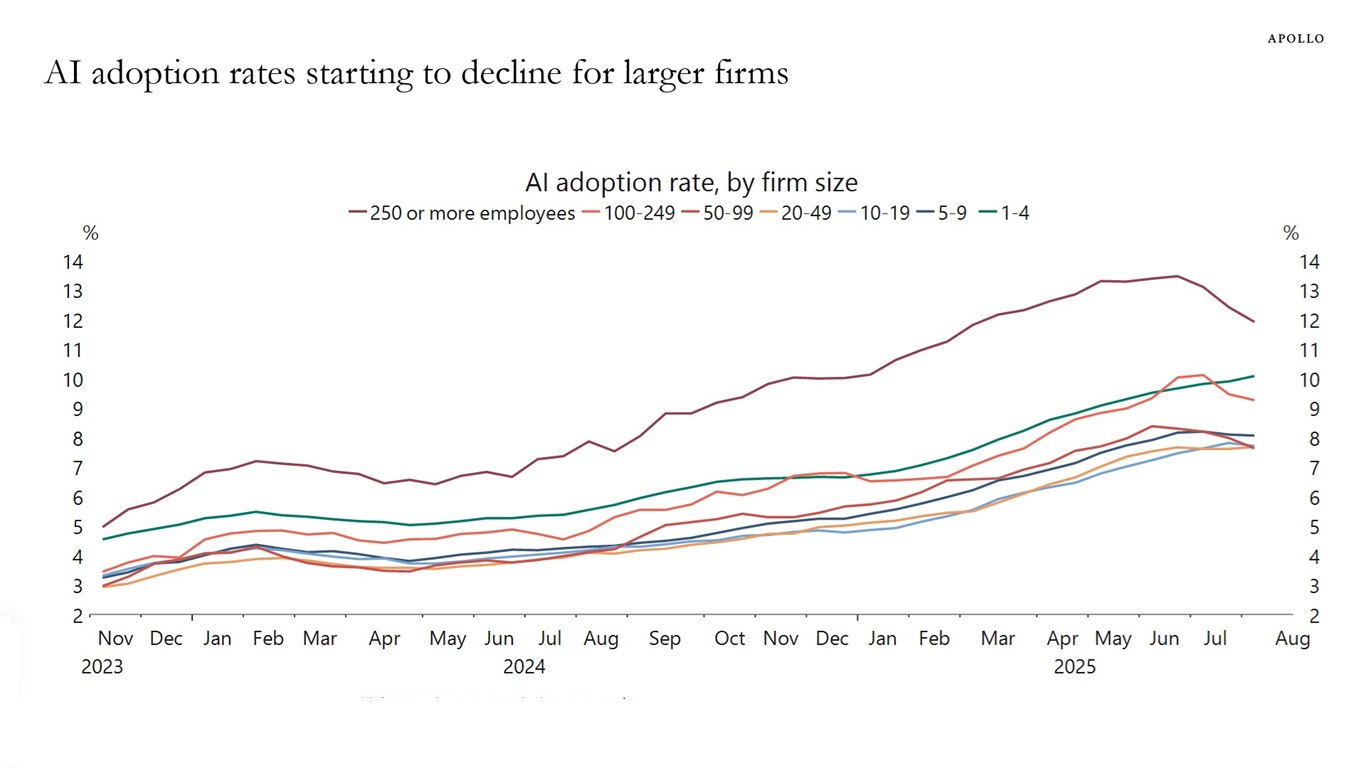

A few weeks ago, the mainstream media swooned over a study claiming that 95% of enterprise AI pilots fail to impact P&L. As we pointed out, the methodology was deeply flawed. A similar misreading lurks in the latest US Census Bureau data. At first glance, the survey seems to show that only 12% of large US firms report using AI and that usage is declining. But once you break the data down by sector, as Paul Kedrosky has done, most industries show rising adoption (with jagged swings more likely due to sampling error than real-world shifts).
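To see why sector-level swings can be mostly noise, here is a rough back-of-the-envelope sketch. It assumes a simple random sample and the textbook normal approximation, which is not how the Census Bureau weights its survey, and the sample sizes are hypothetical, chosen only for illustration. The one figure taken from the survey is the 12% headline share.

```python
import math


def proportion_margin(p: float, n: int, z: float = 1.96) -> float:
    """Approximate 95% margin of error for a surveyed share p with n respondents.

    Uses the normal approximation for a simple random sample (an assumption;
    real survey weighting would change the numbers).
    """
    return z * math.sqrt(p * (1 - p) / n)


# 12% is the headline share from the survey; the sample sizes are hypothetical.
share = 0.12
for n in (200, 2_000, 20_000):
    moe = proportion_margin(share, n)
    print(f"n={n:>6}: {share:.0%} +/- {moe:.1%}")
```

The smaller the slice, the wider the band. On these assumptions, a sector with a few hundred respondents could move by several percentage points between survey waves without anything changing in the real world.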

The deeper problem is definitional. The survey footnote lists “machine learning, natural language processing, predictive analytics, image processing, data analytics…” as forms of AI. If you believe only 12% of large companies do any data analytics, you’ll see why I’m sceptical. Misleading numbers like these skew the narrative and fuel headlines about an “AI bubble.” It is the question I hear most often after labour-market fears (funny how those two worries pull in opposite directions). Next week, we’ll have a major analysis on the AI bubble question. Stay tuned.

In the meantime, see also:

Oracle’s 40% share-price rise this week, partly on hopes of an OpenAI contract worth as much as $300 billion. This might have the scent of over-exuberance, since OpenAI doesn’t have the cash in hand yet.

Hallucinations are the complaint I hear most often from people using AI, although it’s not a problem we trip over very often. OpenAI’s latest research shows that training models to abstain – to say “I don’t know” – can sharply cut hallucinations. There was another worthwhile paper out this week, although a bit more technical, showing how determinism makes AI consistent.

Smol continues to be beautiful

In my annual outlook in January 2024, I forecasted:

Smaller models will be in demand: they are cheaper to run and can operate on a wider array of environments than mega-models. For many organisations, smaller models will be easier to use.