🔮 Sunday edition #533: AI masters math; war scenarios; reinforcement learning critique; Shanghai's robot movers++

Advanced AI capabilities, systemic risks, and infrastructure innovation

Hi all,

Welcome to our Sunday edition, when we take the time to go over the latest developments, thinking and key questions shaping the exponential economy.

Thanks for reading!

Azeem

For the most important themes of the week, check out our daily edition:

Tuesday: The Pentagon goes all-in on AI

Wednesday: US doubles down on data and energy

Thursday: AI’s inner monologue goes public

Friday: OpenAI agents

If you’d rather stick to the weekly edition only, you can change your email preferences to opt-out of the daily cadence.

The Tao of the Turing

A new OpenAI model achieved gold-medal performance in the International Math Olympiad (IMO), the world’s most prestigious math competition. They used a “reasoning LLM that incorporates new experimental general-purpose techniques” and the AI worked under the same time constraints as humans with no access to tools.

The model thinks…. for a long-time, for hours, in fact, according to Noam Brown, an OpenAI researcher. AI progress in math has been much faster than anyone expected, perhaps years faster than we might have estimated only a few years ago.

This matters because the IMO tests creative reasoning beyond rote computation and requires detailed, logical proofs, demanding original arguments. Problem designers intentionally seek “elegant, deceptively simple-looking problems which nevertheless require a great deal of ingenuity.” Is this a system that can start to mimic or exceed expert human creativity in an important domain?

Mathematics is the universal language for describing the physical world with applications across every domain from finance, the economy, climate, physics, engineering, optimization, biology. And, of course, in improving AI systems.

This could be quite the milestone…

Or could it? Terence Tao, the “Mozart of Math” famed for his ability to excel across disciplines, and a measured optimist about the potentials of AI urges caution: “in the absence of a controlled test methodology that was not self‑selected by the competing teams, one should be wary of making apples‑to‑apples comparisons … between such models and the human contestants.”

Is it more a case of quod non erat demonstrandum? Tell me in the comments.

Six paths to a war

A new paper identifies six pathways through which advanced AI might increase the risk of major war.

One of the most dangerous pathways is purely human – if national leaders come to believe that losing the race to AGI would significantly weaken their global standing, militarily or economically, they may take drastic action.

Suppose the US or China believes its rival is nearing a decisive breakthrough; it may be tempted to take preventive action through sabotage, cyberattacks, or even military strikes to delay or derail the competitor’s progress. One of the risks here is that we may not agree on what AGI is or what it looks like; leaders might overreact to vague signs that a rival is close to AGI. Their next step could lead to the point of no return. At the same time, ambiguity about AGI’s exact implications could make them hesitate.

If this reminds you of the history of nuclear deterrence, you’re not wrong.

See also:

Nirit Weiss-Blatt highlights a UK AI Safety Institute paper critiquing current research on AI “scheming” for significant methodological flaws.

Cosmos Institute argue this week that in building AI we must return to fundamental questions about human flourishing, not just engagement metrics – they call for a philosopher builder.

Harry Law urges that we need more productive critiques of AI from academia than calling it a “bullshit generator”.

a ReaLity check

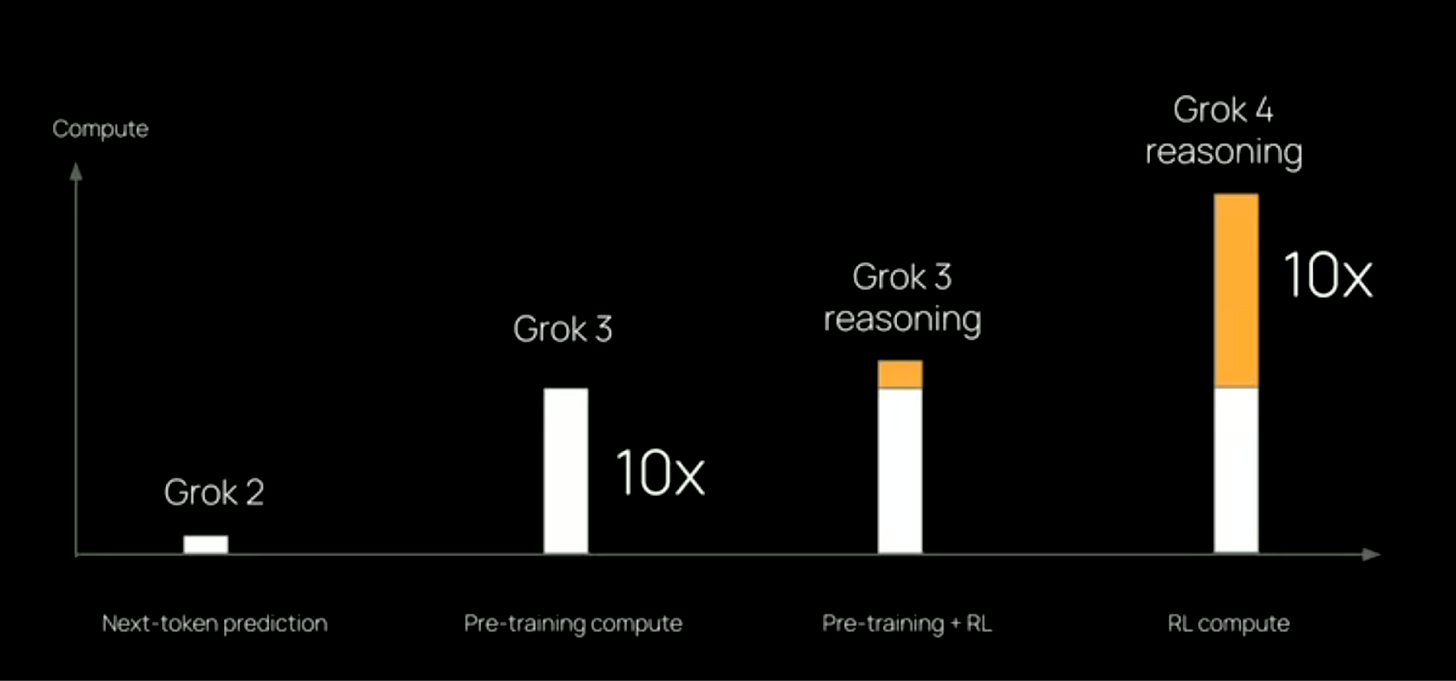

In the past two weeks, both ChatGPT Agent and Grok 4 debuted with heavy use of reinforcement learning to deliver major performance gains over their base models. But, as Andrej Karpathy put it, “[i]t doesn’t feel like the full story.” RL is powerful but it hits diminishing returns as tasks grow longer and more complex. We likely need new learning paradigms to push the frontier.

RL hasn’t yet had its big breakthrough moment, where it suddenly scales up to produce truly general-purpose, flexible agents. But even if RL does improve, there’s a fundamental limitation: it only learns from outcomes (“did this work or not?”) rather than from the process itself.