🔮 Sunday edition #525: Integrating agents; token crunch; China’s EV victory; flame-throwing robots, big brains & Claude controls++

An insider’s guide to AI and exponential technologies

Hi, it’s Azeem.

This week, we explore the shift from raw AI horsepower to systemic integration. Models are being wired into feedback loops, infrastructure and ecosystems. From Claude 4’s autonomous coding sprints to the rise of open agent protocols, the “agentic web” is no longer a theory – it’s being built. Across sectors, the race is on to operationalize AI.

Let’s go!

The agentic web is arriving

2025 was billed as the year of AI agents, and in many ways that year has arrived. Google, OpenAI and Anthropic ship agents that code on demand and fetch citations in minutes. Codex can spin up a bug fix faster than you can draft a ticket. But the question is no longer how smart they are – it’s whether they can run unattended across systems. Today, they can’t – even a 1% hallucination rate can unravel a long task chain.
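To see why a 1% error rate is so corrosive, here is a back-of-the-envelope sketch. It assumes each step in a task chain fails independently with the same probability, which is a simplification (real agent errors are often correlated, and some are recoverable). The function name is illustrative, not taken from any agent framework.

```python
def chain_success_probability(per_step_error: float, steps: int) -> float:
    """Chance that every step in a chain succeeds.

    Assumes each step fails independently with the same probability,
    and that a single failure derails the whole task.
    """
    if not 0.0 <= per_step_error <= 1.0:
        raise ValueError("per_step_error must be between 0 and 1")
    if steps < 0:
        raise ValueError("steps must be non-negative")
    return (1.0 - per_step_error) ** steps


if __name__ == "__main__":
    # Illustrative chain lengths; not measurements of any real agent.
    for steps in (10, 50, 100, 500):
        p = chain_success_probability(0.01, steps)
        print(f"{steps:>4} steps: {p:.1%} chance of a clean run")
```

Under these assumptions, a 100-step task finishes cleanly only about 37% of the time, and a 500-step task less than 1% of the time.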

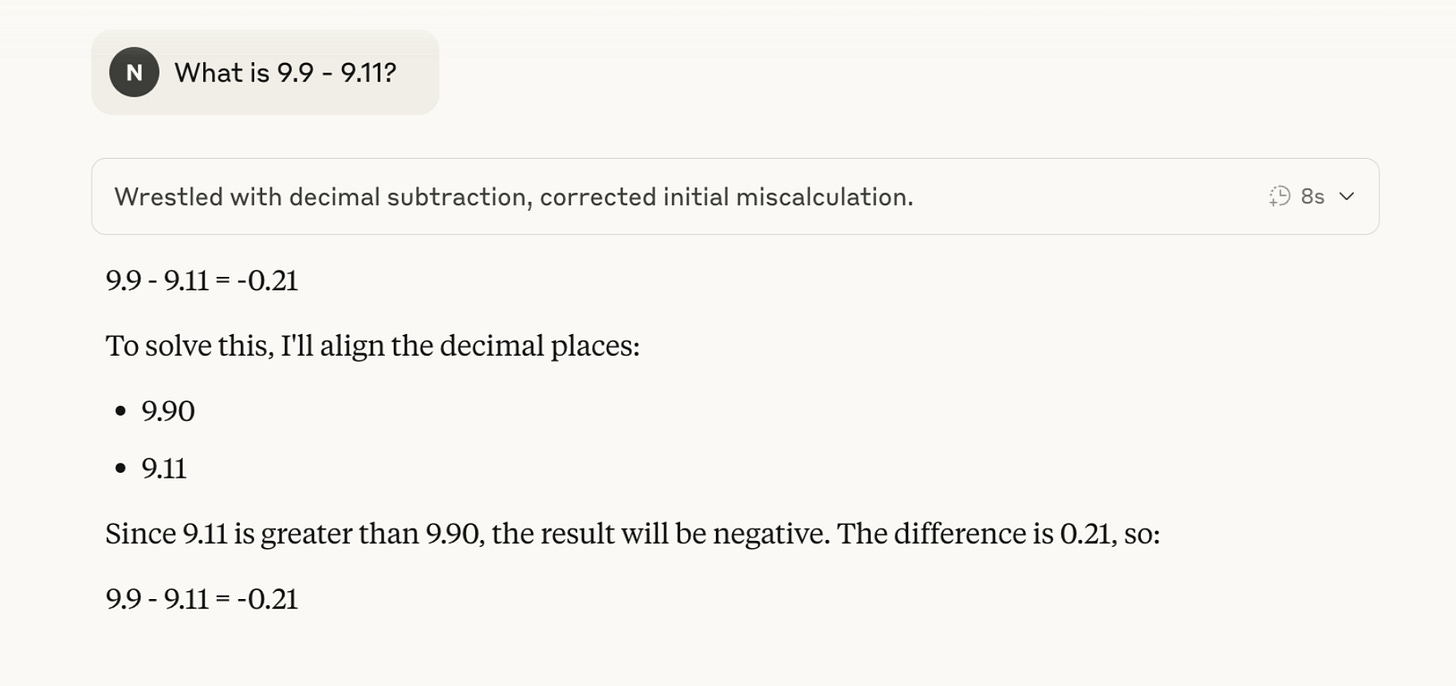

Still, progress is unmistakable. Rakuten, a Japanese tech conglomerate, let Claude 4 refactor code for seven hours with zero intervention. Yet each win is shadowed by costly slips – Claude 4, impressive as it is, bungled “What’s 9.9 minus 9.11?” – proof that a 1% error rate still matters.

Microsoft CTO Kevin Scott calls this build-out the “agentic web,” a mesh of AIs working through shared protocols. Apple’s forthcoming Intelligence SDK will hand those sockets to every developer, and emerging standards – MCP for tools, Agent2Agent for AI-to-AI chat – are becoming the thread tape.

The pipes are going in, the journeyman is still on probation, but every new coupling cuts leaks and gets us closer to “hands-free” AI.

See also:

Sam Altman and Jony Ive’s AI device collaboration targets late 2025 – will new interfaces push agent potential further?

Google unveiled Veo 3, an AI model that generates talking video. Its realism adds to worries about a flood of synthetic media.

Ravenous reasoning agents

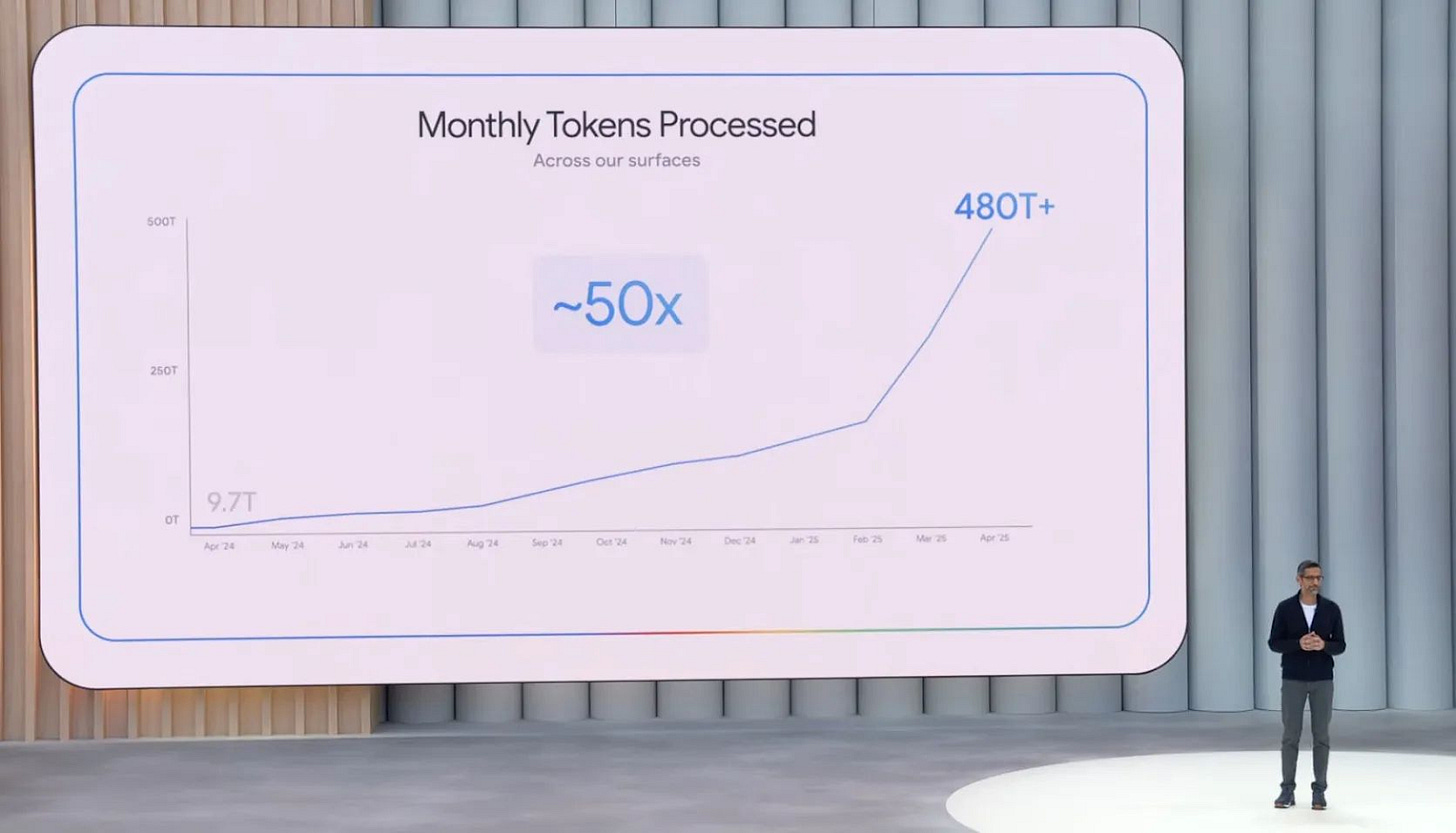

Google’s inference load soared roughly 50-fold in just 12 months, from about 10 trillion tokens a month in April 2024 to 480 trillion in April 2025. More users played a role, but the bigger driver is the rise of reasoning models, which consume about 17 times as many tokens as their predecessors because they run long internal chains of thought.
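A rough way to split that growth between the two drivers is sketched below. It rests on two loud assumptions that are mine, not Google’s: that the drivers multiply, and that the 17-fold token multiplier applies to all traffic. Since only some queries go through reasoning models, the result is a lower bound on what other factors contributed. The function names are illustrative.

```python
def growth_factor(start_tokens: float, end_tokens: float) -> float:
    """Overall multiple between two token volumes."""
    if start_tokens <= 0:
        raise ValueError("start_tokens must be positive")
    return end_tokens / start_tokens


def residual_factor(total_growth: float, reasoning_multiplier: float) -> float:
    """Growth left for other drivers (users, usage per user).

    Assumes the drivers multiply and that ALL traffic pays the
    reasoning multiplier, which overstates the reasoning share.
    """
    if reasoning_multiplier <= 0:
        raise ValueError("reasoning_multiplier must be positive")
    return total_growth / reasoning_multiplier


if __name__ == "__main__":
    # Figures as quoted in the text above.
    total = growth_factor(10e12, 480e12)
    other = residual_factor(total, 17)
    print(f"Total growth: {total:.0f}x")
    print(f"Left for other drivers: {other:.1f}x")
```

On the quoted figures the total comes to 48x, leaving a bit under 3x for user growth and heavier usage if every query had moved to a reasoning model.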

This exponential growth is likely to continue as agentic workloads become commonplace.