🔥 Beyond human: OpenAI’s o3 wake-up call

A reasoning breakthrough arrives months - not years - after its predecessor. Here's why we need to talk

Last night, OpenAI previewed a new reasoning model, o3. I feel it’s important enough to share some quick thoughts about it.

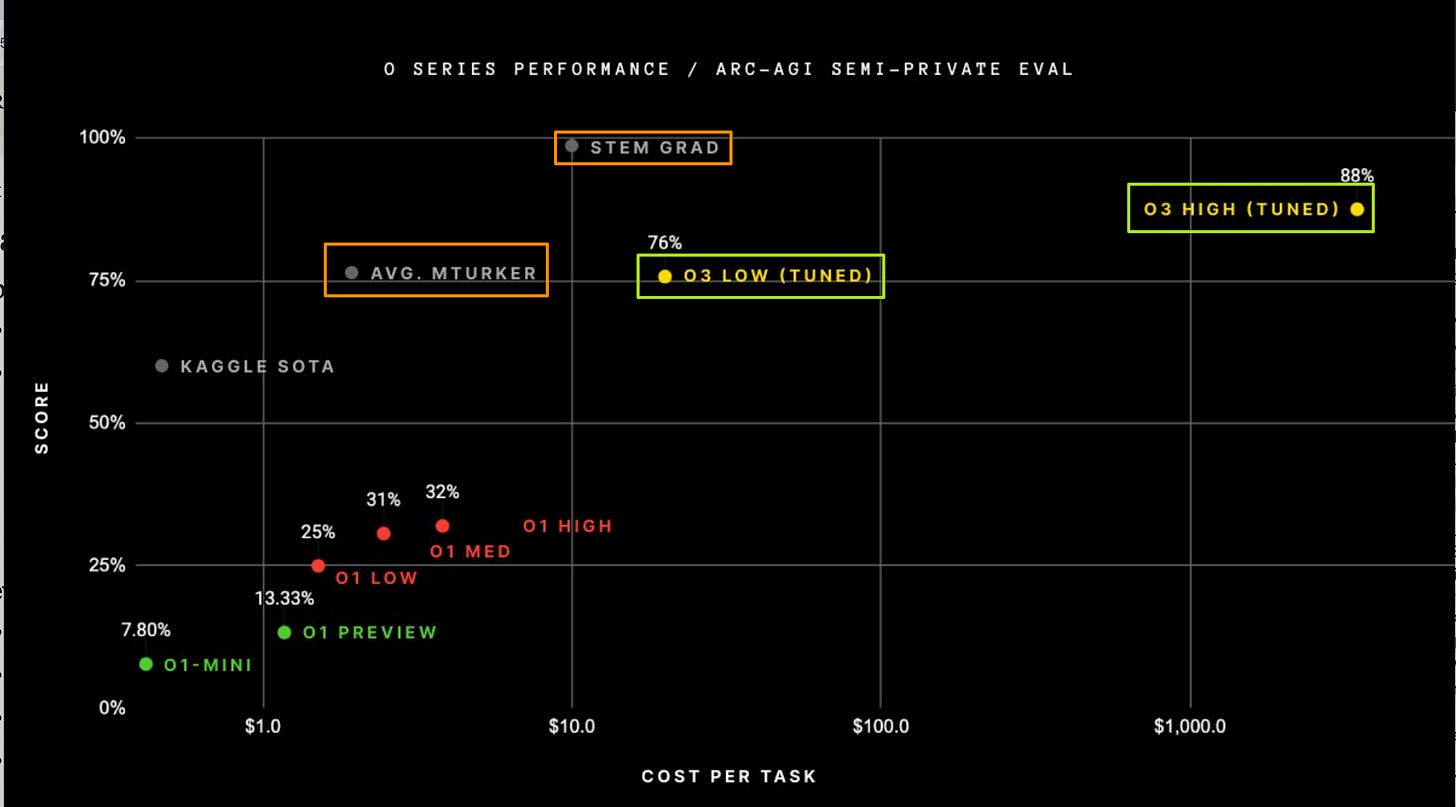

Basic facts: The new model is good at reasoning in mathematical and programming domains. It scores really well on a benchmark called ARC-AGI, coming in above the average human. Spend more money on it, up to $3,500 per task, and it approaches the performance of a STEM graduate.

The ARC-AGI benchmark has been very tough for machines. At the start of the year, the best they could do was 5%, but in September, they got to 32% and now, just three months later, to this 88% score. (The crazier version of this chart is at the foot of this letter.)

On maths, o3’s score is “incredibly impressive” according to one researcher. It scores 25% on a “brutally difficult benchmark with problems that would stump many mathematicians.” Earlier this year, maths guru Terence Tao said that benchmark was “extremely challenging [and would] resist AIs for several years at least.”

In coding, o3’s benchmark score would place it 175th on the leaderboard of Codeforces, one of the toughest competitive coding tournaments in the world. In other words, it is better than virtually every human coder.

#1 Things went fast

o3 has arrived just a couple of months after o1. This marks a dramatic shift from large language models' 18-24-month development cycles. Unlike large language models, which need the huge months-long industrial process of training bigger and bigger systems on more and more data, systems like o3 improve their performance at the point where the query is asked (aka inference time).

My conclusion is that we could continue to see performance improvements quite quickly.

#2 The economics will improve

The best results came from spending $3,500 per task, which is the cost of computing, searching, and evaluating possible answers at inference time. Early versions are often expensive, but we can assume that the performance we get at $3,500 will cost substantially less, perhaps a dollar or two, within a couple of years.

The cost of GPT-4-quality results has declined by more than 99% in under two years. GPT-4 launched in March 2023 at $36 per million tokens. Today, China’s DeepSeek offers similar performance for $0.14 per million tokens, or roughly 250 times cheaper.
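For anyone who wants to check that arithmetic, here is a quick back-of-the-envelope sketch. It uses only the two prices quoted above; the function and variable names are purely illustrative, and it assumes both prices are per million tokens and directly comparable.

```python
# Prices in US dollars per million tokens, as quoted in the text.
# Assumption: the two figures are measured on a comparable basis.
gpt4_launch_price = 36.00  # GPT-4 at launch, March 2023
deepseek_price = 0.14      # DeepSeek, at the time of writing


def price_drop(old_price: float, new_price: float) -> tuple[float, float]:
    """Return (how many times cheaper, percentage decline)."""
    times_cheaper = old_price / new_price
    percent_decline = (1 - new_price / old_price) * 100
    return times_cheaper, percent_decline


times_cheaper, percent_decline = price_drop(gpt4_launch_price, deepseek_price)
print(f"{times_cheaper:.0f}x cheaper, a {percent_decline:.1f}% decline")
# prints: 257x cheaper, a 99.6% decline
```

So "250 times cheaper" is a slight rounding down, and "more than 99%" holds comfortably.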

Lower cost means more use.

#3 Push the frontiers of science and engineering

What constraint does expert-level human maths or coding alleviate? The most obvious place would be in science and engineering. These tools would allow experts to expand their capabilities, pursue more avenues more rapidly, and test and iterate faster. After all, we don’t have an unending surplus of top-class coders and mathematicians.

At some point, the ongoing improvement of these tools could give us access to conceptual problem spaces we can’t access without them. This is the case with many complex fields, especially mathematics, which are far removed from everyday experience and direct observation. Consider, for example, category theory, homology, and higher-dimensional algebra.
