🔮 Azeem's commentary: On the generative wave (Part 1)

Developer uptake has been impressive

There has been an explosion of services in the field of generative AI. These systems, typically using large language models at their core, are expensive to build and train but much cheaper to operate.

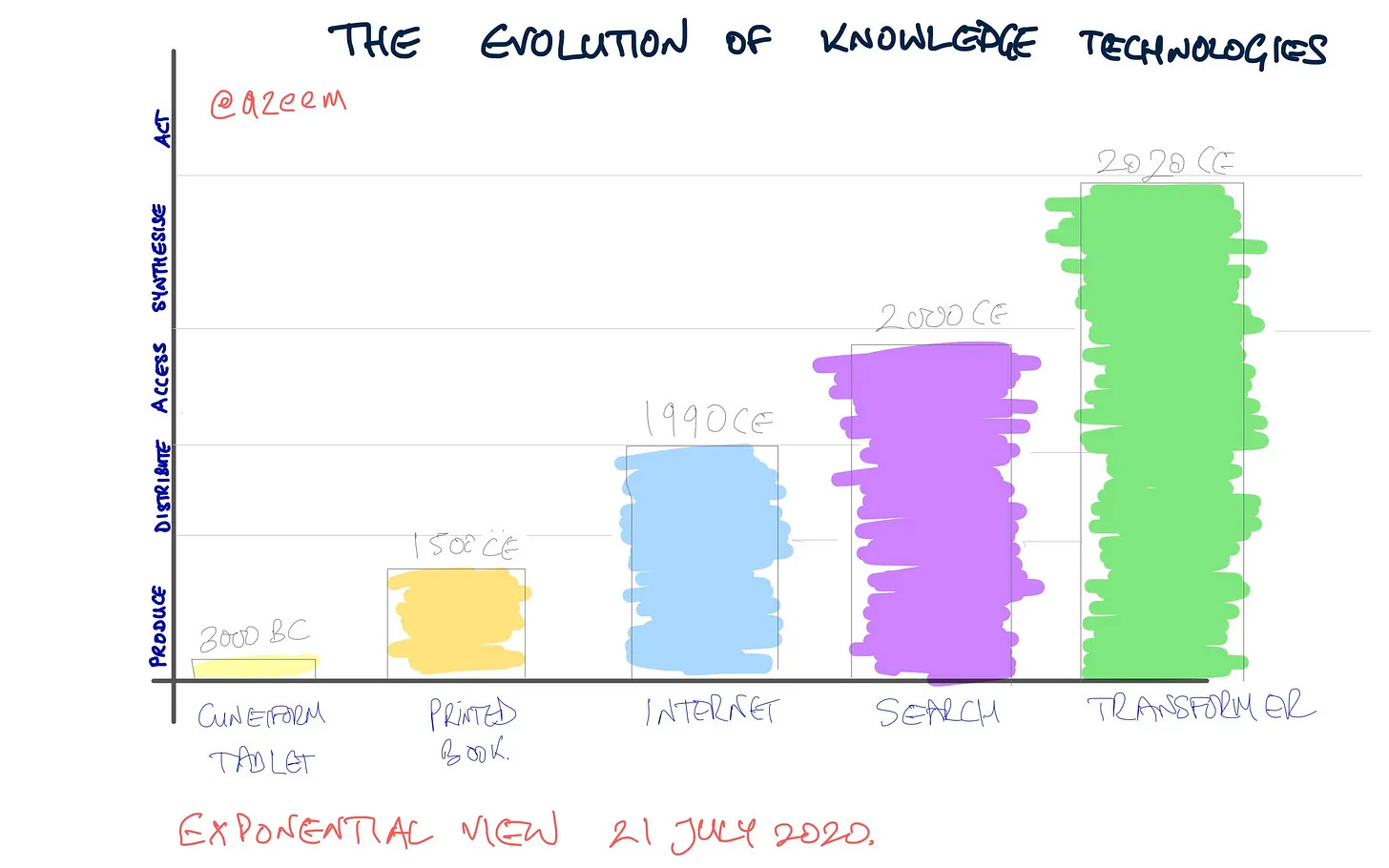

I want to historicise this trend. Back in July 2020, I wrote that large language models like GPT-3 (I referred to them as transformers back then) are "capable of synthesising the information and presenting it in a near usable form." They represented an improvement over previous knowledge technologies because they would present synthesised information rather than a list of disparate search results.

In my simplistic framing, transformers were about synthesis. I studiously avoided defining what I meant by synthesis, but 30 months later, it's time for me to refine that model. I think I was trying to suggest that "synthesis" meant responses to a query that could be drawn from many different sources, as we were seeing with GPT-3 over text. What I missed was how powerful these large models would become. I didn't anticipate how quickly they would become multimodal, across text, images and audio; how they might be capable of de novo synthesis; or how verticalisation would make them more powerful within specific domains.

Let’s go over these.

Search

I've been playing around with two search-style services: Metaphor, a general search engine, and Elicit, for academic research. Metaphor is a bit weird. I haven't been able to write good queries for it, but I have found it helpful in surfacing useful results, even in topics that I know something about. (See this search on "technology transitions". Log in required.)

Elicit is really impressive. It searches academic papers, providing summary abstracts as well as structured analyses of each paper. For example, it tries to identify the outcomes analysed in a paper or the authors' conflicts of interest, and it makes citations easy to track. (See a similar search on "technology transitions". Log in required.)

But I have nerdy research needs for my work.

For my everyday searches, like "helium network stats", "water flosser reviews", "best alternative to nest", "put a VPN on a separate SSID at home" or "canada population pyramid", Google still works pretty well. I haven't quite figured out how to use Metaphor to replace my Google searching.

It feels a bit like the Dvorak keyboard: better than QWERTY, but QWERTY had the lock-in, and QWERTY is what we use today. Metaphor may be better than Google, but I can't yet grok it.

My sense is that Elicit's focus on a specific use case is the more promising approach.

Cross-domain

While GPT-3 showed text generation capabilities, we’re still getting used to cross-modal tools.

Text-to-image is now commonplace. But Google and others have already shown off text-to-video: type in a prompt and get a short movie.

Generative approaches are now finding their way into molecular design. Researchers at the University of Illinois have prototyped a system that translates between molecular structures and natural language. One could imagine such a system generating molecules that match very specific requirements. ("Give me a molecule that is translucent in its solid form and smells of mint.")